Are campaigns getting cancelled or running SUPER fast? #35

Comments

|

I should note that in |

|

There are a lot of things you can check. Any errors in execution would show up earlier in the output log than what you provided.

|

|

Thanks, and sorry, I just tore down and created a new NPK instance because I was hitting some limit error warnings that I thought were false positives (see this issue). I'll try these same crack jobs in the new instance and see how they shake out and then update the issue. |

|

Hi again @c6fc thanks for the help. Ok I ran another crack job that should've taken about 16 hours, but only ran about 5 minutes. There are two output logs that both conclude with essentially this: File management page shows no cracked hashes. Campaign management shows the campaign completed. Prior to the output above, there are a ton of lines that appear to be setting up the infrastructure/campaign: I searched the whole output for the word "error" and besides the ones mentioned above, I see one chunk like this: If you need additional info or want to see the whole log let me know. Thanks, |

|

You're doing a crack in NetNTLMv2 mode, so make sure you're only providing the hash itself. Check the type 5600 example at https://hashcat.net/wiki/doku.php?id=example_hashes and make sure yours is being provided in the same format, one hash per line. As a sanity check, you can also verify that you have a usable hash file by running hashcat on your own machine with parameters similar to those you see in the output logs. If hashcat can ingest it and start the cracking process without using the --username flag, then so can NPK. Give that a shot and let me know how it goes. |
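For anyone who wants a quick pre-check before uploading, here is a minimal Python sketch of the "same format, one hash per line" advice above. It assumes the mode 5600 layout is `user::domain:server_challenge:nt_proof_str:blob` with a 16-hex-character challenge and a 32-hex-character proof string; the regex and the `find_bad_lines` helper are illustrative names, not part of NPK or hashcat, and running hashcat itself remains the real test.

```python
import re

# Assumed layout of a hashcat mode 5600 (NetNTLMv2) line:
#   user::domain:server_challenge:nt_proof_str:blob
# The field lengths below are an assumption based on the usual format.
NETNTLMV2_LINE = re.compile(
    r"^[^:]*::[^:]*:[0-9a-fA-F]{16}:[0-9a-fA-F]{32}:[0-9a-fA-F]+$"
)


def find_bad_lines(text):
    """Return (line_number, line) pairs that do not look like mode 5600 hashes."""
    bad = []
    for number, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped:
            continue  # blank lines are ignored rather than reported
        if not NETNTLMV2_LINE.match(stripped):
            bad.append((number, stripped))
    return bad


if __name__ == "__main__":
    # Placeholder values only; these are not real captured hashes.
    sample = "\n".join([
        "alice::CORP:0123456789abcdef:" + "0" * 32 + ":0101000000000000",
        "CORP\\bob:some-other-export-format",
    ])
    for number, line in find_bad_lines(sample):
        print(f"line {number} does not look like NetNTLMv2: {line}")
```

A line that fails this check is worth inspecting by hand, but a line that passes is not guaranteed to crack; it only means the shape matches what the sketch assumes.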

|

Much appreciated. I grabbed that hash file off NPK (there are three users/lines in it), copied to a text file on Kali and ran straight through with I just got a bundle of NTLM hashes from another engagement. I'm gonna run it through the same wordlist/rules/masks just to see if I run into the same issues. I'll also just try ONE NTLMv2 hash as well. Will keep you posted, thx again |

|

Actually, I hadn't noticed that the S3 bucket giving the 403 forbidden was the userdata bucket. Your instance definitely shouldn't be getting errors from that. Let me do some testing. It's possible this is related to the AWS permissions change that affected other folks a couple weeks ago. |

|

Yup, sure enough.

The only reason this should ever happen is either if the presigned URL was created wrong, or if the campaign was started a long time after it was created. The campaign should throw an error in such a case, but I've never personally tested it (this check happens at Did you by chance wait a long time after creating the campaign before you clicked 'Start' on the dashboard? Or is your host possibly configured with the wrong timezone? |

|

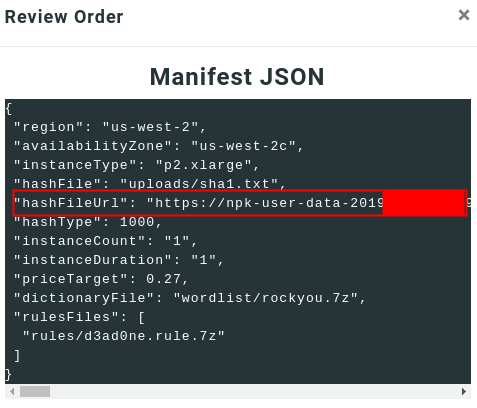

As a sanity check for this, when you go to create a campaign, wait several seconds at the 'Review Order' screen. You'll eventually see the 'hashFileUrl' change from Copy the URL and visit it with a browser (don't forget to delete the double-quote at the end though). It should show your hashes. If it doesn't, let me know. |

|

Ok so in looking back at the history of some of the failed jobs, it does look like in a few cases Amazon took ~2 hours to actually fire up the instances and do its thing (I think those jobs were all multi-instance). Does the hashFileUrl stay valid for only a short amount of time? If so, that could be the cause of failure in these cases... But, with that said, I changed my deployment VM from UTC to CST (just to put it in my time zone), rebooted the VM and ran Then I took a single hash (that I was able to crack totally fine with hashcat on my own machine), loaded it up into NPK and selected a single instance. I was able to see the full hash URL before submitting the job. Then I hit "start" right away, and Amazon lit up the spot immediately and ran through the job. But unfortunately, it failed again with Weird right? Any other things I can try or logs/troubleshooting I can provide? |

|

The hashFileUrl is only valid for an hour, so the compute nodes need to come up and download that file within that time. A couple questions about your most recent run:

If you didn't get a 403 this time around and it still failed, I'd love to work with you more closely on figuring this out. DM me and we'll get something coordinated if you're willing. |
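To make the one-hour window concrete, here is a rough Python sketch for checking when a presigned URL stops working. It assumes the URL uses AWS Signature Version 4 query parameters (`X-Amz-Date` and `X-Amz-Expires`); whether NPK's hashFileUrl is signed that way is not confirmed in this thread, and older SigV2-style URLs carry an `Expires` epoch value instead. The function names and the example URL are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse


def presigned_url_expiry(url):
    """Return the UTC time at which a SigV4 presigned URL stops being valid.

    Assumes the query string carries X-Amz-Date (YYYYMMDDTHHMMSSZ) and
    X-Amz-Expires (lifetime in seconds); raises KeyError otherwise.
    """
    query = parse_qs(urlparse(url).query)
    signed_at = datetime.strptime(
        query["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ"
    ).replace(tzinfo=timezone.utc)
    lifetime = timedelta(seconds=int(query["X-Amz-Expires"][0]))
    return signed_at + lifetime


def is_expired(url, now=None):
    """True if the URL's validity window has already closed at `now` (UTC)."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now >= presigned_url_expiry(url)


if __name__ == "__main__":
    # Hypothetical URL for illustration; not a real bucket or signature.
    url = (
        "https://example-bucket.s3.amazonaws.com/hashes.txt"
        "?X-Amz-Date=20200101T000000Z&X-Amz-Expires=3600&X-Amz-Signature=abc"
    )
    print("expires at:", presigned_url_expiry(url).isoformat())
    print("expired now:", is_expired(url))
```

If a spot request sits pending for longer than the lifetime shown here, a 403 on download would be the expected result under these assumptions. The comparison also depends on the local clock being correct, which is why a wrong system time can look like an expired URL.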

|

Hey there, yep I saw the hashFileUrl fine too. Now that I've had a few hours of sleep, let me try everything ONCE MORE today, double-check my work and report back. |

|

Hey, sorry I totally spaced on writing back. Well after some more sleep and another run at the hashes, things seem to be running along just fine now! I'm not totally sure what the issue was unfortunately. I did delete and reimport my hash file but I don't think it changed from the first import. I realize this will not help anybody who has the same issue, but I'm so happy it's working - thanks! Before closing this out, one other quick question...if I had some feature requests should I just open them as issues here or would you prefer them emailed or sent in some other way? I LOVE what this project can do but I think it could kick even more behind if it could do some of the hatecrack methodology (https://github.com/trustedsec/hate_crack) as that tends to give me a high number of cracked hashes in a short amount of time. |

|

I'll take a look and open a separate issue if it's something I can add. |

Hi there,

I set up a campaign today to crack 2 NTLMv2 hashes with 2 p3.8xlarges using the ACDC wordlist and OneRuleToRuleThemAll. NPK estimated this effort at 16 hours, but I kicked the campaign off and it seemed to finish in just a few minutes.

From what I can see on the NPK and AWS side, it doesn't appear that anything cancelled it prematurely. I did notice that on the AWS side it sat in a pending state (can't remember the exact status name) for about 45 minutes before seeming to find the right resources and firing up.

The NPK output log ended in:

Is this indicative of a clean run?

Brian
