-

Notifications

You must be signed in to change notification settings - Fork 449

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Hue deviation and noise : 64*64 -> 256*256 #69

Comments

|

@uminaty I have encountered the similar problem where the trained model produces noisy images with a colour shift. I haved tried the same setting to train the model for 50k iters over a much larger dataset celeA-HQ than my own dataset (100 images of a single object) and the outputs look good. I am now trying different amount of data (in celeA-HQ), learning rate or model structure ... But the reason may be the data distribution of training data I think ... |

|

@winnechan Thanks for your answer! The problem is that I already have more than 50,000 images in my training data... did you use the settings provided in the json file without modifying them? I tried to do it with 128*128 images but there is always this colour shift... it's really weird because some images are very good, and others very noisy and colour shifted. I wonder if I should just leave it for 1,000,000 iterations and hope that it gets better |

|

@uminaty the celeA-HQ I use is with 30k images and the model trained over 50k iters produces images without noise, the existing colour shift may be due to the random noise chosed at the beginning of sampling. I am wondering whether the diversity of the training data matters to cover the whole latent space of normal noise (which is similar to the GAN) ... |

|

@winnechan I see, is there other option for the random noise ? Would it be possible for you to share your .json config file ? I'd like to try your configuration |

|

Hi everyone! If anyone has the same problem as me, please note that the problem may be due to a learning rate that is too high (1e-4 in my case with batch sizes of 16 or 4). By dividing the lr by 10 I got excellent results! I would suggest to use a lr scheduler as proposed in the paper. Of course if you can afford to greatly increase the batch size, this will also bring significant improvements. If even after lowering the lr you still have noise or color shift, I suggest increasing the number of channels and the number of res blocks. |

|

I encountered similar colour shift problem with the original sr_sr3_16_128.json config. However, after around 300k iters, colour shift in samples can hardly be observed. |

|

@bibona without any learning rate scheduler ? |

|

@uminaty Yes. I didn't change the configuration file and any code. The training set is FFHQ and validation set is CelebAHQ. But my task is 16-128. I'm not sure whether the colour shift will disappear on 64-256 task as the training goes. |

|

@bibona Ok thank you very much for all your answers ! |

|

@uminaty what schedule did you use for the learning rate? |

|

@vishhvak I started with 1e-4 for the first 50k iterations and then linear decay starting at 1e-5. But honestly, I've tried many things with LR, I think my batch size (max 2) is really not enough. Also i can't have too much parameters (12Go VRAM). Besides, when I do on 32 -> 128 it works very well with a constant LR of 1e-5. I think you really need a bigger network and a bigger batch size to do 64->256 |

|

Hey, thanks for your contributions for this good question and I will keep it for others to add more details |

|

As for our setting on experiment, training epoch can not be too few, especially training from scratch, may be over 100epoch is required to ensure the result is stabel. If you don't want to wait for too long, just try to use pretrained weights. |

|

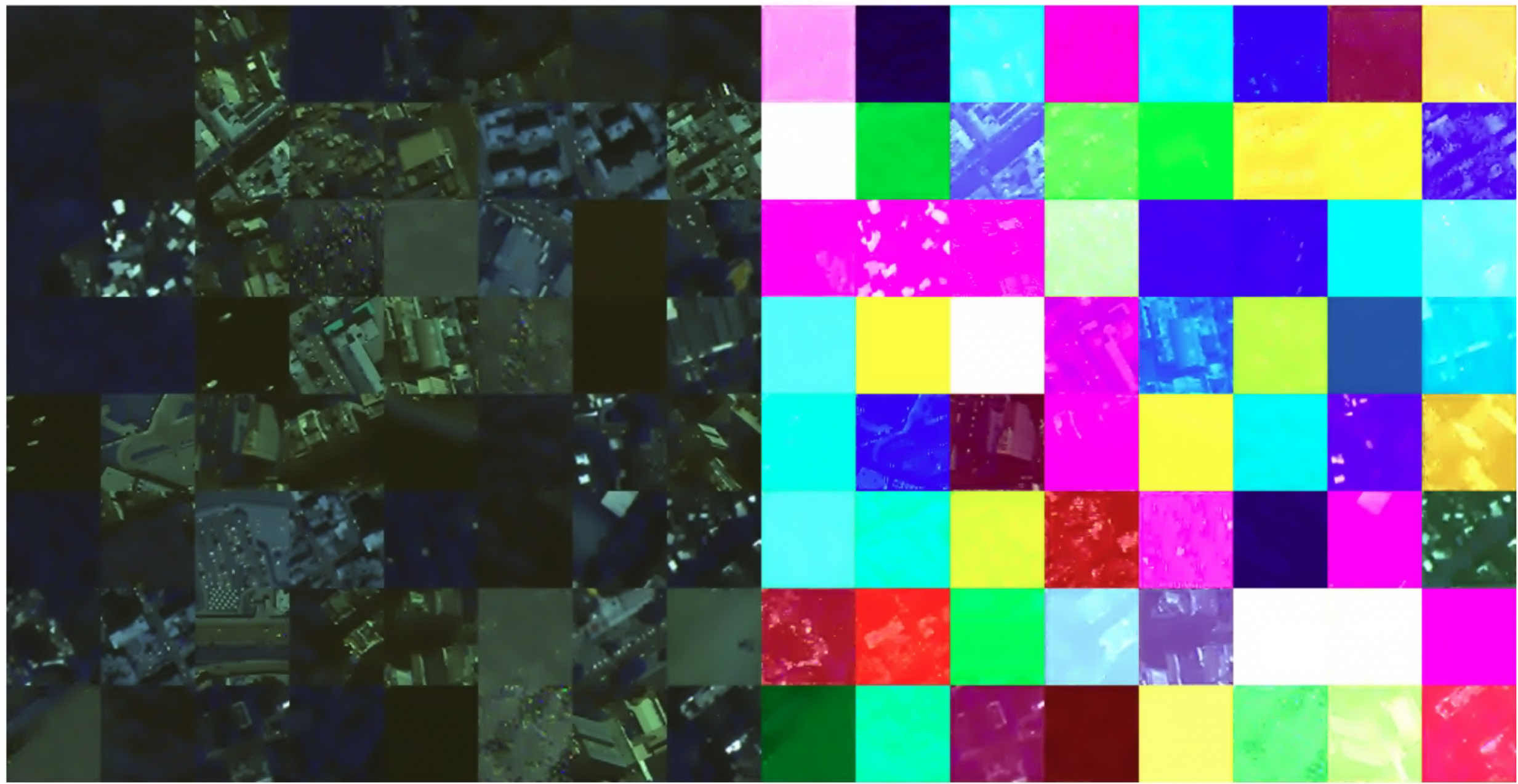

@uminaty @Janspiry As you know, statellie images have several channels (more than 3 rgb channels) than natural images. I try conditional generated task using ddpm. But I still met colour shift problem althought spatial content is good (you can see this below, the left part is ground truth and the right part is generated image). I try to change lr to 1e-5 and train it for 100k iterations but the colour shift didn't disappear, I wonder if a longer training iterations can help. Notably, I disabled clipping the reconstructed x My training setting is: Looking forward to your reply. |

|

Hi @294coder, about clipping, if your data (the x0) are between -1 and 1, it should not be a problem in theory I guess. Otherwise, I noticed that I had much less problem when I had a much larger batch size (for that you may need smaller images or a smaller network...). You can also try gradient accumulation, but it's quite slow... Finally, I haven't really investigated, but the group norms can maybe accentuate this problem? By the way, you can notice that recent papers using diffusion models often indicates much larger batch sizes than what we usually observe for GANs for example. This is probably due to the fact that the network has both to learn a certain distribution and at the same time different noise levels. |

|

@uminaty Hi, |

|

Hi, everyone. If you meet color shift problem and change all the hyperparameters and other settings without any mitigation. You can try to substitue For me, my dataset is satelite images with more channels (4 or 8 channels) which means when generating, the model should output images with the same channel numbers. If you are doing the expriments on satelite images, hyperspectral images, near infrared images and other images with more than rgb channels, it may help. I don't know what with In the end, thanks @uminaty for this advice and @Janspiry for this wonderful codebase. |

|

That's Great ! Thank you @294coder for testing this ! Yes the color shift I've observed was like yours, but in my case a bigger batch size and a lower LR was enough.. It is curious that the problem may indeed come from the GroupNorm, it was specifically designed for small batch sizes. This may be due in our case to different dinamics between the channels, I honnestly don't know.. Anyway, I wish you the best for the future of your experimentation! |

|

@294coder nice work! Look forward to discussing and analyzing with you more! and i will use relu & batchnorm instead of groupnorm to verify your conclusions, thank you for your advise again! |

|

Hello ! If you have enough data, you should be able to train from scratch without any problems. I'm not sure I understand what you've done, but if what you're doing is using pre-trained network weights on another distbution and adding a 1x1 convolution before, I think it's normal to have bad results knowing that satelite images have very different dynamics than "real world" images. Hope it helps ! |

|

@codgodtao For my own observation:

But I noticed satelite images task using dpm on high level task(segmentation), it did a good performance. I think forward and backward process can help model learn more rather than the task itself. Hope it can help you. |

|

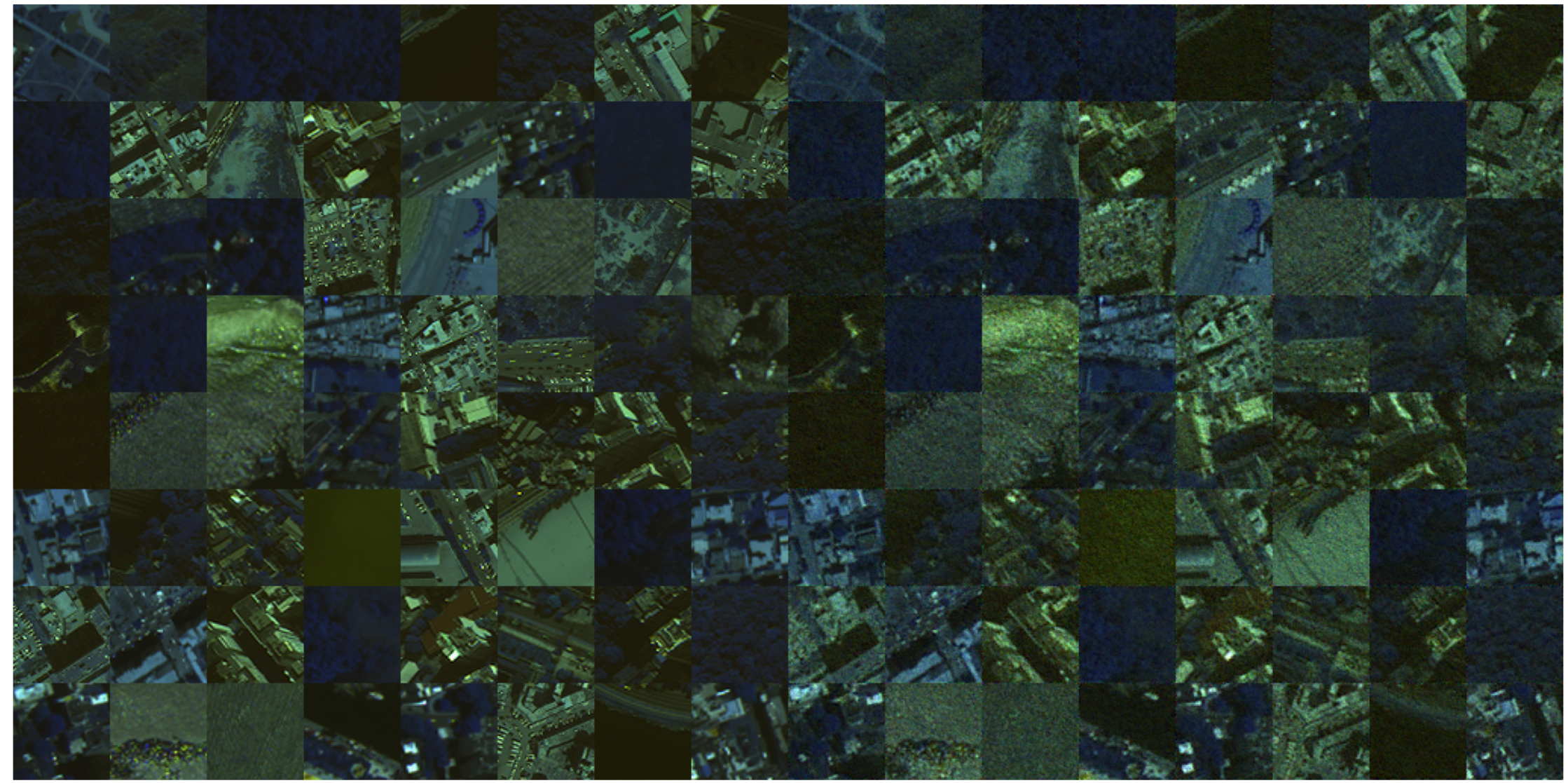

@294coder @uminaty Since my dataset only has more than 6000+ 64*64 training images, it is hard to train a large model, so I have tried on pre-training model, and the result is indeed not good. Some of my observation, for reference.

It is worth noting that my unet is [1,2,4,8] without attention due to the limitation of data size. However, the images still have a lot of noise. Next I will try some more valuable ideas. |

|

@294coder 哥们你也是电子科大的😂?好像我们的方向类似,留个联系方式交流一下怎么说 |

Hi, hello! I have been studying super-resolution recently and have been struggling with a question. When processing or saving images, should I save them in jpg format or png format? Also, in super-resolution, images are often cut into small patches for training. During testing, should the entire test image be input as a whole, or should it be cut into patches of the same size as used in training for input? I hope to get your reply. |

|

This is an automatic reply, confirming that your e-mail was received. Thank you.--------------------------------------------Best,Mei Yang

|

Hello,

First of all, thank you very much for the implementation you have done. it has saved us a lot of time. it's really a great job

I am trying to do super resolution on 6464 to 256256 images. I have kept the same hyperparameters as in the configuration file "sr_sr3_16_128.json" just changing the size of the images.

It seems that the network doesn't learn anymore after 50 000 iterations and produces noisy images or with a colour shift.

The training is very long and I can't afford to test all the possible hyperparameters. According to you, should I rather lower the learning rate? or rather increase the number of layers? I also thought of making 128*128 images but it's not ideal for my case.

Is it useful to just let the training run? knowing that the loss l1 does not evolve anymore?

For your information, the images used are satelite images, rather homogeneous in term of distribution.

PS: in the configuration file "val_freq", "save_checkpoint_freq" are at 1e4, but if I don't put 10 000, the network doesn't save and I don't know why. Maybe 1e4 is a float and it doesn't work for the % operator.

The text was updated successfully, but these errors were encountered: