
What params should I use for the 128 to 1024 task, and for the 512 to 1024 task? How should I choose the Unet architecture? #73

Comments


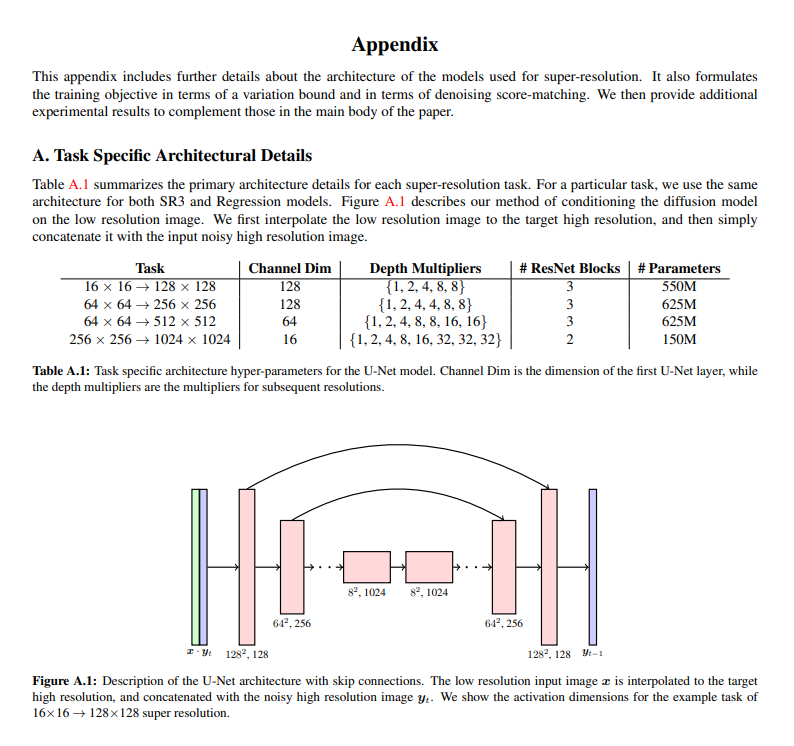

I assume you've looked at the hyperparameters used in the paper (https://arxiv.org/pdf/2104.07636.pdf), so for reference, here is what they used:

Regarding dropout, they mention:

Although your sizes are slightly different, I imagine you could "interpolate/extrapolate" from those settings to choose your hyperparameters. The general trend appears to be that more parameters are needed for very small to medium-sized images, and fewer are required for medium to large images.

If I had to estimate, you could probably use the following:

Of course, as with any ML task, hyperparameter tuning is highly task-specific, so you will undoubtedly need to experiment with the values.
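One structural point when extrapolating to 1024×1024 outputs: in SR3 the low-resolution input is bicubic-upsampled to the target size and concatenated with the noisy image, so for both the 128→1024 and 512→1024 tasks the UNet runs at 1024×1024. The length of the channel-multiplier list then decides how far the network downsamples. Below is a minimal sketch, assuming a DDPM-style UNet where every level except the last halves the spatial resolution (please check this against the repo's UNet code). The helper name `level_resolutions` and the example multiplier list are hypothetical and are not taken from the paper or the repo.

```python
from typing import List


def level_resolutions(image_size: int, channel_mults: List[int]) -> List[int]:
    """Spatial resolution at each UNet level.

    Assumes (hypothetically) a DDPM-style UNet: one level per multiplier entry,
    with a 2x downsample after every level except the last.
    """
    resolutions = []
    res = image_size
    for i, _ in enumerate(channel_mults):
        resolutions.append(res)
        if i != len(channel_mults) - 1:  # no downsample after the last level
            if res % 2 != 0:
                raise ValueError(f"resolution {res} cannot be halved evenly")
            res //= 2
    return resolutions


# Hypothetical 8-level multiplier list for a 1024x1024 model
print(level_resolutions(1024, [1, 2, 4, 8, 16, 32, 32, 32]))
# → [1024, 512, 256, 128, 64, 32, 16, 8]
```

With eight levels, a 1024×1024 input reaches an 8×8 bottleneck; a shorter list leaves the bottleneck at a larger resolution.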


Thank you for the detailed answer! I'm using 4 GPUs with 24 GB of memory each, and I set the batch size to 4 (one image per GPU).
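For reference, the split works out to 4 / 4 = 1 image per GPU. Note that what "batch size" means depends on the parallelism wrapper. `torch.nn.DataParallel` splits one global batch along dim 0 across the devices. With `DistributedDataParallel`, each process usually builds its own `DataLoader`, so the configured batch size is per GPU. The sketch below shows the DataParallel-style arithmetic. `per_gpu_batch` is a hypothetical helper and is not part of the repo. It also requires an even split so every GPU gets the same load.

```python
def per_gpu_batch(global_batch: int, num_gpus: int) -> int:
    """Images each GPU processes per step when one global batch is split
    across GPUs (DataParallel-style). This helper insists on an even split."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    if global_batch % num_gpus != 0:
        raise ValueError(
            f"batch size {global_batch} does not split evenly over {num_gpus} GPUs"
        )
    return global_batch // num_gpus


print(per_gpu_batch(4, 4))  # → 1
```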


Sure, that sounds reasonable 👍 Let me know how it goes. 👌


Feel free to reopen the issue if you have any further questions.

Thanks for the explanation! But I still have a question: which parameter corresponds to "Channel Dim" in this code?

Specifically, I'm asking about the following section in the config file:
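SR3 builds on DDPM-style UNets. In those networks, the "channel dim" from the paper's hyperparameter table is usually the base number of feature channels at the highest resolution. Each level's width is that base multiplied by the matching entry of the multiplier list. So the parameter to look for is whichever config value is passed to the UNet as its base channel count. The exact key isn't shown in this thread, so verify it in the repo's model code. Below is a minimal sketch of that convention. `level_widths` and `conv3x3_params` are hypothetical helpers for illustration only.

```python
from typing import List


def level_widths(channel_dim: int, channel_mults: List[int]) -> List[int]:
    """Feature channels per UNet level, assuming the DDPM-style convention
    width = channel_dim * multiplier (hypothetical helper)."""
    return [channel_dim * m for m in channel_mults]


def conv3x3_params(c_in: int, c_out: int) -> int:
    """Parameter count of a 3x3 Conv2d with bias: weights + one bias per output channel."""
    return c_in * c_out * 3 * 3 + c_out


print(level_widths(64, [1, 2, 4, 8, 8]))  # → [64, 128, 256, 512, 512]
print(conv3x3_params(64, 64))    # → 36928
print(conv3x3_params(128, 128))  # → 147584
```

A 3×3 convolution's weight count scales with input × output channels. Doubling the channel dim therefore roughly quadruples the parameters of each such layer, which makes it the main knob for model size.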
