Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding shape to: 1x3x608x608 #1111

Comments

@vilmara It looks like the model input is called …

Hi @pranavm-nvidia, thanks for your prompt reply. You are right; I tried the input name "input" and got the same result: trtexec generated the engine with a static batch size. Steps: generating the engine with the shape name 'input', then running the model with BS=2. Note: I got batch dimension errors again. The engine was converted to a static 1x3x608x608 shape instead of keeping the dynamic shape, and it reports the wrong throughput.
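Because the `--minShapes`/`--optShapes`/`--maxShapes` flags only apply to a tensor whose name matches the ONNX graph input exactly, it can help to print the input names of the exported model before building. A minimal sketch, assuming `python3` with the `onnx` package is available; `list_onnx_inputs` is a hypothetical helper name, not part of any tool in this thread:

```shell
# Hypothetical helper: print each real graph input of an ONNX model with its dims.
# Assumes python3 and the `onnx` Python package are installed.
list_onnx_inputs() {
  python3 - "$1" <<'EOF'
import sys

import onnx

model = onnx.load(sys.argv[1])
# Some exporters also list weights (initializers) as graph inputs; skip those.
weights = {init.name for init in model.graph.initializer}
for inp in model.graph.input:
    if inp.name in weights:
        continue
    # A symbolic name (dim_param) marks a dynamic axis; otherwise print the size.
    dims = [d.dim_param or d.dim_value for d in inp.type.tensor_type.shape.dim]
    print(inp.name, dims)
EOF
}
```

Running `list_onnx_inputs yolov4_-1_3_608_608_dynamic.onnx` should print one line per input; a symbolic name in the first position indicates the dynamic batch axis.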

@vilmara Can you try using …

@pranavm-nvidia, please see the results below with shape = 1 and shape = 2.

With max batch 1: Throughput: 154.158 qps

With BS=2 (--shapes='input':2x3x608x608): Throughput: 0 qps
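For an explicit-batch engine with a dynamic batch dimension, the batch size used at inference time is chosen by passing the full input shape through `--shapes`, as in the BS=2 run above, rather than through an implicit batch size. A minimal sketch, assuming the input name `input`; `run_engine_at_batch` is a hypothetical wrapper, and the `TRTEXEC` variable only exists so the command can be printed (`TRTEXEC=echo`) instead of run:

```shell
# Hypothetical helper: time a saved engine at a given batch size with trtexec.
# Assumes the engine's input tensor is named "input" (override with the 3rd arg).
run_engine_at_batch() {
  local engine="$1"
  local batch="$2"
  local input_name="${3:-input}"
  # TRTEXEC can be overridden, e.g. TRTEXEC=echo to print the command instead.
  "${TRTEXEC:-/usr/src/tensorrt/bin/trtexec}" \
    --loadEngine="$engine" \
    --shapes="${input_name}:${batch}x3x608x608"
}
```

For example, `run_engine_at_batch yolov4_-1_3_608_608_dynamic.onnx_int8.engine 2`. The batch size has to lie inside the optimization profile the engine was built with (1 to 8 in this issue).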

Looks right: with batch size 1, the latency is 6.45 ms, and with batch size 2 it's 10.35 ms. Those numbers seem reasonable to me.
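The arithmetic behind "those numbers seem reasonable": if the reported latency is the time to process one batch, images per second is roughly the batch size times 1000 divided by the latency in milliseconds. For batch size 1 that gives about 155 images/s, close to the 154.158 qps trtexec printed, and for batch size 2 about 193 images/s, so a throughput of 0 qps cannot be correct. `images_per_sec` is a hypothetical helper:

```shell
# Hypothetical helper: images/s = batch_size * 1000 / latency_ms
images_per_sec() {
  awk -v b="$1" -v ms="$2" 'BEGIN { printf "%.1f\n", b * 1000 / ms }'
}

images_per_sec 1 6.45    # batch 1 -> 155.0
images_per_sec 2 10.35   # batch 2 -> 193.2
```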

@pranavm-nvidia, and the throughput? With shape > 1 it shows 0 qps (I guess it means FPS).

@vilmara Yeah, I hadn't noticed that before. That looks like a bug in …

@pranavm-nvidia, it seems the model generated with trtexec has issues when deployed on DS-Triton. Is there another sample/tool that shows how to optimize a YOLO PyTorch-ONNX model to a TensorRT engine in INT8 mode with full INT8 calibration and dynamic input shapes? I have reported the DS-Triton issue here: triton-inference-server/server#2606

Hi @pranavm-nvidia, I will take a look at it later on. In regards to the … See below:

- Run the inference with trtexec and the default batch size
- Print the engine's input and output shapes
- Deploy the engine with DS
- Run inference on DS with max_batch_size=1
- Run inference on DS with max_batch_size=4
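The printout of the engine's input and output shapes did not survive in this thread. One way to produce it is sketched below, assuming `python3` with the TensorRT 7.x Python bindings of the same version that built the engine (engines are tied to the TensorRT version and GPU they were built with). `get_binding_name`, `get_binding_shape`, `binding_is_input` and `get_profile_shape` are TensorRT 7.x engine methods; `print_engine_bindings` is a hypothetical helper name:

```shell
# Hypothetical helper: list an engine's bindings and, for inputs, the
# min/opt/max shapes of optimization profile 0.
# Assumes python3 with the TensorRT 7.x Python bindings.
print_engine_bindings() {
  python3 - "$1" <<'EOF'
import sys

import tensorrt as trt

logger = trt.Logger(trt.Logger.WARNING)
# Register TensorRT's built-in plugins in case the engine uses any of them.
trt.init_libnvinfer_plugins(logger, "")
runtime = trt.Runtime(logger)
with open(sys.argv[1], "rb") as f:
    engine = runtime.deserialize_cuda_engine(f.read())
if engine is None:
    sys.exit("failed to deserialize " + sys.argv[1])

for i in range(engine.num_bindings):
    name = engine.get_binding_name(i)
    kind = "input" if engine.binding_is_input(i) else "output"
    # A dynamic batch dimension is reported as -1.
    print(kind, name, engine.get_binding_shape(i))
    if engine.binding_is_input(i):
        print("  profile 0 (min, opt, max):", engine.get_profile_shape(0, name))
EOF
}
```

For a correctly built dynamic engine, the input should show `-1` in the batch position, and the profile should report batch sizes 1, 2 and 8 as min, opt and max.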

@vilmara It looks like you've built the TRT engine correctly. Regarding the DeepStream issue, you'd probably want to ask here: https://forums.developer.nvidia.com/c/accelerated-computing/intelligent-video-analytics/deepstream-sdk/15

Hi @pranavm-nvidia, thanks for helping me build the TRT engine correctly. I have submitted the new issue on the forum.

Closing since, according to the last comment, no issue remains in this thread. Thanks!

Description

I am trying to convert the pre-trained PyTorch YOLOV4 (darknet) model to TensorRT INT8 with dynamic batching, in order to deploy it later on DS-Triton. I am following the general steps in NVIDIA-AI-IOT/yolov4_deepstream, but I am running into issues, first with dynamic dimensions at the ONNX-to-TRT conversion step and then when loading the model on DS-Triton:

Environment

TensorRT Version: 7.2.1

NVIDIA GPU: T4

NVIDIA Driver Version: 450.51.06

CUDA Version: 11.1

CUDNN Version: 8.0.4

Operating System: Ubuntu 18.04

Python Version (if applicable): 1.8

Tensorflow Version (if applicable):

PyTorch Version (if applicable): container image nvcr.io/nvidia/pytorch:20.11-py3

Baremetal or Container (if so, version): container image deepstream:5.1-21.02-triton

Relevant Files

YOLOV4 pre-trained model weights and cfg downloaded from

https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg

https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

Steps To Reproduce

Complete Pipeline: PyTorch YOLOV4 (darknet) --> ONNX --> TensorRT --> DeepStream-Triton

Step 1: download cfg file and weights from the above link

Step 2: git clone repository pytorch-YOLOv4

$ sudo git clone https://github.com/Tianxiaomo/pytorch-YOLOv4.git

Step 3: Convert model YOLOV4 PyTorch --> ONNX | Dynamic batch size

Result:

Step 4: Convert model ONNX --> TensorRT | Dynamic Batch size

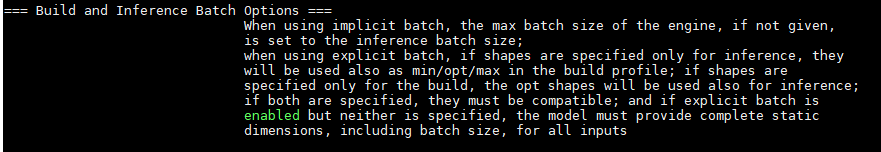

$ sudo docker run --gpus all -it --rm --shm-size=1g --ulimit memlock=-1 --ulimit stack=67108864 -v /tmp/.X11-unix:/tmp/.X11-unix -e DISPLAY=$DISPLAY -v /pytorch-YOLOv4/:/workspace/pytorch-YOLOv4/ deepstream:5.1-21.02-triton

$ /usr/src/tensorrt/bin/trtexec --onnx=yolov4_-1_3_608_608_dynamic.onnx --explicitBatch --minShapes=\'data\':1x3x608x608 --optShapes=\'data\':2x3x608x608 --maxShapes=\'data\':8x3x608x608 --workspace=4096 --buildOnly --saveEngine=yolov4_-1_3_608_608_dynamic.onnx_int8.engine --int8

Note: trtexec automatically overrides the engine shape to 1x3x608x608 instead of keeping dynamic batching.
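The shape flags in the command above refer to a tensor called `data`, while the comments in this thread establish that the exported ONNX input is called `input`. With no shapes found for `input`, trtexec falls back to a static 1x3x608x608 shape, which matches the warning in the issue title. A minimal sketch of the same build with the name corrected, assuming the file names from the issue; `build_dynamic_engine` is a hypothetical wrapper, and `TRTEXEC=echo` prints the command instead of running it:

```shell
# Hypothetical helper: build a dynamic-batch INT8 engine with trtexec.
# The third argument is the ONNX input tensor name ("input" in this thread).
build_dynamic_engine() {
  local onnx="$1"
  local engine="$2"
  local input_name="${3:-input}"
  # Note: --int8 without calibration data gives an engine suitable for timing only.
  # TRTEXEC can be overridden, e.g. TRTEXEC=echo to print the command instead.
  "${TRTEXEC:-/usr/src/tensorrt/bin/trtexec}" \
    --onnx="$onnx" \
    --explicitBatch \
    --minShapes="${input_name}:1x3x608x608" \
    --optShapes="${input_name}:2x3x608x608" \
    --maxShapes="${input_name}:8x3x608x608" \
    --workspace=4096 \
    --int8 \
    --buildOnly \
    --saveEngine="$engine"
}
```

For example, `build_dynamic_engine yolov4_-1_3_608_608_dynamic.onnx yolov4_-1_3_608_608_dynamic.onnx_int8.engine`.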

$ /usr/src/tensorrt/bin/trtexec --loadEngine=yolov4_-1_3_608_608_dynamic.onnx_int8.engine --int8

Result BS=1:

Result BS=2:

Error:

[03/09/2021-22:48:45] [E] [TRT] Parameter check failed at: engine.cpp::enqueue::445, condition: batchSize > 0 && batchSize <= mEngine.getMaxBatchSize(). Note: Batch size was: 2, but engine max batch size was: 1

Step 5: Configure the DS-Triton files as described in the sample NVIDIA-AI-IOT/yolov4_deepstream

Step 6: Run YOLOV4 INT8 mode with Dynamic shapes with DS-Triton

$ deepstream-app -c deepstream_app_config_yoloV4.txt

Error: "unable to autofill for 'yolov4_nvidia', either all model tensor configuration should specify their dims or none"
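The error means Triton could not autofill the model configuration because the `config.pbtxt` it found gives dimensions for some tensors but not for others. One way around it is to list every input and output explicitly. A minimal sketch that writes such a file, with several assumptions to verify against the engine's actual bindings: the model name `yolov4_nvidia` (taken from the error), the input `input`, and outputs `boxes` and `confs` with the shapes I believe the pytorch-YOLOv4 exporter produces at 608x608 (22743 candidate boxes, 80 COCO classes). `max_batch_size: 8` mirrors `--maxShapes`, and `dims` omit the batch axis because Triton adds it when `max_batch_size` is greater than 0. `write_triton_config` is a hypothetical helper:

```shell
# Hypothetical helper: write a Triton config.pbtxt that lists dims for every
# tensor. Output names and shapes are assumptions; check them against the engine.
write_triton_config() {
  local model_dir="$1"   # e.g. <model_repository>/yolov4_nvidia (must match "name")
  mkdir -p "$model_dir/1"   # Triton looks for the engine at $model_dir/1/model.plan
  cat > "$model_dir/config.pbtxt" <<'EOF'
name: "yolov4_nvidia"
platform: "tensorrt_plan"
max_batch_size: 8
input [
  {
    name: "input"
    data_type: TYPE_FP32
    dims: [ 3, 608, 608 ]
  }
]
output [
  {
    name: "boxes"
    data_type: TYPE_FP32
    dims: [ 22743, 1, 4 ]
  },
  {
    name: "confs"
    data_type: TYPE_FP32
    dims: [ 22743, 80 ]
  }
]
EOF
}
```

The engine would then be copied to `yolov4_nvidia/1/model.plan`, Triton's default file name for TensorRT models.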

I think the problem is with trtexec. Is there a sample/tool that shows how to optimize a YOLO PyTorch-ONNX model to a TensorRT engine in INT8 mode with full INT8 calibration and dynamic input shapes?
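On the calibration part of the question: as far as I know, trtexec does not run INT8 calibration from images itself. With `--int8` alone it uses placeholder dynamic ranges, which is fine for timing but not for meaningful detections; it can, however, read an existing calibration cache through `--calib`. The sketch below assumes such a cache was produced beforehand for this same ONNX model (for example by a TensorRT `IInt8EntropyCalibrator2` run over representative 608x608 images, not shown); `build_calibrated_engine` and the cache path are hypothetical:

```shell
# Hypothetical helper: build an INT8 engine from a precomputed calibration cache.
build_calibrated_engine() {
  local onnx="$1"
  local engine="$2"
  local calib_cache="$3"          # produced beforehand by an INT8 calibrator
  local input_name="${4:-input}"
  if [ ! -f "$calib_cache" ]; then
    echo "calibration cache not found: $calib_cache" >&2
    return 1
  fi
  # TRTEXEC can be overridden, e.g. TRTEXEC=echo to print the command instead.
  "${TRTEXEC:-/usr/src/tensorrt/bin/trtexec}" \
    --onnx="$onnx" \
    --explicitBatch \
    --minShapes="${input_name}:1x3x608x608" \
    --optShapes="${input_name}:2x3x608x608" \
    --maxShapes="${input_name}:8x3x608x608" \
    --workspace=4096 \
    --int8 \
    --calib="$calib_cache" \
    --buildOnly \
    --saveEngine="$engine"
}
```

Failing early when the cache is missing avoids silently producing an uncalibrated engine.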