
polygraphy.exception.exception.PolygraphyException: Could not parse ONNX correctly #1677

Comments


@hmh10098 This doesn't look like a valid ONNX model. How was it exported? For this to be a valid broadcast, the slopes tensor should have had a shape of And to be sure, ONNX-Runtime complains with a similar error (run with
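The broadcasting argument can be checked without TensorRT. The ONNX spec says PRelu's `slope` must be unidirectionally broadcastable to the input `X`, meaning it has to broadcast to exactly `X`'s shape under NumPy-style rules. The sketch below only illustrates those shape rules and is not ONNX's or TensorRT's code. The helper names and the `(1, 64, 56, 56)` input shape are hypothetical and were not read from SFace.onnx. TensorRT's "broadcast dimensions must be conformable" message appears to describe the same kind of mismatch.

```python
from itertools import zip_longest
from typing import Optional, Sequence, Tuple


def broadcast_shape(a: Sequence[int], b: Sequence[int]) -> Optional[Tuple[int, ...]]:
    """NumPy-style (ONNX multidirectional) broadcast of two shapes.

    Shapes are aligned from the right; missing leading dimensions count as 1.
    Returns the broadcast shape, or None if some dimension pair is incompatible.
    """
    result = []
    for da, db in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if da == db or db == 1:
            result.append(da)
        elif da == 1:
            result.append(db)
        else:
            return None  # e.g. 56 vs 64: neither equal nor 1
    return tuple(reversed(result))


def slope_is_valid(x_shape: Sequence[int], slope_shape: Sequence[int]) -> bool:
    """Unidirectional check (as used for PRelu's slope): slope must broadcast to exactly x_shape."""
    return broadcast_shape(x_shape, slope_shape) == tuple(x_shape)


x_shape = (1, 64, 56, 56)  # hypothetical NCHW activation feeding a PRelu node
for slope_shape in [(64,), (64, 1, 1), (1, 64, 1, 1)]:
    print(slope_shape, slope_is_valid(x_shape, slope_shape))
```

A 1-D slope of shape `(C,)` is lined up against the last (`W`) axis, so it only passes this check when `C == W` or `C == 1`. In the `C == W` case it would be accepted but would scale along the width instead of per channel, which is why a per-channel slope needs the shape `(1, C, 1, 1)` (or `(C, 1, 1)`).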

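You can fix this with a simple ONNX-GraphSurgeon script like:

```python
import onnx
import onnx_graphsurgeon as gs
import numpy as np

graph = gs.import_onnx(onnx.load("SFace.onnx"))

for node in graph.nodes:
    if node.op == "PRelu":
        # Make the slope tensor broadcastable: a per-channel slope of shape (C,)
        # becomes (1, C, 1, 1), which broadcasts against an NCHW input.
        slope_tensor = node.inputs[1]
        # Only touch constant 1-D slopes so the script is safe to re-run.
        if isinstance(slope_tensor, gs.Constant) and slope_tensor.values.ndim == 1:
            slope_tensor.values = np.expand_dims(slope_tensor.values, axis=(0, 2, 3))

onnx.save(gs.export_onnx(graph), "SFace_fixed.onnx")
```

After which you can try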
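To see why the `np.expand_dims(..., axis=(0, 2, 3))` step fixes the graph, here is a minimal NumPy reference for PRelu (`y = x` where `x >= 0`, else `slope * x`). It only illustrates the operator's math and is not TensorRT's or ONNX-Runtime's kernel. The `prelu` helper, the 64-channel 56x56 input, and the slope values are all hypothetical.

```python
import numpy as np


def prelu(x: np.ndarray, slope: np.ndarray) -> np.ndarray:
    """Reference PRelu: x where x >= 0, slope * x elsewhere (slope broadcasts to x)."""
    return np.where(x >= 0, x, slope * x)


rng = np.random.default_rng(0)
n, c, h, w = 1, 64, 56, 56  # hypothetical NCHW activation shape
x = rng.standard_normal((n, c, h, w)).astype(np.float32)
slope_1d = np.linspace(0.1, 0.5, c, dtype=np.float32)  # per-channel slopes, shape (C,)

# The same reshape the GraphSurgeon script applies: (C,) -> (1, C, 1, 1).
slope_4d = np.expand_dims(slope_1d, axis=(0, 2, 3))
y = prelu(x, slope_4d)
print(slope_4d.shape, y.shape)  # (1, 64, 1, 1) (1, 64, 56, 56)

# The original 1-D slope is aligned against the last (W) axis: 64 vs 56 fails.
try:
    prelu(x, slope_1d)
except ValueError as err:
    print("broadcast error:", err)
```

With the 4-D slope, every negative value in channel `k` is scaled by `slope_1d[k]`, which is the per-channel behaviour PRelu is meant to have.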

Thanks for your help!

Description

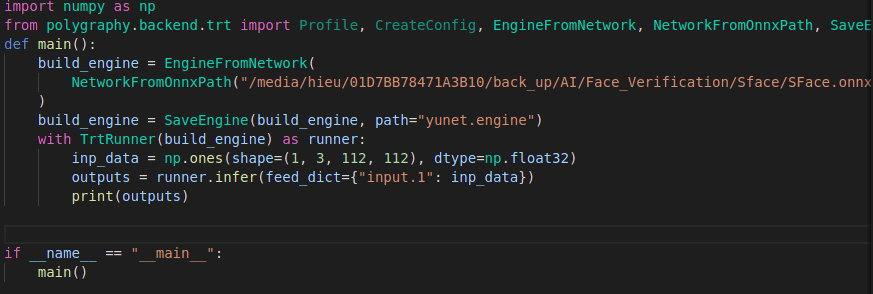

My code:

https://drive.google.com/file/d/1g_EsRDPQi_jTuY67BLAkUD9q6dA3j_w3/view?usp=sharing

```
[12/21/2021-13:42:26] [TRT] [E] [graphShapeAnalyzer.cpp::analyzeShapes::1285] Error Code 4: Miscellaneous (IPointWiseLayer conv_1_relu: broadcast dimensions must be conformable)
[12/21/2021-13:42:26] [TRT] [E] ModelImporter.cpp:773: While parsing node number 4 [PRelu -> "conv_1_relu"]:
[12/21/2021-13:42:26] [TRT] [E] ModelImporter.cpp:774: --- Begin node ---
[12/21/2021-13:42:26] [TRT] [E] ModelImporter.cpp:775: input: "conv_1_batchnorm"
input: "conv_1_relu_gamma"
output: "conv_1_relu"
name: "conv_1_relu"
op_type: "PRelu"
[12/21/2021-13:42:26] [TRT] [E] ModelImporter.cpp:776: --- End node ---
[12/21/2021-13:42:26] [TRT] [E] ModelImporter.cpp:779: ERROR: ModelImporter.cpp:179 In function parseGraph:
[6] Invalid Node - conv_1_relu
[graphShapeAnalyzer.cpp::analyzeShapes::1285] Error Code 4: Miscellaneous (IPointWiseLayer conv_1_relu: broadcast dimensions must be conformable)
[E] In node 4 (parseGraph): INVALID_NODE: Invalid Node - conv_1_relu
[graphShapeAnalyzer.cpp::analyzeShapes::1285] Error Code 4: Miscellaneous (IPointWiseLayer conv_1_relu: broadcast dimensions must be conformable)
[!] Could not parse ONNX correctly
```

```
Traceback (most recent call last):
  File "/media/hieu/01D7BB78471A3B10/back_up/AI/onnx2tensorRT/test_polygraphy.py", line 15, in <module>
    main()
  File "/media/hieu/01D7BB78471A3B10/back_up/AI/onnx2tensorRT/test_polygraphy.py", line 8, in main
    with TrtRunner(build_engine) as runner:
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/runner.py", line 59, in __enter__
    self.activate()
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/runner.py", line 94, in activate
    self.activate_impl()
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/runner.py", line 71, in activate_impl
    engine_or_context, owning = util.invoke_if_callable(self._engine_or_context)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/util/util.py", line 579, in invoke_if_callable
    ret = func(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/loader.py", line 41, in __call__
    return self.call_impl(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/loader.py", line 689, in call_impl
    engine, owns_engine = util.invoke_if_callable(self._engine)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/util/util.py", line 579, in invoke_if_callable
    ret = func(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/loader.py", line 41, in __call__
    return self.call_impl(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/loader.py", line 591, in call_impl
    return engine_from_bytes(super().call_impl)
  File "<string>", line 3, in func_impl
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/loader.py", line 41, in __call__
    return self.call_impl(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/loader.py", line 615, in call_impl
    buffer, owns_buffer = util.invoke_if_callable(self._serialized_engine)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/util/util.py", line 579, in invoke_if_callable
    ret = func(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/loader.py", line 503, in call_impl
    ret, owns_network = util.invoke_if_callable(self._network)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/util/util.py", line 579, in invoke_if_callable
    ret = func(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/base/loader.py", line 41, in __call__
    return self.call_impl(*args, **kwargs)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/loader.py", line 189, in call_impl
    trt_util.check_onnx_parser_errors(parser, success)
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/backend/trt/util.py", line 52, in check_onnx_parser_errors
    G_LOGGER.critical("Could not parse ONNX correctly")
  File "/media/hieu/01D7BB78471A3B10/back_up/anaconda3/envs/IF-gpu/lib/python3.8/site-packages/polygraphy/logger/logger.py", line 349, in critical
    raise PolygraphyException(message) from None
polygraphy.exception.exception.PolygraphyException: Could not parse ONNX correctly
```

Environment

TensorRT Version: nvidia-tensorrt==8.2.1.8

NVIDIA GPU:

```
Tue Dec 21 13:45:41 2021
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.57.02    Driver Version: 470.57.02    CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:08:00.0 Off |                  N/A |
| N/A   47C    P8    N/A /  N/A |      7MiB /  2004MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1135      G   /usr/lib/xorg/Xorg                  2MiB |
|    0   N/A  N/A      2279      G   /usr/lib/xorg/Xorg                  2MiB |
+-----------------------------------------------------------------------------+
```

NVIDIA Driver Version: 470.57.02

CUDA Version: 11.4

```
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2021 NVIDIA Corporation
Built on Wed_Jul_14_19:41:19_PDT_2021
Cuda compilation tools, release 11.4, V11.4.100
Build cuda_11.4.r11.4/compiler.30188945_0
```

CUDNN Version: 8.2.4

Operating System: Ubuntu 20.04

Python Version (if applicable): 3.8

PyTorch Version (if applicable): torch==1.10.1

Baremetal or Container (if so, version):

Relevant Files

Steps To Reproduce
