
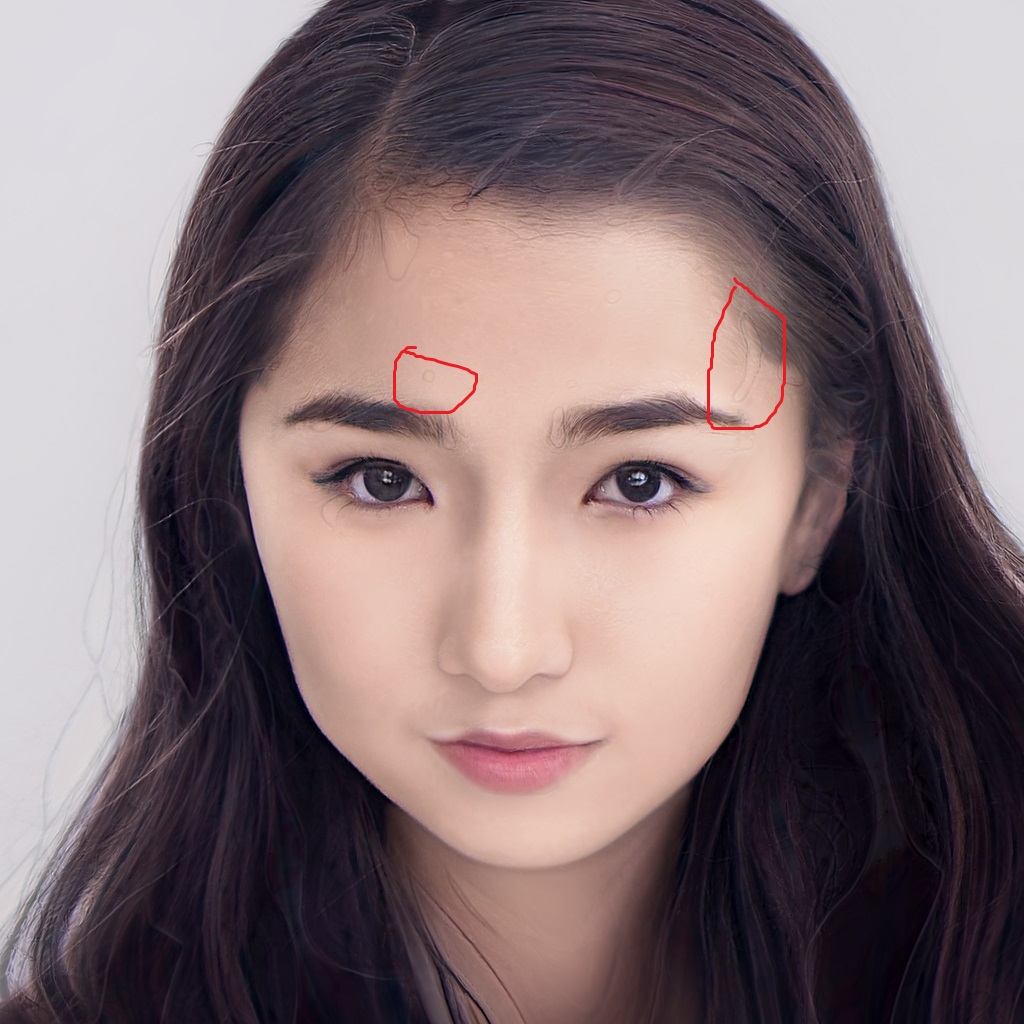

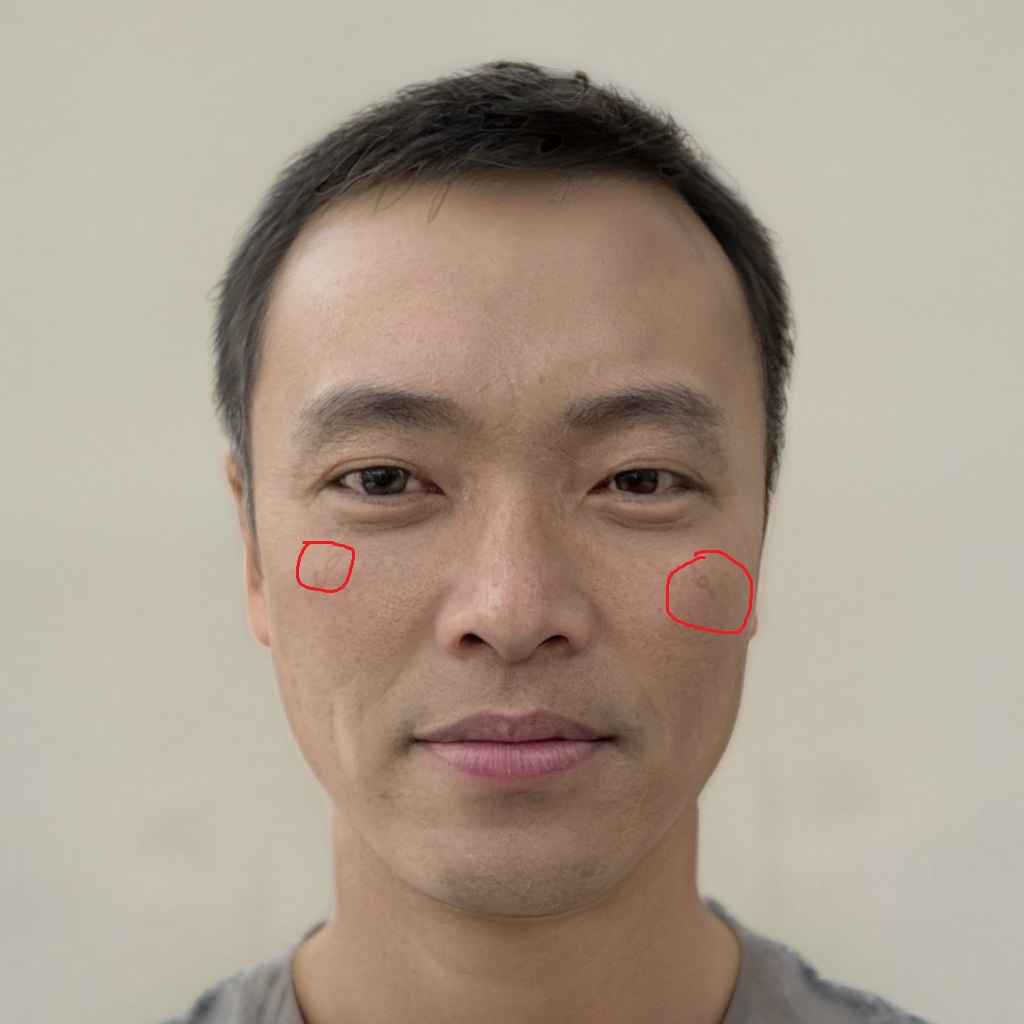

Projecting a real photo produces strange circles in the image #35


Code is here:

    """Project given image to the latent space of pretrained network pickle."""
    import copy
    import click
    import dnnlib

    def project(
    #----------------------------------------------------------------------------
    @click.command()
    #----------------------------------------------------------------------------
    if __name__ == "__main__":
    #----------------------------------------------------------------------------

When I project a real photo, strange circles appear in the resulting image, as in the attached images.

What causes this, and how can I avoid it?

Thanks
