New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Timing issue (despite PREEMPT RT patch) #330

Comments

|

Someone a suggestion? I think one reason could be, that my kernel is not configured right. So I would try to get an older rt-kernel that is closer to my non-rt kernel version, to avoid major changes in the kernel config. But one issue I still have is the modulo behavior of |

|

Sorry for not answering earlier. OS timing issues are not exactly on topic for SOEM.

This is an issue indeed. The sample code assumes a 64bit DCtime. Some slaves only provide 32 bits. It is up to you to extend this to 64 bits. Only then you can take the direct difference as in the example. Extending or not. Some code change is necessary. Next to that you do have some configuration issues. Linux kernel with preempt-rt patch should have a max latency jitter around 50us on modern hardware. My advice is to stick to a vanilla kernel and patch that with the latest preempt-rt patch set. Configure a minimal system with debugging off. CPU isolation for non critical processes might help too. Keep kernel processes at a higher priority than your SOEM application (SOEM needs the kernel to be responsive). Pirority stack: |

|

Thank you for your answer! I will try to reconfigure the kernel. Regarding the DC: Can you tell me which register the DC uses, so I can check if this is 32 or 64 bit long? |

|

May I ask you some question regarding how have you got the histogram data from ethernet port? |

|

I use Ubuntu Server 18.04 with low-latency kernel, important, no monitor or USB device (keyboard, mouse) connected, NIC Intel i210at or i211at, isolcpu kernel-Parms to isolate a physical core. If hyperthreading is active, you must isolate two logical CPUs (0 & 2) or (1 & 3) as a combination within two physical CPUs. Interrupt of NIC rx & tx should be assigned to the isolated CPU. RT thread runs on isolated CPU. All non-RT threads and interrupts should be run on the non-isolated CPU. My machine runs with 250us cycle time and latency max. <5 us. I first use a while loop to catch the exact next clock, to send and receive ecat pdo, then ~ 70% of the cycle time with clock_nanosleep. I219 is very bad because of the very high latency on the receiving side. No chance to get performance. Note the following: Do not use SDOwrite or SDOread in the same RT thread. Both send a lot of Ethercat frames and wait for the answer. Your RT thread is blocked. However, running SDOwrite or SDOread on a separate, non-RT capable thread also gives you high latencies. If non-RT threads have access to the NIC and set a mutex, the RT thread is blocked. The trick is a small patch in the SOEM that introduces a delay into the SDO commands. The RT thread therefore reads all incoming frames (sdo & pdo) from ec_receive_processdata. Linux attempts to increase the priority of a mutex non-RT thread when an RT thread requests that mutex. However, this process is extremely indeterminate over time. I had tried it and there were these problems. rt preempt is unnecessary. Everything important in the patch to the Intel processors is contained in the low latency kernel. |

|

Can we close this issue? |

|

Inactive |

Hello!

I am facing a similar problem like robotixdeveloper in issue #296 . Running in cyclic sync position mode I am loosing packages and the servo is making cracking noises.

My setting is alo very similar: It is a Lenovo T460s with an core i5 with an Intel Ethernet Connection I219-V. Following the instruction from #296 and others I did the following:

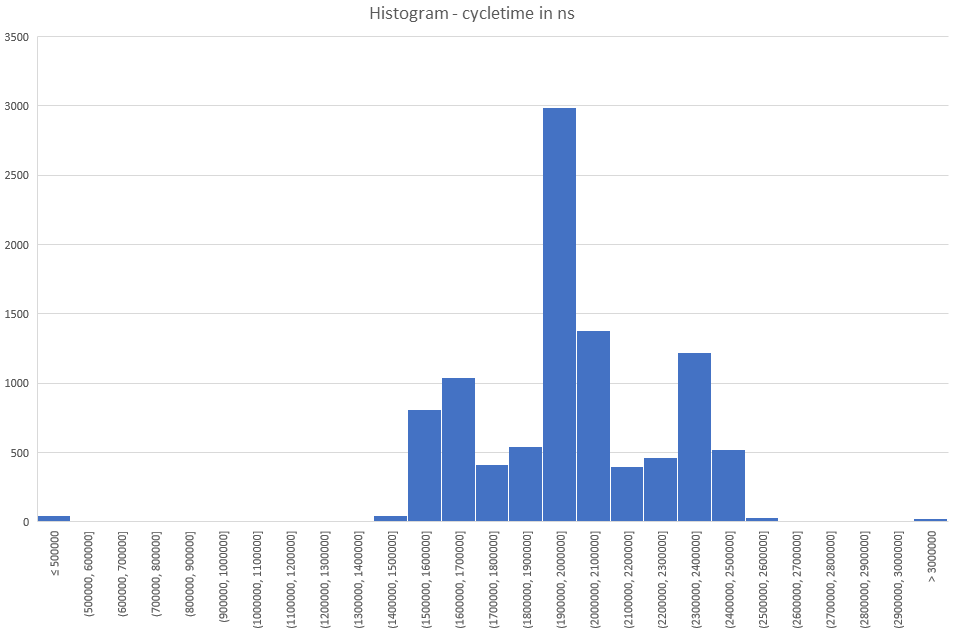

set_realtime_priority()(see below) in order to set the rt thread ecatthread from the red_test example to priority 99ethtool -C eth0 rx-usecs 0 rx-frames 1 tx-usecs 0 tx-frames 1)The test program runs for 20 seconds with a cycle time of 2 miliseconds. I made some plots of the cycle time with its histogram

of

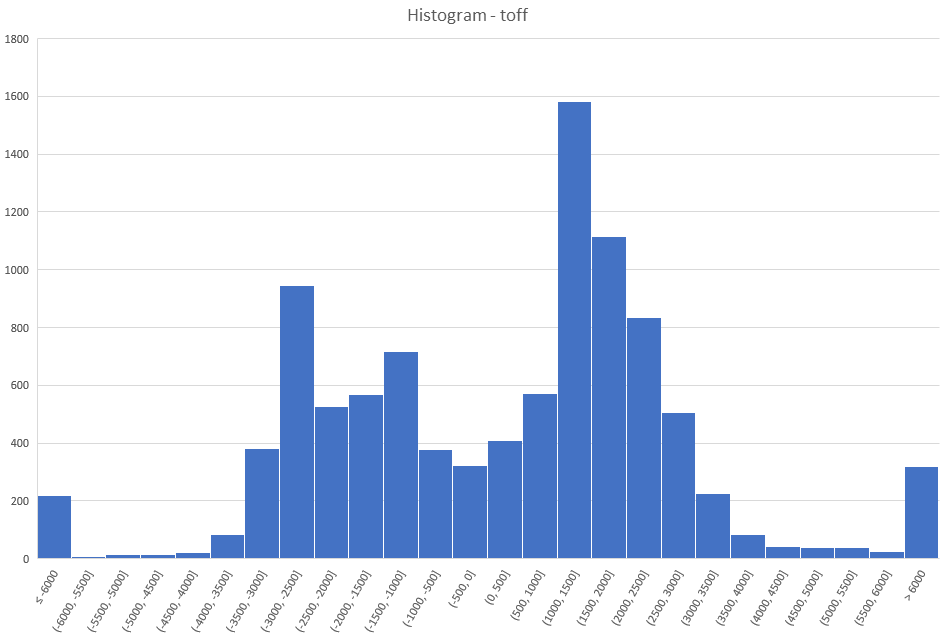

toffwith its histogramand of

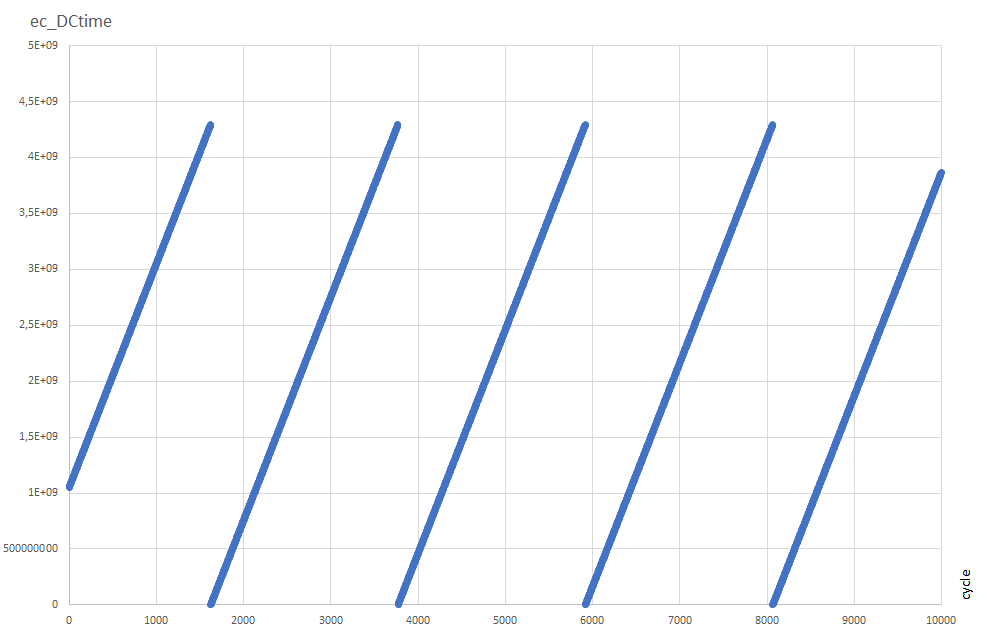

ec_DCtime.You can also find the wireshark capture of this test here:

test3.pcapng.txt

Any suggestions what might be wrong with my code or the setup? Is it correct, that

ec_DCtimehas a modulo behavior? Limited range?Thank you for your help!

The text was updated successfully, but these errors were encountered: