New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Error while running domain adaption (fine tuning) with distributed mode #269

Comments

|

Hi,

You have new vocabularies so you should update them in your configuration. |

|

I have four vocabulary files with me presently - The Thanks ! Mohammed Ayub |

|

Hi @guillaumekln , I passed the new vocabulary files (

2018-11-20 16:52:17.028197: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1103] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:7 with 10756 MB memory) -> physical GPU (device: 7, name: Tesla K80, pci bus id: 0000:00:1e.0, compute capability: 3.7) InvalidArgumentError (see above for traceback): Restoring from checkpoint failed. This is most likely due to a mismatch between the current graph and the graph from the checkpoint. Please ensure that you have not altered the graph expected based on the checkpoint. Original error: tensor_name = optim/learning_rate; expected dtype float does not equal original dtype double Not sure why its giving this error. Mohammed Ayub |

The PR referenced above will add a generation of the merged vocabulary to make this easier. For the error, does it work if instead of using |

Great ! Thank you for adding that @guillaumekln

No, it did not work for some reason. I did point my Also, its if you look at the log file it says "you can change only the non-structural values like dropout etc." I'm assuming that doesn't have to do anything with Mohammed Ayub |

|

It's most definitely a bug in our code. Do you have the full logs of the |

|

It did not give much logging info when running the command: below is what I got on std output, let me know if this works:

|

|

@guillaumekln Just checking if there was any update on this ? Thanks. Mohammed Ayub |

|

I tried to reproduce this error but did not succeed. Will keep looking unless you are able to send me the checkpoint and the vocabularies. Is there something I should know about your setup/installation? Looks like scalar tensors are silently promoted to float64. |

|

Sure. Here is the Dropbox link containing the files: https://www.dropbox.com/s/1cmynzc5kvbr89t/Issue269.zip?dl=0

It is a basic out of box setup. no custom code and changes are done. Mohammed Ayub |

|

Thanks, that's helpful. In both checkpoints, scalar variables are Did you run the initial training in a different setup (e.g. a different server)? |

|

I had to stop and change the instance type of AWS instance, but I'm using the same p2.8xlarge instance for training and fine tuning. Apart from that no changes on the server side. I had to update the OpenNMT-tf somewhere in between. Could that be because I ran training using different version of To double check let me retry this with another model on a different machine today. Mohammed Ayub |

|

@guillaumekln Here is the full log file: https://www.dropbox.com/s/o6a4jamua4el7um/da_error.txt?dl=0 |

|

We have been using the vocabulary update feature a lot around here so there might be something special here. Couple questions:

|

|

Below are my findings:

Distributed Mode: - Apparently No. Gives me the same error Key optim/cond/beta1_power not found in checkpoint

Yes, I'm using distributed training (because training is twice as fast and cost effective). Fine tuning seems to be working fine in non distributed mode. In short, looks like loading model checkpoints works in replicated mode (for retraining and fine tuning ) but not in distributed mode. Mohammed Ayub |

|

Thanks, that's very interesting. I will check what is happening in distributed mode (even though we let TensorFlow do everything). |

|

Also, not sure if this is a cascading issue --> When I run the fine tuning (in replicated mode) on the domain data my BLEU scores are continuously dropping and my eval predictions are getting worse. |

You highlighted another issue here, thanks! Models trained with gradient accumulation had some different variable names than models trained without. Fixed in ff38e89. |

|

Great ! Thanks for the fix. @guillaumekln |

|

Good news is loading checkpoint works fine in distributed mode now. |

|

Hi @guillaumekln, I did some more experiments on fine tuning with other base models, looks like the BLEU scores were decreasing because I had over-fit my base model. Running fine-tuning on partially learnt models seems to give better fine tuned BLEU scores. Thanks! Mohammed Ayub |

Hi,

I have created a new vocabulary files (source and target) on the domain data set and have updated the base model checkpoint file using the below statment:

onmt-update-vocab--model_dir /home/ubuntu/mayub/datasets/in_use/euro/run1/en_es_transformer_b/--output_dir /home/ubuntu/mayub/datasets/in_use/euro/run1/en_es_transformer_b/added_vocab/--src_vocab /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/src_vocab_50k.txt--tgt_vocab /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/trg_vocab_50k.txt--new_src_vocab /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/src_vocab_nfpa_50k.txt--new_tgt_vocab /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/trg_vocab_nfpa_50k.txtThis generates the new checkpoint file which I pass to the fine tuning train_and_eval command:

onmt-main train_and_eval--model_type Transformer--checkpoint_path /home/ubuntu/mayub/datasets/in_use/euro/run1/en_es_transformer_b/added_vocab/--config /home/ubuntu/mayub/datasets/in_use/euro/run1/config_run_da_nfpa.yml--auto_config --num_gpus 8Changes I have made to the config file -only updated the train and eval feature and labels file (source and target vocabulary are same)

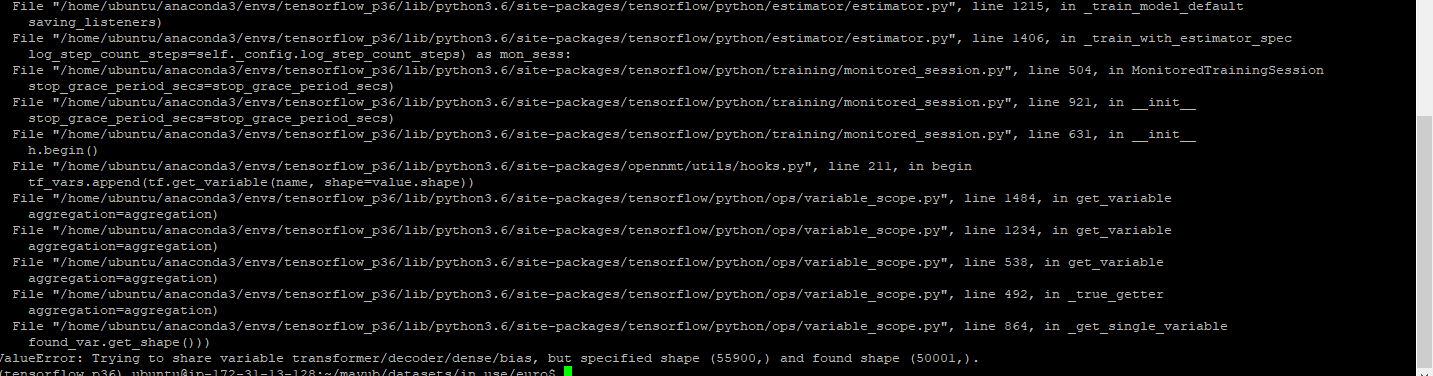

data:train_features_file: /home/ubuntu/mayub/datasets/in_use/euro/run1/nfpa_train_tokenized_bpe_applied.entrain_labels_file: /home/ubuntu/mayub/datasets/in_use/euro/run1/nfpa_train_tokenized_bpe_applied.eseval_features_file: /home/ubuntu/mayub/datasets/in_use/euro/run1/nfpa_dev_tokenized_bpe_applied.eneval_labels_file: /home/ubuntu/mayub/datasets/in_use/euro/run1/nfpa_dev_tokenized_bpe_applied.essource_words_vocabulary: /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/src_vocab_50k.txttarget_words_vocabulary: /home/ubuntu/mayub/datasets/in_use/euro/train_vocab/trg_vocab_50k.txtBelow is the error I'm getting:

Not sure where I'm going wrong. Any help appreciated.

Thanks !

Mohammed Ayub

The text was updated successfully, but these errors were encountered: