-

Notifications

You must be signed in to change notification settings - Fork 183

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Request for help with understanding the poses #78

Comments

semantic-kitti-api/generate_sequential.py Lines 42 to 68 in c2d7712

Line 66 does the conversion: poses.append(np.matmul(Tr_inv, np.matmul(pose, Tr))) where Tr is the extrinsic calibration matrix from velodyne to camera.

diff = np.matmul(inv(pose), past["pose"])And yes, if you done the math correctly, then the static parts should be at the same place. hope that helps. |

|

Thank you so much for the guidance. We can see the 3 trees/ pillar like structures have come closer, but it is still not a perfect overlap. Also, the car at the right side near ego vehicle does not fully overlap. Here is the code snippent: `# load this frame and it's pose Is this what you would also expect or is there something I have done wrong? Thank you again for helping and looking forward to your comment. Best Regards |

|

No that's not expected. With our poses, all frames should be properly aligned. Let's say your poses are T1,T2, and T3. You now want to have everything in the reference frame of T3, then it's T3' = inv(T3)*T3 Obviously T3' is the identity. Operationally you can think of this like this: You first move the points to world coordinates and then substract the pose of T3. If you do it correctly, then the prior frames should be properly aligned. Hope that clarifies everything. |

|

Thank you for the super fast reply. I quickly gave it a try:

Here past_1 means frame - 1 Unfortunately, I still see the same 'not perfect' alignment when I concatenate the point clouds (I use np.vstack()). frame_velo_pose --> T3 (frame3) So, frame_velo_pcl_past1_mcomp = np.dot(pose_past1_comp, frame_velo_pcl_past1.T).T --> (inv(T3) * T2) * pcl_frame_1 Unfortunately I just don't see what am I doing different. Best Regards |

|

I noted you mention about the subtraction operation. But as I understand, I do not really should be subtracting anything, right? |

|

there is no substraction. it was meant as metaphor. Please refer to: https://github.com/PRBonn/semantic-kitti-api/blob/master/generate_sequential.py Specifically: semantic-kitti-api/generate_sequential.py Lines 180 to 202 in c2d7712

|

|

Okay, I shall give it a try again. Though I was actually referring this very code to begin with. I shall let you know as I make progress. I have a strong suspicion about the way I concatenate the points after transforming. May be I am doing something wrong there. Good Night and thank you, again!! Best Regards |

|

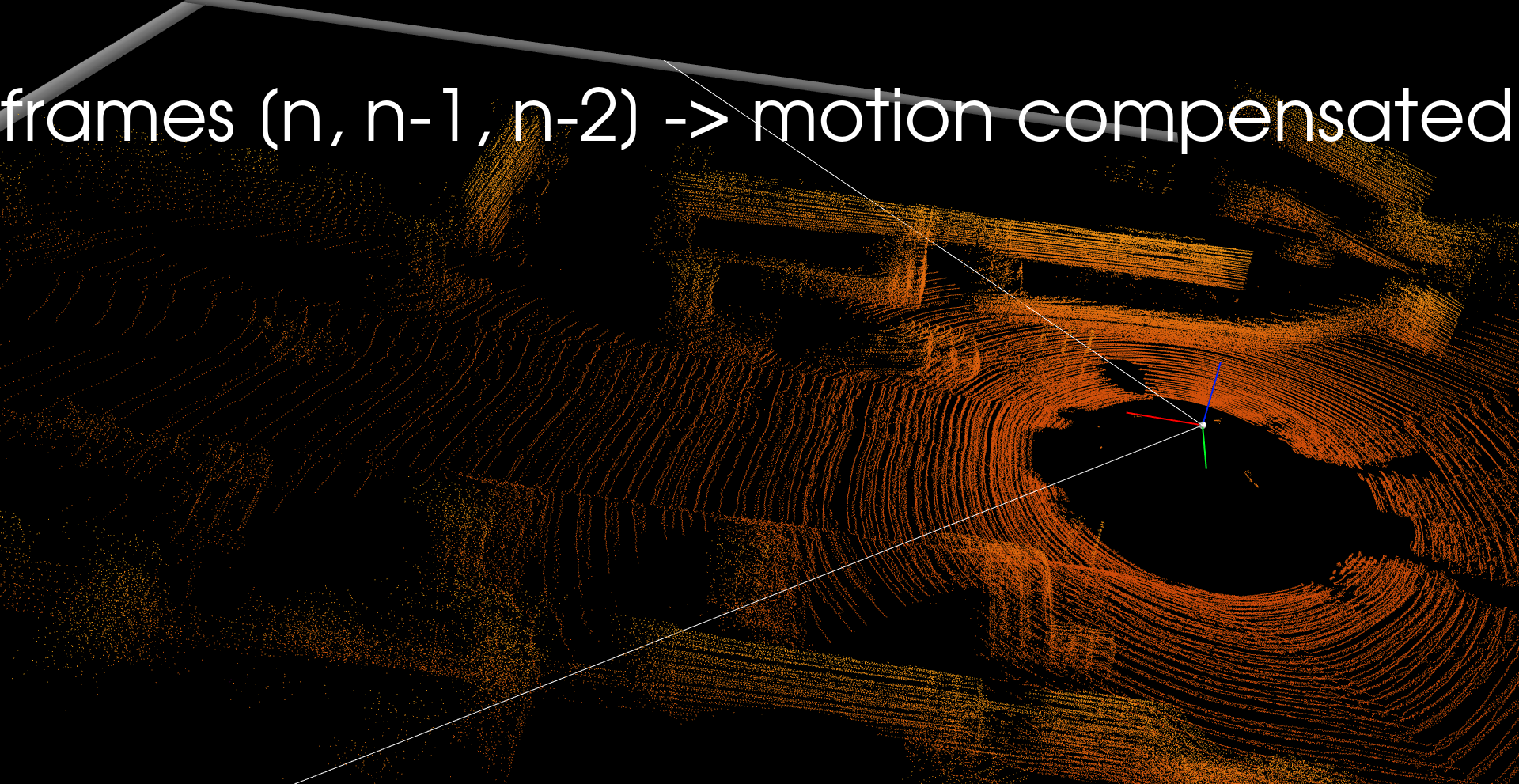

Hi, I am happy to inform - I found out the problem - I forgot to convert to homogeneous coordinates, hence the offsets. Here is how it looks now The static trees and poles are perfectly aligned now. The motorcycle can be seen moving. Thank you a lot!! Best Regards |

|

Good that it works now. Good luck with everything that you now build ontop! :) I will close now the issue. |

|

Hi, Thanks and looking forward to your reply. |

|

please have a look at the voxelizer (https://github.com/jbehley/voxelizer), where I create for scene completion a voxel grid of past and future scans. There are all necessary transformations included. You can have a look at the gen_data.cpp where this is achieved without the GUI, but it's as simple as having the pose of scan n and multiplying it with the inverse of (n+i, where i = -k, ..., -1, 1, ..., k), see https://github.com/jbehley/voxelizer/blob/0ace074b8981ded4c9bb73ad362b44ae2e57ad34/src/data/voxelize_utils.cpp#L183 hope that helps. If you have specific questions, then please open a new issue to also allow other to find it. |

Hi,

First, thank you so much for this great effort. Wonderful work!!

Now, I am trying to do motion compensation across frame n, n-1, n-2 ...

However, being a beginner in robotics, I have some questions regarding the poses:

The poses are in camera coordinates as I see from the site, so they should be converted to velodyne before applying, is this correct? I think the generate_sequential.py file shows this?

What is the reference of each pose P[i] - robot/ velodyne pose wrt to world coordinates or wrt previous pose p[i-1]?

Here again, I tried referring the generate_sequential.py script, but, I do not see a lot of difference after applying the compensation step, the concatenated point clouds look almost similar to what they look without any motion compensation (not applying the pose matrix).

Ideally, I expect, after motion compensation, all static points should overlap to a large extent, leaving only mobile objects appearing multiple times. Is this expectation correct?

Please help me understand this. I would really appreciate if you could present a small example as well.

Best Regards

Sambit

The text was updated successfully, but these errors were encountered: