Is there any reason for j to evaluate when i returns 0 rows? #2662

Comments

I use I probably wrote the latter a dozen times yesterday. I imagine I might also do Somewhat related: #2022

|

Interesting... so then maybe there's some compromise to strike where `j` is inspected for structure but not evaluated?

|

Hm, maybe you have a better example? Your example fails not only with zero rows but also with one (assuming you use a variable of a valid class, so not NA): (So this seems to argue for the

|

FWIW, the behavior of data.table changed in 1.9.8 so that `j` is evaluated regardless of how many rows `i` selects. There is some lengthy discussion here along with examples: For my team, this change was (and is) a big problem. It caused us to lock data.table to 1.9.6 with no plans to update, as it is too much overhead to find all uses of data.table that would be affected. It would help us immensely if there were an option to not evaluate `j` when `.N` is 0.
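To make the request above concrete, here is a minimal sketch of what a user-side guard could look like, assuming data.table 1.9.8 or later. The helper name `eval_j_if_rows` and the toy table are hypothetical and not part of data.table; the idea is only to subset first and run the `j` step when rows were actually selected.

```r
library(data.table)

# Toy data, for illustration only
DT <- data.table(id = 1:3, v = c(10, 20, 30))

# On 1.9.8 or later, j is evaluated even though `id > 5` selects no rows
n_empty <- DT[id > 5, .N]

# Hypothetical guard (not a data.table function):
# apply the j step only when the subset has at least one row
eval_j_if_rows <- function(sub, j_fun) {
  if (nrow(sub) == 0L) return(NULL)
  j_fun(sub)
}

# No rows selected, so the j step is skipped and NULL comes back
guarded <- eval_j_if_rows(DT[id > 5], function(d) d[, .(top = max(v))])

# Rows selected, so the j step runs as usual
nonempty <- eval_j_if_rows(DT[id > 1], function(d) d[, .(top = max(v))])
```

This costs an extra subset per call, so it is a workaround for code that relied on the 1.9.6 behaviour rather than a replacement for a built-in option.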

|

I used to use @brianbolt you should be able to use the dtq package to identify every case like this without much hassle: https://github.com/jangorecki/dtq

|

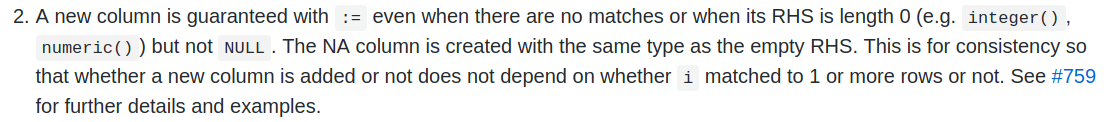

Here's the news item from 1.9.8 in the potentially-breaking-changes section at the top: The driver was #759 contains this comment from me:

In this case, the example data and example expression are critical. Consider the provided example so far : but you get that error anyway, regardless of how many In this comment, Uwe changed his RHS expression to be a different function that is robust to empty input. That's fixing the root cause and seems right to me. I added a further comment here to show what I mean. Similarly, we need better examples to consider.

@brianbolt So you're stuck at 1.9.6 but it is working. You know you're depending on that behaviour in 1.9.6 (because it broke in 1.9.8), but pinpointing which lines depend on it is what you'd like, so you can migrate safely. If I understand correctly, you accept the new behaviour is the right way to go? If not, let's continue to discuss the cases with better examples from you. If yes, what might work is a modified version of 1.9.6 that emits a trace every time `i` is empty and does not run `j`. This way you get no other breakage, but you've identified the dependent lines and can fix them. If that's what you'd like, maybe that's possible.
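As an illustration of what "robust to empty input" can mean for a `j` expression, here is a small sketch. It is not the expression from the linked comments, which are not reproduced in this thread; the helper `max_or_na` and the toy data are hypothetical, and data.table 1.9.8 or later is assumed.

```r
library(data.table)

# Toy data, for illustration only
DT <- data.table(grp = c("a", "a", "b"), v = c(1, 5, 3))

# Hypothetical helper: max() warns and returns -Inf on zero-length input,
# so return NA_real_ explicitly when there is nothing to summarise
max_or_na <- function(x) if (length(x) > 0L) max(x) else NA_real_

# `i` matches no rows; j still runs (1.9.8+) but handles the empty vector
res_empty <- DT[grp == "z", max_or_na(v)]

# `i` matches rows; the helper behaves like plain max()
res_a <- DT[grp == "a", max_or_na(v)]
```

The design choice here is to fix the expression rather than the subsetting: the same `j` then gives a well-defined answer whether or not `i` finds any rows.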

|

Anyway, it's nice to hear people have production code using data.table. Most people weighing up the options don't think you exist. If folk who do have production code using data.table could think of ways they could support the project, that would be great, @brianbolt.

|

@mattdowle I'm planning to use data.table in production code as well. Would be nice if there was a function to check R-script files ( (Although this isn't support, it could serve imo as a direction for improving the chances of data.table being used in production code)

|

I had a few more thoughts on this. In no particular order.

I do admit that I could have handled 1.9.8 better, and there should have been a migration option for this change. That wasn't "the best we could have done"; I could have added an option too, and should have done.

The fault was not providing an upgrade option in this case. Over in PR #2652, I've been tamed by others on this project, and now we're not only providing options to return the old behaviour but also not even changing the default yet, just giving notice that it will change in future. This is taking an enormous amount of time for so few people on this project. I haven't even started CRAN_Release procedures yet to check reverse dependencies (over 300). We should have a pre-release code freeze too at some point.

|

The question asked by Michael in this issue has been answered.

This returns

I'm surprised we got to `j`. Is there any reason we'd want to evaluate `j` here?

I guess this is to cover grouping situations where we want to make sure each group returns the same shape, even if it has 0 rows?

If so, is there not something we can do to be smarter about how to handle this?

(in this situation, I'm not even sure what my expected output is, TBH)
