get_lidar_all #106

Comments

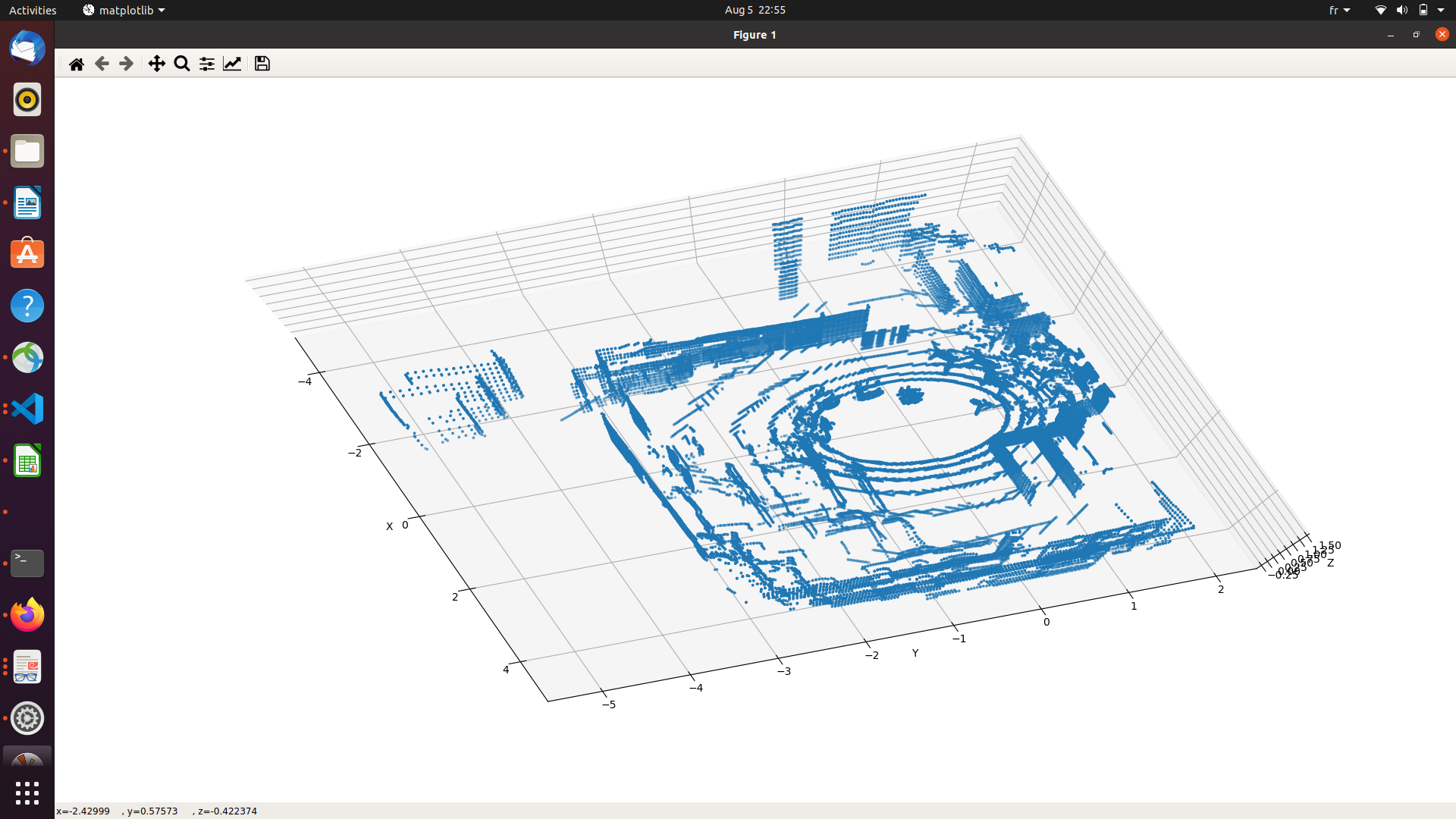

Hi @elhamAm, I cannot seem to reproduce your issue. I used the following script to generate 360 degree lidars by modifying Script:

    import os

    import matplotlib.pyplot as plt
    import numpy as np
    # Importing Axes3D registers the 3D projection on older Matplotlib versions.
    from mpl_toolkits.mplot3d import Axes3D

    import igibson
    from igibson.objects.ycb_object import YCBObject
    from igibson.render.mesh_renderer.mesh_renderer_settings import MeshRendererSettings
    from igibson.render.profiler import Profiler
    from igibson.robots.turtlebot_robot import Turtlebot
    from igibson.scenes.gibson_indoor_scene import StaticIndoorScene
    from igibson.simulator import Simulator
    from igibson.utils.utils import parse_config


    def main():
        config = parse_config(os.path.join(igibson.example_config_path, 'turtlebot_demo.yaml'))
        settings = MeshRendererSettings()
        s = Simulator(mode='gui',
                      image_width=256,
                      image_height=256,
                      rendering_settings=settings)

        # Load the static 'Rs' scene and place a Turtlebot in it.
        scene = StaticIndoorScene('Rs',
                                  build_graph=True,
                                  pybullet_load_texture=True)
        s.import_scene(scene)
        turtlebot = Turtlebot(config)
        s.import_robot(turtlebot)

        # Apply one action and step the simulation once before scanning.
        turtlebot.apply_action([0.1, -0.1])
        s.step()

        # Collect the full 360 degree lidar point cloud.
        lidar = s.renderer.get_lidar_all()
        print(lidar.shape)

        # Plot the points in 3D; the y and z columns are swapped for display.
        fig = plt.figure()
        ax = fig.add_subplot(projection='3d')
        ax.scatter(lidar[:, 0], lidar[:, 2], lidar[:, 1], s=3)
        plt.show()

        s.disconnect()


    if __name__ == '__main__':
        main()

Output:

Yes, it's working for me too, sorry and thank you very much! I must've changed a value in the rotation matrices by accident.

iGibson/igibson/render/mesh_renderer/mesh_renderer_cpu.py

Line 1390 in 5f8d253

You are correct that r2 rotates the camera by pi/2 about the z axis every time. The values returned by
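As a rough illustration of that quarter-turn behaviour, the sketch below assumes `r2` is a pure 4x4 rotation by pi/2 about the z axis, as the comment describes. `rot_z` is a hypothetical helper written for this example and is not part of iGibson; the renderer's actual matrix and axis convention may differ.

```python
import numpy as np


def rot_z(theta):
    """Return a 4x4 homogeneous rotation by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [c, -s, 0.0, 0.0],
        [s, c, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ])


# Stand-in for the r2 discussed above (assumed form, not copied from iGibson).
r2 = rot_z(np.pi / 2)

# Start from an identity pose and turn a quarter circle per iteration,
# recording which way the local x axis points before each turn.
pose = np.eye(4)
headings = []
for _ in range(4):
    forward = pose[:3, :3] @ np.array([1.0, 0.0, 0.0])
    headings.append(forward)
    pose = pose @ r2

# Four quarter turns bring the pose back to where it started.
full_turn = np.allclose(pose, np.eye(4))
```

Under this assumption the four headings point along +x, +y, -x and -y, so four scans taken this way cover the full circle.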

Thank you very much! What if we are doing both translation and rotation on the camera? How would the transformation matrix look then, with regard to the additional translation of the camera?

Would get_lidar_all() with translation be something like this?

@elhamAm you are right that the previous code doesn't handle rotation correctly. It only renders the lidar as if the scanner is parallel to the floor. I handled it in a future update and still need to test it more before merging it upstream. I also got rid of the need to use multiple transformation matrices, so it should look cleaner and be easier to understand. You can use the code here:

Same as before, you can use

Hello @fxia22,

File "/home/elham/fxia/gibson_demos/igibson/object_states/__init__.py", line 1, in

And then, if I replace non_sampleable_categories.txt in utils/constants.py with categories.txt, I get the errors below: exec(code, run_globals)

Hi @elhamAm you don't have to use that branch, you can just copy

Actually @elhamAm you can just pull master, since those changes have just been applied to the master branch.

Thank you. Why is the rotation matrix 4 x 4 instead of 3 x 3? Would a translation vector also be 1 x 4?

The transformation matrix is usually 4x4 to have both rotation and translation. If the translation is 0, it becomes a rotation matrix. I used it because it is convenient to multiply with the view matrix of the renderer. I guess you can read about these things further: http://www.opengl-tutorial.org/beginners-tutorials/tutorial-3-matrices/
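To make the 4x4 layout concrete, here is a minimal sketch of a homogeneous transform that carries a 3x3 rotation in its upper-left block and a 3-element translation in its last column. `make_transform` and `apply_transform` are hypothetical helpers for this example, not iGibson functions, and the column-vector convention used here is an assumption.

```python
import numpy as np


def make_transform(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into one 4x4 matrix."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def apply_transform(T, points):
    """Apply a 4x4 transform to an (N, 3) array of points."""
    # Append w = 1 so the translation column takes effect.
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (homogeneous @ T.T)[:, :3]


# Quarter turn about the z axis, followed by a shift of (1, 2, 3).
R = np.array([
    [0.0, -1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
])
t = np.array([1.0, 2.0, 3.0])
T = make_transform(R, t)

points = np.array([[1.0, 0.0, 0.0]])
moved = apply_transform(T, points)  # (1, 0, 0) rotates to (0, 1, 0), then shifts to (1, 3, 3)

# With zero translation the same matrix acts as a plain rotation.
rotation_only = make_transform(R, np.zeros(3))
```

In this layout the translation itself stays a 3-vector; it is the points that gain a fourth component equal to 1 so that a single matrix product applies both rotation and translation.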

Thank you again. `def get_lidar_all(self, offset_with_camera=np.array([0, 0, 0])):`

I think when you try to overlap multiple scans, they don't seem to be aligned. You would need to convert the lidar scans from camera frame to world frame and then put them together.
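The conversion suggested here can be sketched as follows, assuming each scan comes with a 4x4 view matrix that maps world coordinates to camera coordinates (the usual OpenGL meaning of a view matrix). `camera_to_world`, `merge_scans` and `pose` are hypothetical helpers for this example; whether the renderer stores its view matrix in exactly this form is an assumption.

```python
import numpy as np


def camera_to_world(points_cam, view_matrix):
    """Map (N, 3) camera-frame points to the world frame.

    view_matrix is assumed to map world coordinates to camera coordinates,
    so its inverse maps camera coordinates back to the world.
    """
    cam_to_world = np.linalg.inv(view_matrix)
    homogeneous = np.hstack([points_cam, np.ones((len(points_cam), 1))])
    return (homogeneous @ cam_to_world.T)[:, :3]


def merge_scans(scans, view_matrices):
    """Bring every scan into the world frame and stack them into one cloud."""
    return np.vstack([camera_to_world(s, V) for s, V in zip(scans, view_matrices)])


def pose(theta, position):
    """Camera pose: rotation by theta about z plus a translation."""
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = position
    return T


# One world point observed from two different camera poses.
world_point = np.array([[2.0, 0.0, 0.5]])
poses = [pose(0.0, [0.0, 0.0, 0.0]), pose(np.pi / 2, [1.0, -1.0, 0.0])]
views = [np.linalg.inv(P) for P in poses]

# Simulate each scan by expressing the world point in that camera's frame.
scans = [(np.hstack([world_point, [[1.0]]]) @ V.T)[:, :3] for V in views]

# After conversion both observations land on the same world coordinates.
merged = merge_scans(scans, views)
```

The two simulated scans disagree in their own camera frames but coincide once mapped back, which is the alignment property needed before stacking scans from different poses.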

Then how come for just rotation this was not an issue?

Also, what is self.V?

It's working now, thank you very very much for your help :) There was no need for a frame change.

Can you post your solution here? Thanks.

Thanks. Closing this issue since the problem is solved.

Hello,

iGibson/igibson/render/mesh_renderer/mesh_renderer_cpu.py

Line 1390 in 5f8d253

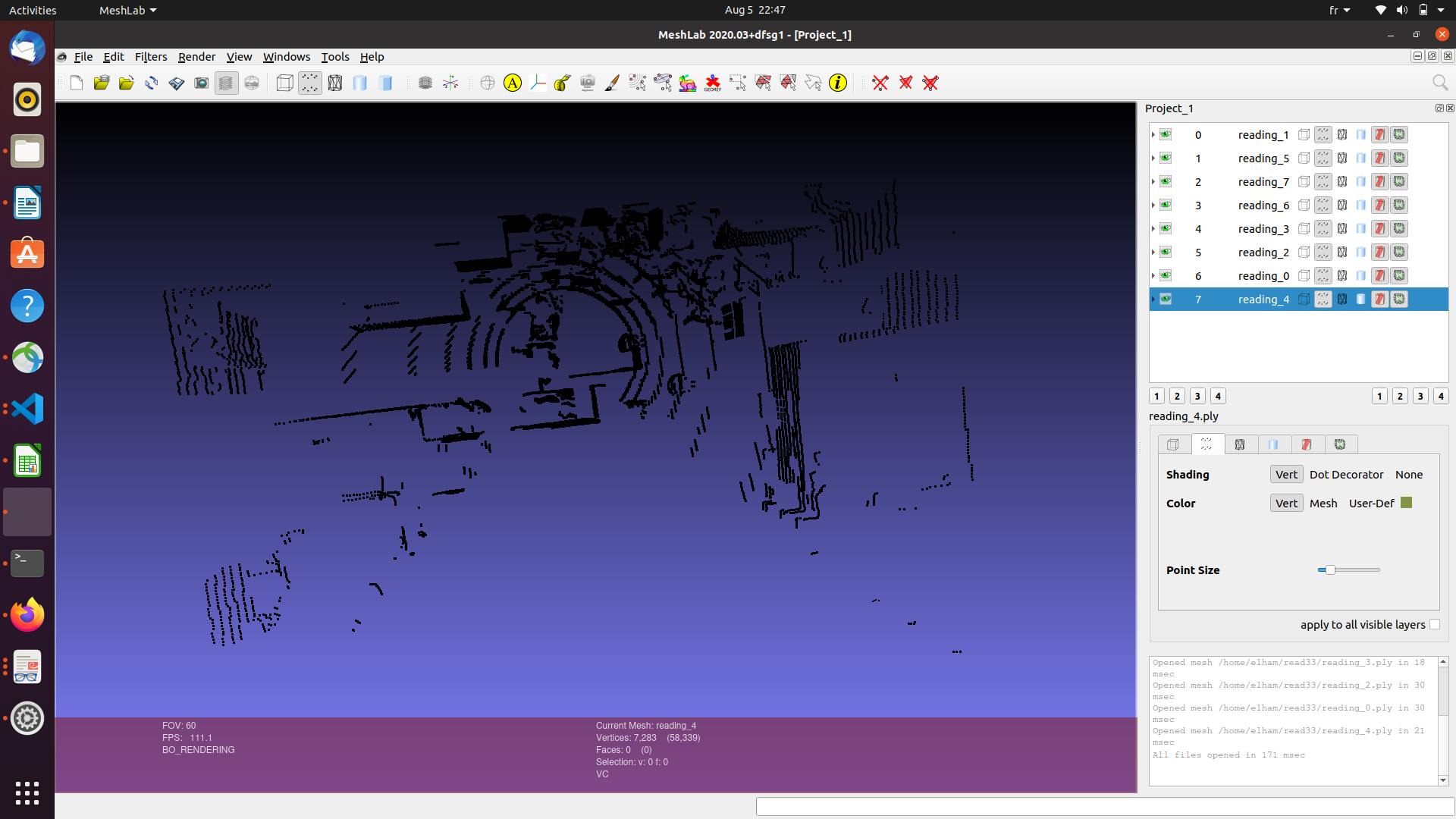

The function get_lidar_all is not working. The camera does not turn during the 4 iterations. So the result of the readings is the same chair scene rotated 90 degrees, 4 times and patched together.

I am trying to reconstruct a 360-degree scene by transforming the 3D streams into the global coordinate system and patching them together, but nothing is working. Please help.
