Fix NIOSSL.NIOSSLError.uncleanShutdown

#1015

Comments

Manually running the analysis job from the

The same happens when running from within the

Seeing the same error now also in the processing containers on

Also note: the unclean shutdown error might be a red herring and not be indicative of processing failing. In fact, running the However, I've also explicitly added But the error message clearly doesn't have anything to do with the fact that reconciliation didn't work.
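
One way to check whether the unclean shutdown is a red herring is to report failures in the work itself separately from errors raised while the connection is being torn down. A minimal Swift sketch of that idea, under stated assumptions: `StandInSSLError` is a hypothetical stand-in for `NIOSSL.NIOSSLError` so the snippet compiles without dependencies, and `JobOutcome`, `runJob`, and the `work`/`close` closures are illustrative names, not part of this project.

```swift
// Hypothetical stand-in for NIOSSL's error type, so the sketch has no dependencies.
// Assumption: real code would `import NIOSSL` and match `NIOSSLError.uncleanShutdown`.
enum StandInSSLError: Error {
    case uncleanShutdown
    case handshakeFailed
}

// Illustrative result type: keeps "work failed" apart from "only the close was noisy".
enum JobOutcome: Equatable {
    case succeeded
    case succeededWithShutdownNoise
    case failed(String)
}

/// Runs `work`, then `close`. An unclean-shutdown error raised while closing
/// is reported separately from a failure in the work itself.
func runJob(work: () throws -> Void, close: () throws -> Void) -> JobOutcome {
    do {
        try work()
    } catch {
        return .failed("work failed: \(error)")
    }
    do {
        try close()
    } catch StandInSSLError.uncleanShutdown {
        // The work already completed; only the TLS teardown was unclean.
        return .succeededWithShutdownNoise
    } catch {
        return .failed("close failed: \(error)")
    }
    return .succeeded
}

// Hypothetical usage: the processing step succeeds, the teardown does not.
let outcome = runJob(
    work: { /* processing step would go here */ },
    close: { throw StandInSSLError.uncleanShutdown }
)
print(outcome)
```

If the outcome is the "shutdown noise" case while the database still was not updated, the cause most likely lies elsewhere, which matches the observation above that the error message has nothing to do with reconciliation not working.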

p3 can't connect to db

p3 failed to update db

So to summarise:

I'm not sure yet how to test

Almost all jobs are scheduled on So far everything looks to be in order but we haven't added any new packages yet. Will need to keep an eye on that.

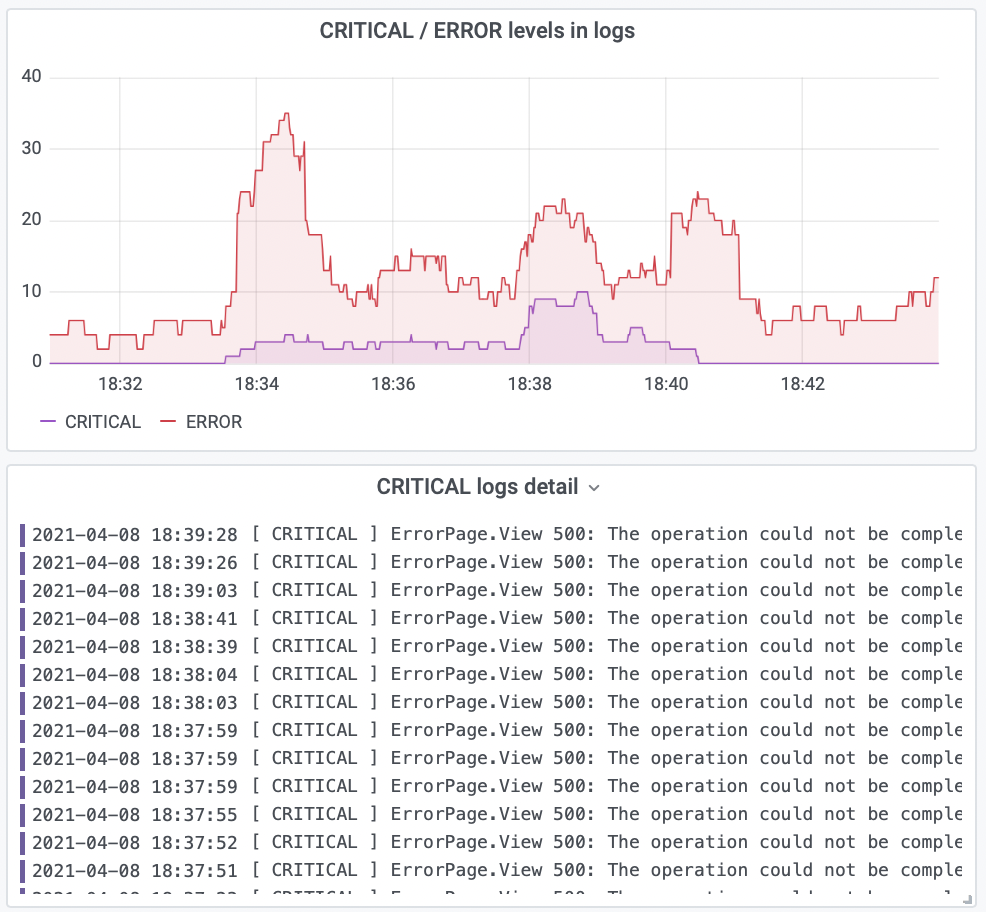

Last night we saw increased rates of 500s, presumably caused by the Might have been coincidental, but it seems to me that there is an intermittent db connectivity issue. If this persists, I think we need to consider moving back to a containerised db. This would fix several problems:

Of course, the downside is availability, but we've not had any issues with that over months of use.

p3 failed to update db

NIOSSL.NIOSSLError.uncleanShutdown

I've asked in Vapor's Discord if anyone has ideas on how to fix this: https://discord.com/channels/431917998102675485/448584561845338139/833567195849424956

Azure ticket opened: Case 2104190050000549 Your question was successfully submitted - TrackingID#2104190050000549

Helpful gist to run a local Postgres with SSL in docker: https://gist.github.com/mrw34/c97bb03ea1054afb551886ffc8b63c3b Although note that the

Good news, looks like there'll be a fix incoming: vapor/postgres-nio#150 (comment)

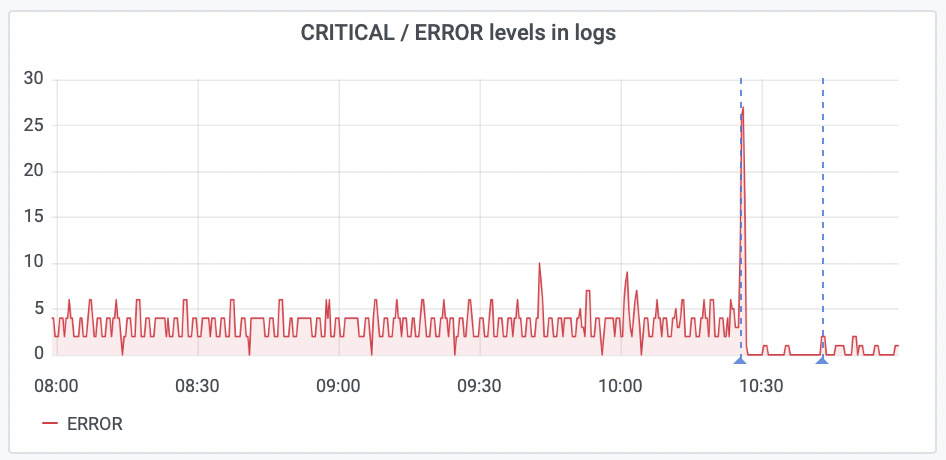

Confirmed fixed on staging:

The spike when the first deployment (2.26.1) went out with the PG NIO fix (around 10:25) comes from a bunch of SSL errors that were stacking up as the services restarted. Notice how the follow-up deployment 2.26.2 a few minutes later doesn't cause a spike.

Follow-up from #1014

They fail with:

Interestingly, `app_server` does seem to be able to connect: