tensorflow-tts-normalize: "UnboundLocalError: local variable 'subdir' referenced before assignment" #19

Comments

@ZDisket can you check the code in tensorflow_tts/bin/normalize.py? That means your utt_id somehow does not exist in either train_utt_ids.npy or valid_utt_ids.npy.
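A minimal sketch of how this kind of error can arise, assuming the script chooses an output subdirectory by checking membership in the two ID lists with no fallback branch. The helper name `pick_subdir` and the sample IDs are hypothetical and are not taken from normalize.py:

```python
def pick_subdir(utt_id, train_utt_ids, valid_utt_ids):
    """Hypothetical helper: choose the output split for an utterance ID."""
    if utt_id in train_utt_ids:
        subdir = "train"
    elif utt_id in valid_utt_ids:
        subdir = "valid"
    # No else branch: if utt_id is in neither list, `subdir` is never assigned,
    # so the return below raises UnboundLocalError.
    return subdir


# Hypothetical ID lists standing in for train_utt_ids.npy / valid_utt_ids.npy
train_ids = ["file0001", "file0002"]
valid_ids = ["file0003"]

print(pick_subdir("file0001", train_ids, valid_ids))

try:
    # An ID that matches neither list (the "-raw" suffix is made up)
    pick_subdir("file0001-raw", train_ids, valid_ids)
except UnboundLocalError as err:
    print("UnboundLocalError:", err)
```

If this is what is happening, printing the offending utt_id next to a few entries from both ID files should show where the names diverge.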

@dathudeptrai

I've replaced

@ZDisket so it's not my bug, right? BTW, which model will you be training?

I think it's your bug, I haven't done anything to change its behavior so that it would act like that, only to fix it.

I'm trying to fine-tune the pre-existing LJSpeech model with that of a fictional character. By the way, is it normal for it to take 1 hour and 30 minutes for 1200 iterations (12 epochs) on a Tesla P100? Seeing the alignment figures, I'm assuming 1 iteration from this implementation is worth like 20 or 30 from the others I'm used to.
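For reference, the timing quoted above can be converted to a per-iteration figure. This is plain arithmetic on the numbers in the comment, not a measurement:

```python
total_seconds = 90 * 60   # 1 hour 30 minutes
iterations = 1200

seconds_per_iteration = total_seconds / iterations
print(seconds_per_iteration)  # 4.5
```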

@ZDisket For Tacotron-2, the training speed is 4 s/it on a 2080 Ti; FS (FastSpeech) runs at 3 it/s, melgan at 5 it/s, and melgan.stft at 2.5 it/s. As far as I know, the speed of Tacotron-2 is comparable to the NVIDIA PyTorch implementation, and FS and melgan are currently the fastest to train of all the frameworks I've tried. BTW, I don't know why your utt_ids contain "raw". Looking at the LJSpeech processor, if your wav_path is ./.../file2292.wav, then the returned utt_id is file2292.
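The path-to-ID mapping described above can be sketched as follows. This is an illustration of the idea (file name without directory or extension), not the processor's actual code; the function name and the example path are hypothetical:

```python
import os


def utt_id_from_wav_path(wav_path):
    """Hypothetical helper: derive an utterance ID from a wav file path."""
    file_name = os.path.basename(wav_path)   # e.g. "file2292.wav"
    utt_id, _ext = os.path.splitext(file_name)  # drop the ".wav" extension
    return utt_id


print(utt_id_from_wav_path("wavs/file2292.wav"))  # file2292
```

Under this scheme, an ID containing "raw" would only appear if the file name itself (or a later processing step) added it.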

@dathudeptrai

Neither do I.

Maybe :)). The repo was released just 18 hours ago. BTW, if you see any model here training slower than in other repos, let me know :)) I will focus on making training and convergence faster :D

I can't say how it compares to NVIDIA/Tacotron2, but your repo is already looking better than ESPNet.

@ZDisket which dataset are you using, and how many samples does it have?

@dathudeptrai

@ZDisket the dataset seems small :)).

That's the best-case scenario of all the datasets I have. By the way, to fine-tune MelGAN-STFT, do I load the pretrained weights for both the discriminator and the generator, or only one?

That's your choice :))) I think both :D

I'm closing the issue :D

I've formatted my dataset like the LJSpeech one in the README so I can skip writing a dataloader for finetuning.

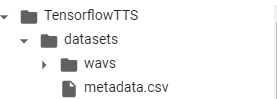

This is my directory

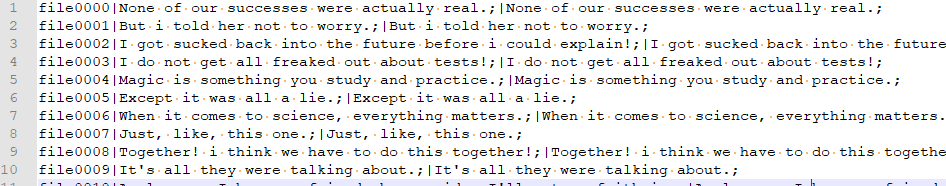

And this is my metadata.csv. I've made it `fileid|transcription|transcription` because in ljspeech.py there was `text = parts[2]`, which was giving me index out of range errors with just `fileid|trans`

And this is a small portion of `os.listdir("wavs")`

All the preprocessing steps run fine until the normalization one:

Am I doing something wrong?
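The index error mentioned above can be reproduced in a minimal sketch, assuming the loader splits each metadata line on `|` and reads the third field (as the quoted `text = parts[2]` suggests). The function name and the sample lines are hypothetical:

```python
def parse_metadata_line(line):
    """Hypothetical parser: split a metadata.csv line and read the third field."""
    parts = line.strip().split("|")
    file_id = parts[0]
    text = parts[2]  # raises IndexError when the line has only two fields
    return file_id, text


# Three fields: works
print(parse_metadata_line("file0001|hello there|hello there"))

# Two fields: parts[2] does not exist
try:
    parse_metadata_line("file0001|hello there")
except IndexError as err:
    print("IndexError:", err)
```

Repeating the transcription as a third field, as done here, is one way to satisfy a parser of this shape without changing the loader.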
