Benchmark: TPC-H, TerarkDB vs RocksDB (Server B)

rockeet edited this page Mar 29, 2017


We use the lineitem table from the TPC-H dataset, with the length of the comment text field in dbgen's lineitem generator raised from 27 to 512. As a result, the average row length of the lineitem table is 615 bytes. The key is the combination of the first 3 fields, each of which is an integer printed as a decimal string.

The total size of the dataset is 554,539,419,806 bytes across 897,617,396 rows. Keys account for 22,726,405,004 bytes in total; the rest is values.
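As a quick cross-check of the figures above, the following C++ sketch derives per-row averages from the reported totals. The struct and function names are illustrative only; they are not part of either benchmark tool.

```cpp
#include <cassert>
#include <cstdint>

// Totals reported for the modified TPC-H lineitem dataset.
constexpr std::uint64_t kTotalBytes = 554539419806ULL;
constexpr std::uint64_t kTotalRows  = 897617396ULL;
constexpr std::uint64_t kKeyBytes   = 22726405004ULL;

// Hypothetical summary of how the bytes split between keys and values.
struct DatasetStats {
    std::uint64_t value_bytes;  // everything that is not key
    double avg_row_bytes;       // key + value per row
    double avg_key_bytes;
    double avg_value_bytes;
    double key_fraction;        // share of all bytes taken by keys
};

DatasetStats ComputeStats(std::uint64_t total, std::uint64_t rows, std::uint64_t keys) {
    assert(rows > 0 && keys <= total);
    DatasetStats s{};
    s.value_bytes = total - keys;
    s.avg_row_bytes = static_cast<double>(total) / rows;
    s.avg_key_bytes = static_cast<double>(keys) / rows;
    s.avg_value_bytes = static_cast<double>(s.value_bytes) / rows;
    s.key_fraction = static_cast<double>(keys) / total;
    return s;
}
```

With the totals above, `ComputeStats(kTotalBytes, kTotalRows, kKeyBytes)` yields 531,813,014,802 bytes of values, about 25.3 bytes per key and about 592.5 bytes per value, for roughly 618 bytes per row, in line with the ~615-byte average stated earlier.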

TPC-H dbgen generates raw string data, which we use directly in our test without any transformation.
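dbgen writes each row as `|`-delimited text. The sketch below shows one way to cut a raw row into a key (the first three fields) and a value (the rest) without transforming the data. It is an illustration under assumptions: the page does not say how the three fields are joined, so this version simply keeps the original `|` separators between them, and `SplitLineitemRow` is a hypothetical helper, not code from the benchmark. It requires C++17.

```cpp
#include <cstddef>
#include <optional>
#include <string_view>
#include <utility>

using KeyValue = std::pair<std::string_view, std::string_view>;

// Hypothetical helper: key = first three '|'-separated fields (separators kept,
// an ASSUMPTION), value = everything after the third separator.
std::optional<KeyValue> SplitLineitemRow(std::string_view row) {
    std::size_t pos = 0;
    for (int field = 0; field < 3; ++field) {
        std::size_t sep = row.find('|', pos);
        if (sep == std::string_view::npos) return std::nullopt;  // fewer than 3 fields
        pos = sep + 1;  // continue scanning after this separator
    }
    // Drop the third separator from the key; the value starts right after it.
    return KeyValue{row.substr(0, pos - 1), row.substr(pos)};
}
```

For an illustrative row `7|1|2|3|rest|`, the key is `7|1|2` and the value is `3|rest|`; rows with fewer than three fields yield `std::nullopt`.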

| Server B | Specification |
|---|---|
| Number of CPUs | 2 |
| CPU Type | Xeon E5-2630 v3 |
| CPU Freq. | 2.4 GHz |
| CPU Actual Freq. | 2.6 GHz |
| Cores per CPU | 8 cores with 16 threads |
| Cores in Total | 16 cores with 32 threads |
| CPU Cache | 20 MB |
| CPU bogomips | 4793 |
| Memory | 64 GB |
| Memory Freq. | DDR4-1866 |
| SSD Capacity | 4 × 480 GB |
| SSD IOPS | 2 × Intel 730 (89,000 IOPS), 2 × Intel 530 (41,000 IOPS) |

| | RocksDB | TerarkDB |
|---|---|---|
| Number of Levels | 4 | 4 |
| Compression | Level 0: no compression; levels 1~3: Snappy | All levels: Terark compression |
| Compaction Type | Level-based compaction | Universal compaction |
| MemTable Size | 1 GB | 1 GB |
| Cache Size | 16 GB | Not needed |
| MemTable Number | 3 | 3 |
| Write-Ahead Log | Disabled | Disabled |
| Compaction Threads | 2~4 | 2~4 |
| Flush Threads (MemTable Compression) | 2 | 2 |
| Terark Compression Threads | N/A | 12 |
| Working Threads | 25 in total: 24 random-read, 1 write | 25 in total: 24 random-read, 1 write |
| `target_file_size_base` | Default (64 MB) | 1 GB |
| `target_file_size_multiplier` | 1 (SST size does not influence RocksDB performance) | 5 |
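The two `target_file_size_*` rows combine through RocksDB's documented rule for level-style compaction: the target SST size at level L is `target_file_size_base * target_file_size_multiplier^(L-1)` for L ≥ 1. The sketch below evaluates that rule for both configurations. Assumptions: "1G" means 1 GiB, and the same rule is applied to TerarkDB even though TerarkDB runs universal compaction here, where per-level file targets may be interpreted differently. `TargetFileSize` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint64_t kMiB = 1ULL << 20;
constexpr std::uint64_t kGiB = 1ULL << 30;

// Target SST size for `level` (1-based) under the level-style rule
// target(L) = base * multiplier^(L - 1).
std::uint64_t TargetFileSize(std::uint64_t base, int multiplier, int level) {
    assert(level >= 1 && multiplier >= 1);
    std::uint64_t size = base;
    for (int l = 1; l < level; ++l) {
        size *= static_cast<std::uint64_t>(multiplier);
    }
    return size;
}
```

Under these assumptions RocksDB targets 64 MiB SST files on every level (`TargetFileSize(64 * kMiB, 1, L)`), while the TerarkDB settings give 1 GiB, 5 GiB and 25 GiB on levels 1, 2 and 3.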

For both engines, we disable all write-rate limits:

- `options.level0_slowdown_writes_trigger = 1000;`
- `options.level0_stop_writes_trigger = 1000;`
- `options.soft_pending_compaction_bytes_limit = 2ull<<40;`
- `options.hard_pending_compaction_bytes_limit = 4ull<<40;`
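The four options above are the thresholds at which RocksDB slows down or stops incoming writes. The following is a deliberately simplified C++ model of that gating, written for illustration only; it is not RocksDB's implementation, which also considers other conditions such as the number of unflushed memtables. `WriteLimits`, `WriteState` and `ClassifyWrites` are hypothetical names.

```cpp
#include <cstdint>

// Simplified model of the write-throttling thresholds (NOT RocksDB's code).
struct WriteLimits {
    int level0_slowdown_writes_trigger;
    int level0_stop_writes_trigger;
    std::uint64_t soft_pending_compaction_bytes_limit;
    std::uint64_t hard_pending_compaction_bytes_limit;
};

enum class WriteState { kNormal, kDelayed, kStopped };

WriteState ClassifyWrites(const WriteLimits& lim, int l0_files, std::uint64_t pending_bytes) {
    // Stop conditions are checked first: stopping takes precedence over slowing down.
    if (l0_files >= lim.level0_stop_writes_trigger ||
        pending_bytes >= lim.hard_pending_compaction_bytes_limit) {
        return WriteState::kStopped;
    }
    if (l0_files >= lim.level0_slowdown_writes_trigger ||
        pending_bytes >= lim.soft_pending_compaction_bytes_limit) {
        return WriteState::kDelayed;
    }
    return WriteState::kNormal;
}

// Values used in this benchmark: 2ull<<40 is 2 TiB, 4ull<<40 is 4 TiB.
constexpr WriteLimits kBenchmarkLimits{1000, 1000, 2ULL << 40, 4ULL << 40};
```

For scale, the entire raw dataset (about 554.5 GB) is roughly a quarter of the 2 TiB soft limit, so these settings leave ample headroom before any throttling would kick in.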

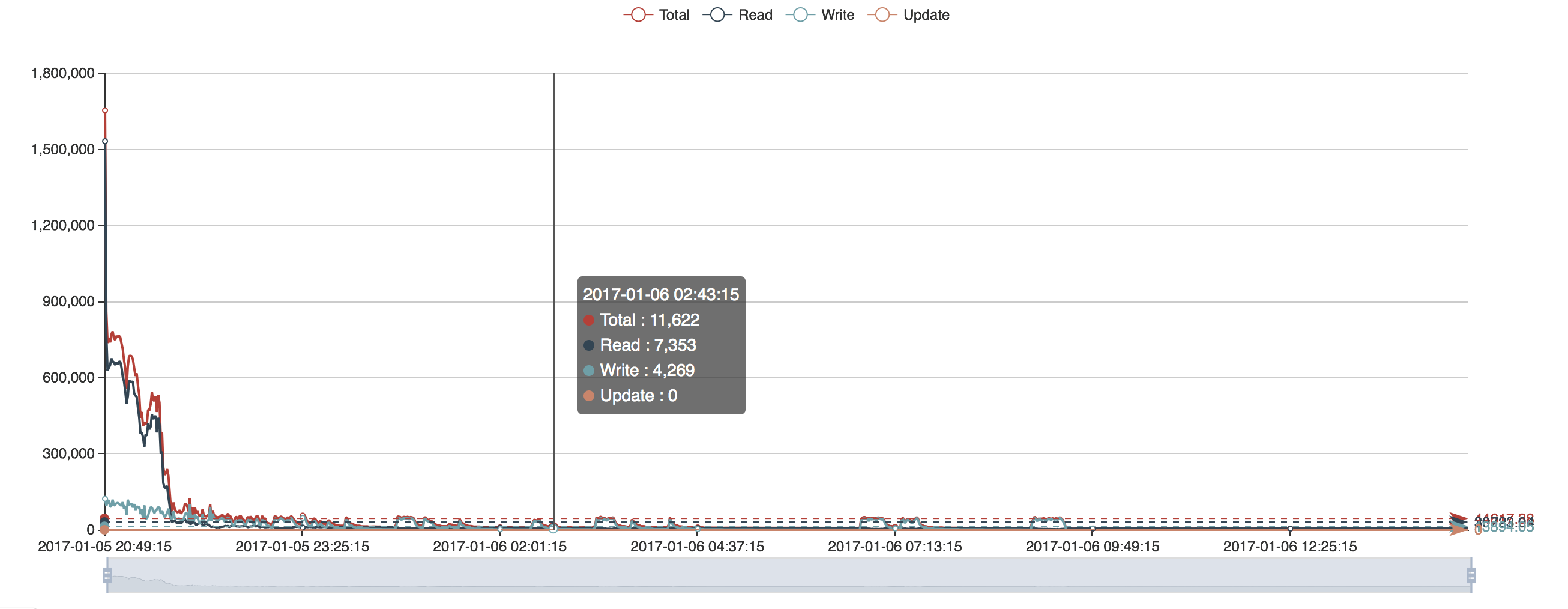

| RocksDB (DB Cache 16GB) | TerarkDB | |

| 0~2 minutes | Read OPS 970K Write OPS 100K (Apply Level Compaction) |

Read OPS 1.92M Write OPS 120K (Sufficient memory) |

| 2~15 minutes | Read OPS 620K Write OPS 96K |

Read OPS 850K Write OPS 100K (Sufficient memory) |

| 15~30 minutes | Read OPS 480K Write OPS 83K (Read and Write decline gradually) |

Read begins to fluctuate sharply, with average around 680K. Write keeps at around 88K, CPU usage close to 100%, IOWait close to 0 (Sufficient memory but compression thread in the background starts to hit the read threads) |

| 30~60 minutes | Read OPS 220K Write OPS 61K |

Read OPS fluctuates with average 330K, Write OPS keeps at 82K, CPU usage starts to drop (Compaction thread hits the read performance) |

| 60~120 minutes | Read 17K Write 39K (Memory runs out, read and write meet bottleneck) |

Read OPS fluctuates with average around 310K. Write OPS keeps at around 90K. |

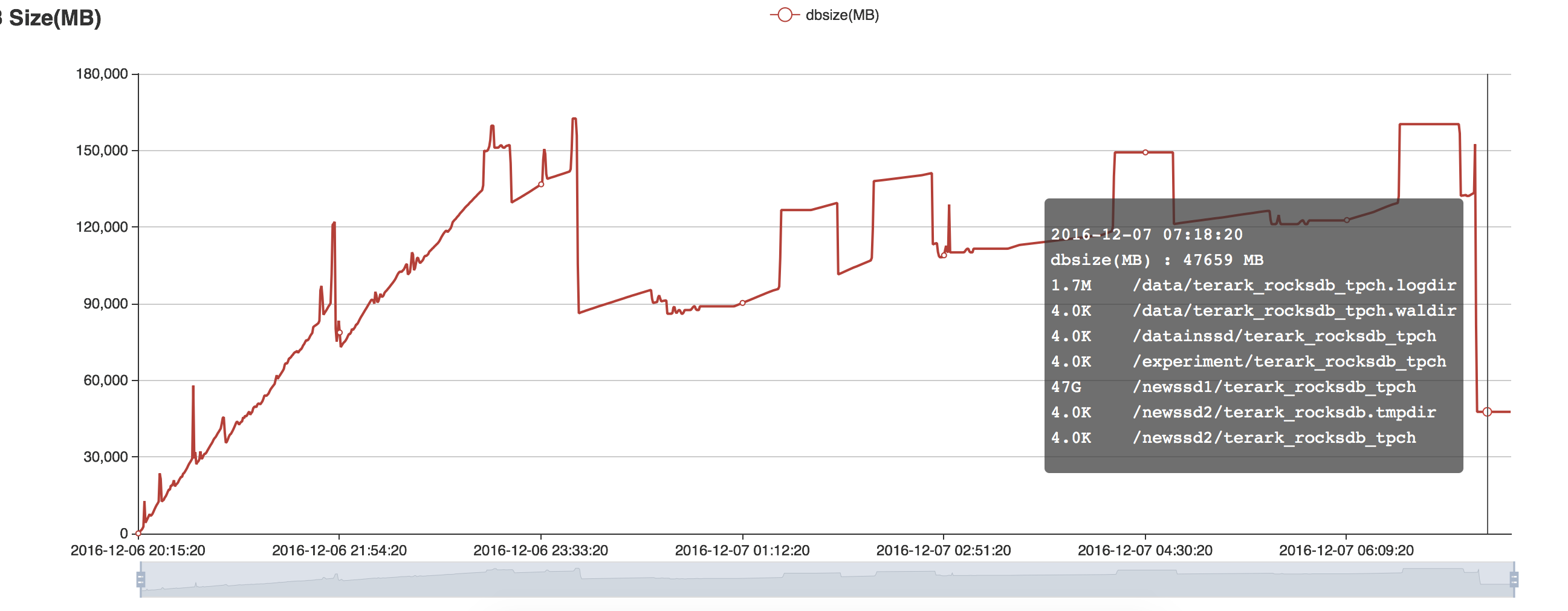

| 3 hours 20 minutes | Read and Write keep dropping | All 550G data completes writing, Read OPS fluctuates between 60 ~ 120K (Compaction thread hits the read performance) |

| 3~11 hours | Read and Write keep dropping | Read OPS keeps at around 170K (Data being compressed gradually, more data can be loaded into memory) |

| 12 hours 40 minutes | Data completes writing, current database size is 234GB (Continues to compress in the background) |

Read OPS keeps growing |

| 18 hours | Compaction completes with final data size 209GB after compression, Read OPS keeps at around 5K | |

| 30 hours | Data compression completes, Read OPS keeps at 2.2M (47G after compression, all data can be loaded into memory.) |
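The final sizes in the table above can be turned into rough compression ratios and compared with the 64 GB of RAM on Server B. This back-of-the-envelope C++ sketch assumes "GB" means 10^9 bytes and ignores everything else that occupies memory (MemTables, caches, the OS); `CompressionRatio` and `FitsInRam` are hypothetical helpers.

```cpp
#include <cassert>

// Raw size of the lineitem dataset reported at the top of this page, in bytes.
constexpr double kRawBytes = 554539419806.0;
// "Memory | 64GB" from the Server B table.
constexpr double kServerRamGB = 64.0;

// ASSUMPTION: GB = 10^9 bytes; with GiB the ratios would be about 7% lower.
double CompressionRatio(double compressed_gb) {
    assert(compressed_gb > 0.0);
    return kRawBytes / (compressed_gb * 1e9);
}

// Naive check that ignores all other memory consumers.
bool FitsInRam(double data_gb) { return data_gb < kServerRamGB; }
```

This gives roughly 2.65× for RocksDB (209 GB) and 11.8× for TerarkDB (47 GB); only the latter is below 64 GB, which matches the observation that TerarkDB could then serve all data from memory.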