
Data cleaning & preprocessing

Basic scheme: https://drive.google.com/file/d/1ooGtgptBMmHt6cuFXs1TAkPeOpHFMQOL/view?usp=sharing

There are two ways to augment the data set. The first is to transform the existing images. The second is to use GANs to produce new images.

- Different styles of transformation:

Light augmentation uses only simple transformations such as flipping; heavier augmentation goes beyond that (see "light and heavier augmentation").

- GAN:

Use a GAN to produce new images similar to the existing ones and feed them to the model.

- Offline augmentation: extend the existing data set. The images are transformed (for example with numpy) and stored.

- Online augmentation (augmentation on the fly): transform the images of each mini-batch as it is drawn. The transformed images are not stored on disk; they exist only within the mini-batch that uses them. Set a random seed to make the augmentation reproducible.

- Use traditional transformations such as flipping.
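The difference between offline and online augmentation can be sketched with numpy. This is a minimal illustration, not the project's actual pipeline: the function names, the toy data set, and the flip probability of 0.5 are all assumptions made for the example.

```python
import numpy as np


def flip_horizontal(images):
    """Mirror a batch of images left-to-right; expected shape is (N, H, W)."""
    return images[:, :, ::-1]


def augment_offline(images):
    """Offline: build an enlarged data set (originals plus flipped copies)."""
    return np.concatenate([images, flip_horizontal(images)], axis=0)


def iterate_online(images, batch_size, rng):
    """Online: yield mini-batches, flipping each image with probability 0.5.

    Nothing is stored; the transformed images exist only inside the batch.
    """
    order = rng.permutation(len(images))
    for start in range(0, len(images), batch_size):
        # Fancy indexing returns a copy, so the source array is not modified.
        batch = images[order[start:start + batch_size]]
        flip = rng.random(len(batch)) < 0.5
        batch[flip] = batch[flip][:, :, ::-1]
        yield batch


# Hypothetical stand-in for a data set of eight 4x4 grayscale images.
images = np.arange(8 * 4 * 4, dtype=np.float32).reshape(8, 4, 4)

offline = augment_offline(images)        # twice as many images as before
rng = np.random.default_rng(seed=0)      # fixed seed for reproducibility
online_batches = list(iterate_online(images, batch_size=4, rng=rng))
```

Creating the generator again with the same seed reproduces the same batches, which is the point of setting the seed for online augmentation.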

- Data augmentation (medium) introduction

  - "The first option is known as offline augmentation. This method is preferred for relatively smaller datasets, as you would end up increasing the size of the dataset by a factor equal to the number of transformations you perform (For example, by flipping all my images, I would increase the size of my dataset by a factor of 2). The second option is known as online augmentation, or augmentation on the fly. This method is preferred for larger datasets, as you can’t afford the explosive increase in size. Instead, you would perform transformations on the mini-batches that you would feed to your model. Some machine learning frameworks have support for online augmentation, which can be accelerated on the GPU."

- light and heavier augmentation

  - "In this paper we build upon recent research that suggests that explicit regularization may not be as important as widely believed and carry out an ablation study that concludes that weight decay and dropout may not be necessary for object recognition if enough data augmentation is introduced."

- Using GANs to generate new data for x-ray

  - "However, standard augmentation methods that produce new examples of data by varying lighting, field of view, and spatial rigid transformations do not capture the biological variance of medical imaging data and could result in unrealistic images. Generative adversarial networks (GANs) provide an avenue to understand the underlying structure of image data which can then be utilized to generate new realistic samples."

  - Used as input for a CNN

- Using GANs to improve CNN classification

  - "The classification performance using only classic data augmentation yielded 78.6% sensitivity and 88.4% specificity. By adding the synthetic data augmentation the results increased to 85.7% sensitivity and 92.4% specificity."

-

- "In this work, we employ various data augmentation techniques that address X-ray-specific image characteristics and evaluate them on lateral projections of the femur bone. We combine those with data and feature normalization strategies that could prove beneficial to this domain. We show that instance normalization is a viable alternative to batch normalization and demonstrate that contrast scaling and the overlay of surgical tools and implants in the image domain can boost the representational capacity of available image data."

- Data augmentation techniques II

  - "This work compares augmentation strategies and shows that the extent to which an augmented training set retains properties of the original medical images determines model performance. Specifically, augmentation strategies such as flips and gaussian filters lead to validation accuracies of 84% and 88%, respectively. On the other hand, a less effective strategy such as adding noise leads to a significantly worse validation accuracy of 66%. Finally, we show that the augmentation affects mass generation."

  - CNN

- DOPING: Generative Data Augmentation for Unsupervised Anomaly Detection with GAN:

  - "Show that our oversampling pipeline is a unified one: it is generally applicable to datasets with different complex data distributions. To the best of our knowledge, our method is the first data augmentation technique focused on improving performance in unsupervised anomaly detection."

Image Augmentation Examples in Python: Medium. Numpy

Types of Data Augmentation: MXNet

DATA AUGMENTATION TECHNIQUES AND PITFALLS FOR SMALL DATASETS

Building powerful image classification models using very little data: Keras

Data augmentation in PyTorch: Forum

Data augmentation : boost your image dataset with few lines of Python: skimage

Data Augmentation for Computer Vision with PyTorch (Part 1: Image Classification)

Data Augmentation and Sampling for Pytorch

X-ray images are cropped during preprocessing using the OpenCV library.

Rectangular shapes are found with the OpenCV function findContours.

- Skewed rectangles are found and accepted by the algorithm as well.

- If a rectangle is skewed or part of the shape lies outside the image, the minimal-area rectangle around the shape is used for cropping.

- When a large part of a rectangle lies outside the image frame, the rectangle is difficult to detect.

Shape detection tutorial

Cropping found shape

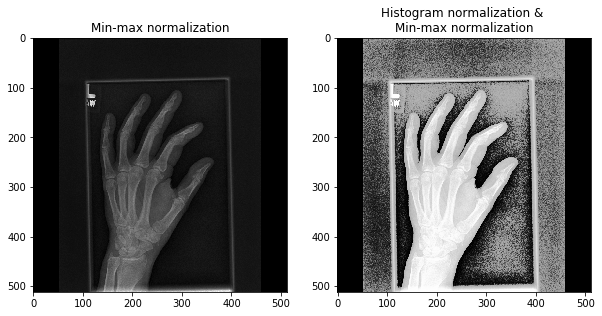

Right before being fed to the model, image batches are normalized as follows:

- Histogram equalisation (HE). After histogram equalisation, the values in the image are on the scale [0, 255]. With HE, the model is observed to train faster.

- Min-max normalisation, which scales all values to the [0, 1] range.
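The two normalisation steps can be sketched in plain numpy. This is a minimal illustration and not necessarily how the project implements them; the function names and the random low-contrast test image are assumptions made for the example.

```python
import numpy as np


def equalize_histogram(image):
    """Histogram-equalise a uint8 grayscale image; output stays in [0, 255]."""
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]             # CDF at the darkest occupied level
    denom = max(cdf[-1] - cdf_min, 1)     # avoid dividing by zero
    lookup = np.round((cdf - cdf_min) / denom * 255.0)
    lookup = np.clip(lookup, 0, 255).astype(np.uint8)
    return lookup[image]                  # map every pixel through the table


def min_max_normalize(image):
    """Scale values linearly to the [0, 1] range."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)       # constant image: nothing to scale
    return (image - lo) / (hi - lo)


# Hypothetical low-contrast image with grey levels between 100 and 150.
rng = np.random.default_rng(seed=0)
image = rng.integers(100, 151, size=(32, 32), dtype=np.uint8)

equalized = equalize_histogram(image)     # spread over the full [0, 255] scale
normalized = min_max_normalize(equalized) # float values in [0, 1]
```

Applying the steps in this order matches the list above: equalisation stretches the contrast on the integer scale, and min-max scaling then brings the values into the range the model receives.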

Result will look like:

References: http://cs231n.github.io/neural-networks-2/#datapre, https://en.wikipedia.org/wiki/Histogram_equalization#Full-sized_image