
Compose recording videos #384

Comments

|

Encoding: At minimum, we want to combine each participant's video with their audio file. Potentially, we also want to combine all 3 audio/video files into one file so that they play at the same time. |

|

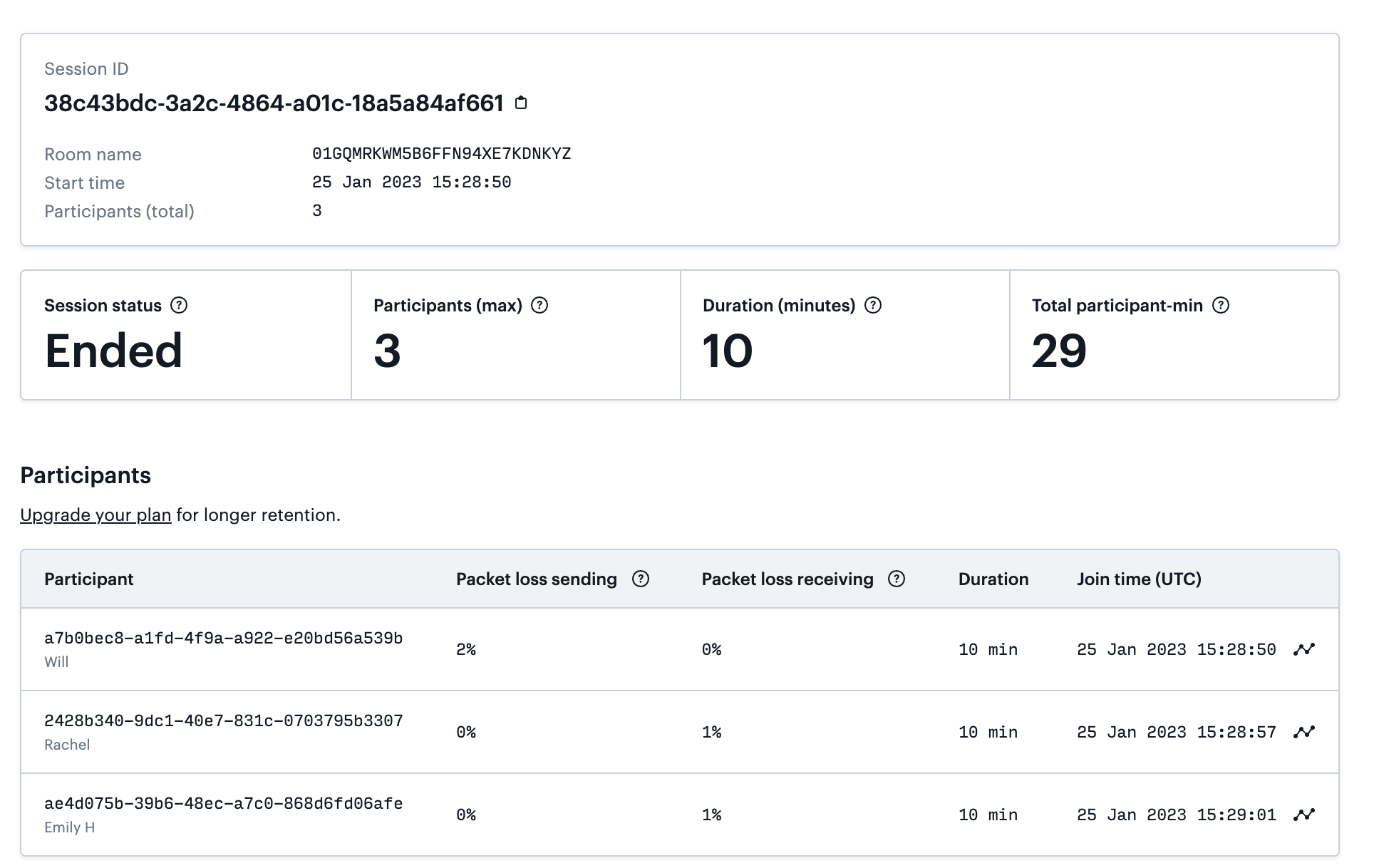

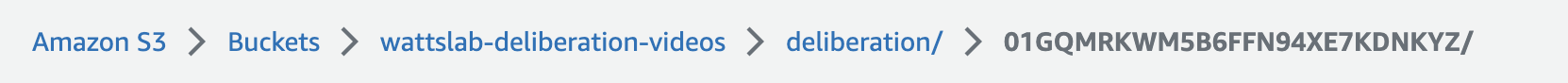

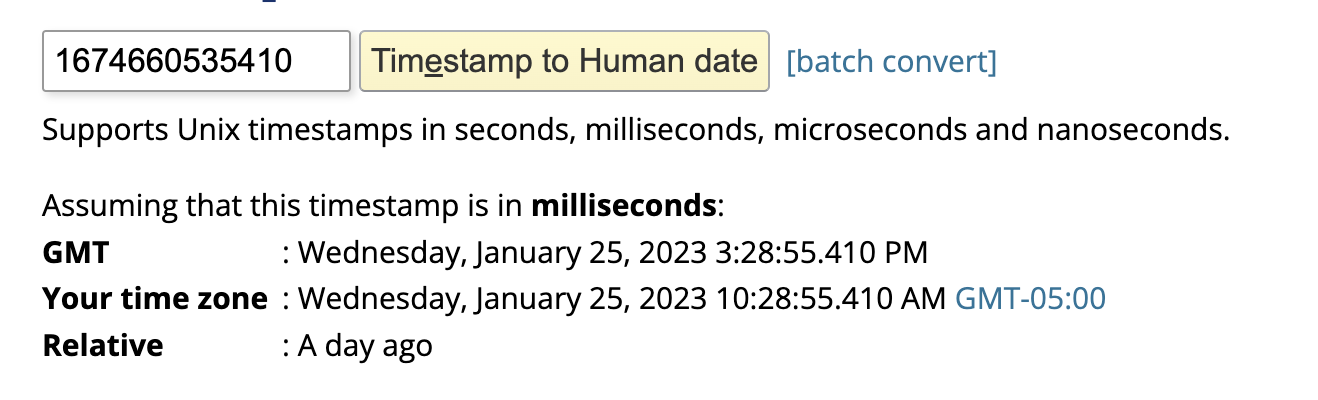

This is the participant data from the recordings I sent you: It looks like the files themselves are in a directory that matches the room name: Filenames seem to have 4 parts:

I would guess that if we tried syncing videos based on the last component of the filename, that may be enough. Not entirely sure, so we should test it. |
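
A minimal sketch of how that test could start, assuming filenames follow the layout of the one example URL that appears later in this thread (`<digits>-<uuid>-<track kind>-<digits>`) and assuming the last component is a start time in epoch milliseconds. Both are guesses that need checking against the real files. `parse_filename` and `start_offsets` are hypothetical helper names.

```python
import re
from typing import NamedTuple

# Assumed layout, inferred from a single example filename in this thread:
#   <digits>-<uuid>-<track kind>-<digits>
FILENAME_RE = re.compile(
    r"^(?P<first>\d+)-"
    r"(?P<uuid>[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})-"
    r"(?P<kind>.+)-"
    r"(?P<last>\d+)$"
)


class Recording(NamedTuple):
    first: int  # leading numeric component (meaning not confirmed)
    uuid: str
    kind: str   # e.g. "cam-video"
    last: int   # trailing numeric component (assumed: start time, epoch ms)


def parse_filename(name: str) -> Recording:
    """Split a recording filename into its four assumed components."""
    match = FILENAME_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized recording filename: {name!r}")
    return Recording(
        int(match["first"]), match["uuid"], match["kind"], int(match["last"])
    )


def start_offsets(names: list[str]) -> dict[str, float]:
    """Seconds each file starts after the earliest one, based on `last`."""
    parsed = {name: parse_filename(name) for name in names}
    earliest = min(rec.last for rec in parsed.values())
    return {name: (rec.last - earliest) / 1000 for name, rec in parsed.items()}
```

If the offsets come out near zero for files from the same room, the last component is probably not a per-track start time and a different sync signal is needed.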

|

Displaying multiple video tracks simultaneously: Here is an example of a player that has a separate video track for sign language: https://ableplayer.github.io/ableplayer/demos/video5.html |

|

Playing back videos as recorded from S3 kinda works: we can tell all the videos to skip to a particular time, and they all start playing in sync. The problem is that they don't stay in sync, so we need to resynchronize them periodically. https://bocoup.com/blog/html5-video-synchronizing-playback-of-two-videos |
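
The periodic resync decision can be sketched as a small pure function. This is only the logic, written in Python to match the script later in this thread; in the browser the same check would run in JS on a timer or a `timeupdate` handler. The name `plan_resync` and the 0.1 second tolerance are assumptions, not measured values.

```python
def plan_resync(
    reference_time: float,
    follower_times: dict[str, float],
    tolerance: float = 0.1,  # assumed threshold in seconds; tune by testing
) -> dict[str, float]:
    """Return {player id: new position} for players that drifted too far.

    Players within `tolerance` of the reference are left alone, so that
    small differences do not trigger constant seeking.
    """
    return {
        player: reference_time
        for player, current in follower_times.items()
        if abs(current - reference_time) > tolerance
    }
```

Using one video as the reference and only correcting the others avoids the players chasing each other.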

|

Using ffmpeg seems like the best way to combine things into one file. Useful links: Command: Notes: |

|

I put together a little demo app to explore syncing multiple video streams (at this commit: https://github.com/Watts-Lab/deliberation-video-coding/tree/db3b4952252d04816a96e7a7a4b255b9f4e4cce3). All I did was use HTML5 video tags with hardcoded URLs: ...

    <video
      id="myVideo8"
      preload="auto"
      ref={videoElement1}
      width="250"
      controls
    >
      <source
        src="https://wattslab-video-test-public.s3.amazonaws.com/01GR9ED3G57XJBBA77068BNH90/1675354384929-de8e880d-3a37-4149-baba-b3f1aa77cee1-cam-video-1675354437728"
        type="video/webm"
      />
    </video>

...and attached a React ref to each video element:

    const videoElement1 = useRef(null);
    const videoElement2 = useRef(null);
    const videoElement3 = useRef(null);

...Then I could use the refs to start, pause, or seek the videos:

    const seekTo = (time) => {
      console.log(`seeking to ${time}`);
      videoElement1.current.currentTime = time;
      videoElement2.current.currentTime = time;
      videoElement3.current.currentTime = time;
      ...
    };

    const startVideos = () => {
      console.log(`starting videos`);
      videoElement1.current.play();
      ...
    };

    const pauseVideos = () => {
      console.log(`pausing videos`);
      videoElement1.current.pause();
      ...
    };

This worked surprisingly well for seeking to times that all videos had in common. The files we have seem to be synchronized so that the "time" you seek to in the video file is the same for all videos, i.e., if you seek to 15 seconds, you get all the videos synced at 15 seconds. There are a few pieces that didn't work first try, however:

We should be able to fix both 1 and 2 with some js in the browser, but it may make sense also to give the videos pre/post padding as needed so that we never try to seek to a part of the conversation that exists in one video but not another. (Assuming we can do this without breaking the timings). |
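
To make the padding idea concrete, here is a rough sketch that computes, for each video, how much pre/post padding would be needed and which time window is safe to seek in. It assumes we already know each video's start and end on a shared timeline (in seconds); how to obtain those values is not settled in this thread. `Clip`, `common_window`, and `padding_needed` are hypothetical names.

```python
from typing import NamedTuple, Optional


class Clip(NamedTuple):
    start: float  # seconds on the shared timeline (assumed known)
    end: float


def common_window(clips: dict[str, Clip]) -> Optional[tuple[float, float]]:
    """Time range present in every clip, or None if they never all overlap."""
    start = max(clip.start for clip in clips.values())
    end = min(clip.end for clip in clips.values())
    return (start, end) if start < end else None


def padding_needed(clips: dict[str, Clip]) -> dict[str, tuple[float, float]]:
    """(pre, post) padding in seconds so every clip spans the full range."""
    first = min(clip.start for clip in clips.values())
    last = max(clip.end for clip in clips.values())
    return {
        name: (clip.start - first, last - clip.end)
        for name, clip in clips.items()
    }
```

Without padding, clamping seeks to `common_window` in the browser would give the same guarantee at the cost of hiding the parts of the conversation that only some videos cover.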

|

This was in an update email from Daily today: https://docs.daily.co/reference/vcs Does it look useful, @kepstein23? |

|

Wrote a Python script to merge audio and video files, mostly to share with collaborators while we're getting our own system set up.

    import glob
    import json
    import os
    import subprocess

    filename = "../scienceData/batch_study_2_20230302_prolific_01GTHMZ6YH8X4S5WEMEC06FK77.jsonl"
    with open(filename) as f:
        entries = [json.loads(line) for line in f if line.strip()]

    # glob does not expand "~", so expand it up front.
    basepath = os.path.expanduser("~/Desktop/DeliberationRecordings")
    outputpath = os.path.expanduser("~/Desktop/FormattedDeliberationRecordings")

    i = 0
    for entry in entries:
        # Skip entries that have no recordings attached.
        if "recordingRoomName" not in entry or not entry.get("recordingIds"):
            continue
        room_name = entry["recordingRoomName"]
        for recording_id in entry["recordingIds"]:
            files = glob.glob(f"{basepath}/{room_name}/*{recording_id}*")
            # Expect exactly one video file and one audio file per recording.
            if len(files) != 2:
                continue
            videofile = [file for file in files if "video" in file][0]
            audiofile = [file for file in files if "audio" in file][0]
            # Mirror the room directory under outputpath. (str.strip() removes
            # characters, not a prefix, so use relpath instead.)
            relpath = os.path.relpath(videofile, basepath)
            outfile = os.path.join(
                outputpath, relpath.replace("cam-video", "merged") + f"_{i}.mp4"
            )
            newdir = os.path.dirname(outfile)
            print("video:", videofile)
            print("audio:", audiofile)
            print("outfile", outfile)
            print("newDir", newdir)
            print("")
            os.makedirs(newdir, exist_ok=True)
            # Take the video stream from the first input and audio from the second.
            subprocess.run(
                ["ffmpeg", "-i", videofile, "-i", audiofile,
                 "-map", "0:v", "-map", "1:a", outfile]
            )
            i += 1

|

I think we decided not to do this for now, and just do real-time stitching in the interface. |

Encoding

Apparently, you can save a number of video and audio tracks to the same MP4 file:

https://video.stackexchange.com/questions/20933/can-video-have-multiple-streams-like-it-has-2-audio-streams
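
As a rough sketch of what that could look like with ffmpeg, the helper below builds a command that maps every stream from every input into one output file. It uses only `-i` and `-map`, the same options as the merge script elsewhere in this thread. `multitrack_command` and the file names are hypothetical, and whether a given player will expose more than the first video track is something we would still need to test.

```python
def multitrack_command(inputs: list[str], output: str) -> list[str]:
    """Build an ffmpeg argument list that keeps all streams of all inputs."""
    cmd = ["ffmpeg"]
    for path in inputs:
        cmd += ["-i", path]
    # "-map N" selects every stream from input N; without explicit maps,
    # ffmpeg would keep only one video and one audio stream.
    for index in range(len(inputs)):
        cmd += ["-map", str(index)]
    cmd.append(output)
    return cmd
```

The list can be passed to `subprocess.run(cmd, check=True)`. No codec options are set here, so ffmpeg will re-encode using its defaults for the output container.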

Streaming

Seems we can also stream directly from the S3 bucket (will probably need to give the player some sort of access token?)

https://stackoverflow.com/a/42176752/6361632

Playback

Option 1:

Use a player that can support displaying multiple channels and/or switching between them.

video.js seems like it may be able to do this: https://videojs.com/guides/video-tracks/, although I don't fully understand what the docs mean.

Option 2: use the HTML5 standard:

Option 3: masking

We could have a different audio track for each participant, and then "mask" parts of the screen if we don't want to show the whole thing.
