About the optimizer and scheduler #5

Comments

The Line 44 in 1970e8d

Have a look at it, please :)

Thanks for your quick reply. My question is that no code seems to use the function "CustomCosineAnnealingWarmupRestarts". Is it used in some special way by pytorch_lightning?

Oh, I guess I misunderstood your question. swin-transformer-ocr/models.py Line 57 in 1970e8d

Here you can find the usage of It depends on what you wrote in your

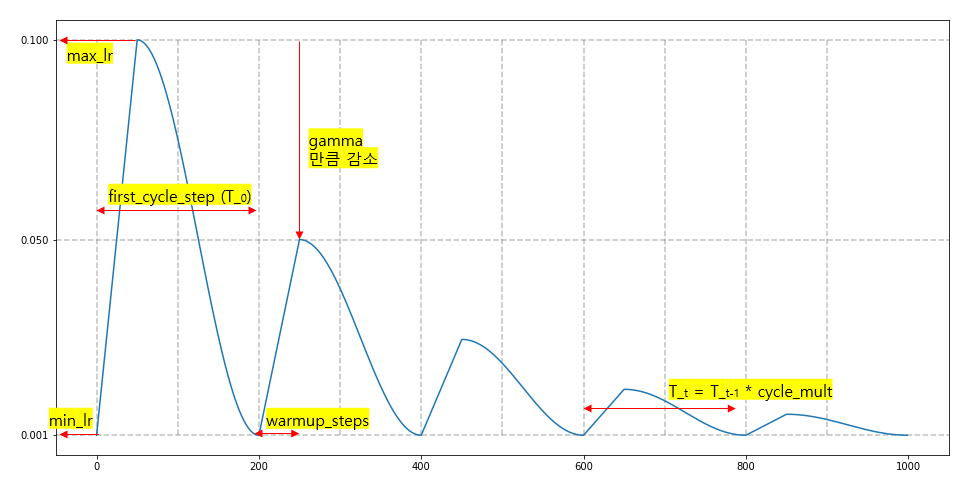

As you can check, this image shows how the learning rate moves with

I wrote a tech post on my blog. Though it's in Korean, you can use browser translation in Chrome or Edge :) Hope this helps.
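For readers who can't see the image, here is a rough sketch of the general shape such a schedule produces: a linear warmup followed by cosine decay, repeated every cycle. This is not the repository's `CustomCosineAnnealingWarmupRestarts`; the function name `warmup_cosine_lr` and every parameter value below are assumptions made up for illustration.

```python
import math


def warmup_cosine_lr(step, base_lr=1e-3, min_lr=1e-6,
                     warmup_steps=100, cycle_steps=1000):
    """Hypothetical helper: learning rate at `step` for cosine annealing
    with linear warmup and periodic restarts (all defaults are assumed)."""
    pos = step % cycle_steps  # position inside the current cycle
    if pos < warmup_steps:
        # Linear warmup from min_lr up to base_lr.
        return min_lr + (base_lr - min_lr) * pos / warmup_steps
    # Cosine decay from base_lr back down to min_lr for the rest of the cycle.
    progress = (pos - warmup_steps) / (cycle_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


if __name__ == "__main__":
    # Print a few points to see the rise, the decay, and the restart.
    for s in (0, 50, 100, 550, 999, 1000):
        print(s, warmup_cosine_lr(s))
```

The rate climbs during the first `warmup_steps` steps, decays along a half cosine, and jumps back to the start of the warmup when a new cycle begins.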

I got it, thanks again!

There seems to be no code that calls the function "CustomCosineAnnealingWarmupRestarts". Are the optimizer and scheduler you used AdamW and cosine annealing?

Thanks!