Week 2 lecture notes

- Learn word embeddings from large text corpus. (1-100B words)

- Transfer embedding to new task with smaller training set (maybe 100k words)

- Continue to fine-tunethe word embeddings th new data (Optional)

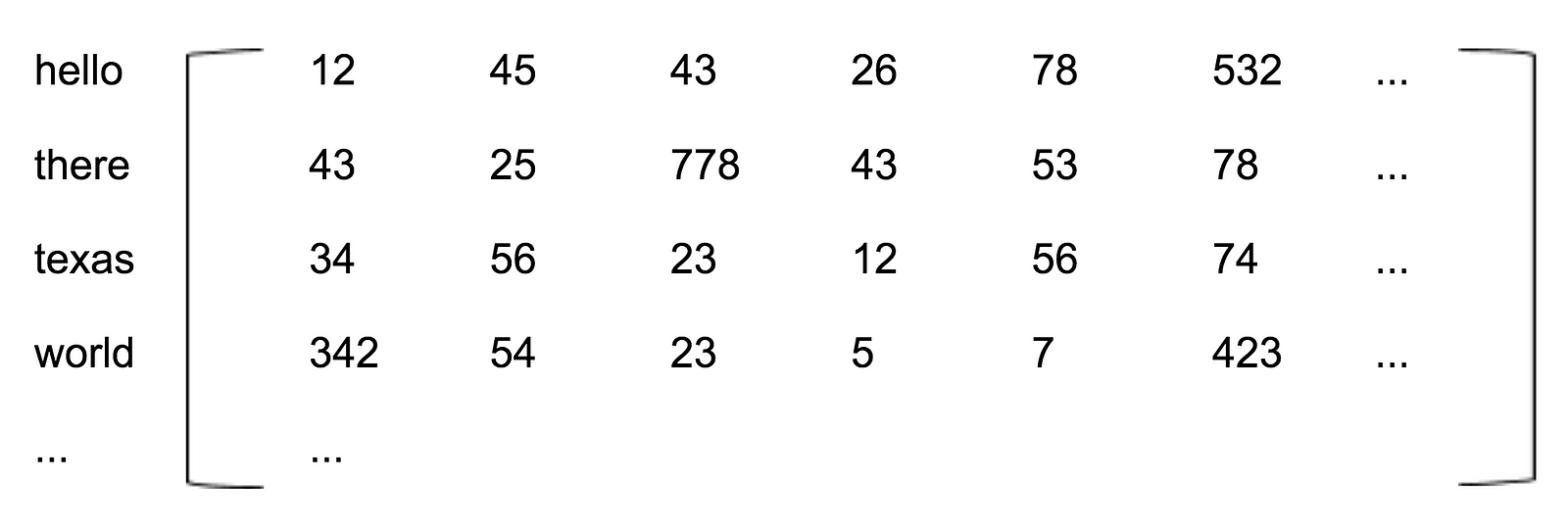

Image Source: "Embeddings: A Matrix of Meaning"

Initialize

$E$ randomly and you're straight in the sense to learn all the parameters of this 300 by 10,000 dimensional matrix and$E$ times this one-hot vector gives you the embedding vector.

The primary problem of this model is computational speed, solutions to this porblem can be

- hierarchical softmax, e.g. Hierarchical Softmax

How do we sample

One thing you could do is just sample uniformly, at random, from your training corpus. When we do that, you find that there are some words that appear extremely frequently. And so, if you do that, you find that in your context to target mapping pairs just get these these types of words extremely frequently, whereas there are other words like orange, apple, and also durian that don't appear that often.

It is suggest to choose

The goal of GloVe model is to minimize

where

-

$N$ stands for # of words in the corpus which equals$10,000$ in the course video -

$i$ stands for$t$ which is target,$\theta_{t}^{T}$ -

$j$ stands for$c$ which is context$e_{c}$ -

$f(X_{ij})$ is a weighting term,$f(X_{ij}) = 0$ if$X_{ij} = 0$

[1] Adam Schwab from Petuum, Inc., "Embeddings: A Matrix of Meaning"

[2] Sebastian Ruder, Part 2: Approximating the Softmax On word embeddings - Hierarchical Softmax