-

Notifications

You must be signed in to change notification settings - Fork 885

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

self hosted runner is not accepting jobs from queue. #592

Comments

|

EDIT: In our case, it turned out to be a misconfiguration on our side. As soon as the runner exited, we rebooted the machine, which caused the update to fail. I'll leave this comment here in case it helps someone else regardless. Original:

|

|

We are seeing the same issue, our setup has two jobs which first one is scaling up the runner if needed and wait for it, then second job is running the actual pipeline, but some time the second job doesn't start when the runner state is Edit: Adding 60s sleep time at the end of the first job and combining with |

|

We are having the same issue. If jobs are queued when all runners are |

|

+1, we have this issue as well, we have an offline runner for each repo, then schedule the most recent runner version when we detect a workflow. |

|

It behaves as if the workflows/runs are scheduled onto specified runners when they are created/triggered, so that they wouldn't get rescheduled when new runners are added. |

|

Having this issue right now. I have idle workers "listening for jobs" and nothing happens. Workflows are not started. I tried cancelling and restarting but it doesn't seem to help. I started to see this today. Anyone else experiencing this at the moment? |

|

Same for us. Restarting didn't do anything, but I manually downloaded actions-runner-linux-x64-2.273.1.tar.gz and untar'd right on top of the existing install and then restarted the daemon. That got jobs flowing again. I have a feeling the updater was having issues updating from 2.273.0 -> 2.273.1 and the server side was not placing jobs on runners running a previous version. This has happened to me quite a number of times in various ways since I've started using github actions (5 or 6?) where the runner tried to pick up a new release and jobs stopped running because of that and needed manual intervention. |

|

It's back! 2.273.1 -> 2.273.2 auto update killed our runner. Upon manual restart, the instance is sitting around idle, yet jobs are waiting to be picked up. Manual untar of 2.273.2 right on top of my current install + service restart fixed it again. This is the cause of the runner dying in the first place (I've seen this 3 or 4 times): Is there a reason /dev/null isn't being used in _update.sh? Is this to be platform agnostic? |

|

We’re aware of the current issue where runners added after queuing a run will not pick up the jobs if the added runner is not the latest version. We’re rolling out the fix and will update the issue again once it’s deployed everywhere. In meantime, you can unblock yourselves by adding the latest runner every time. |

|

Is that all right now ? My Workflow also hang in queued. My Self-host version is : |

|

Fix has been deployed. And new runners (with older versions) should be able to pick the jobs that are queued before adding them. |

|

@lanen It seems like a different issue. Did you check on the UI, if the runner exists or it's deleted? |

|

@lokesh755 i am still facing same issue (runner version : v2.273.5) runners didn't pick the jobs that are queued before adding them. |

|

Same happens to me with the last verison 2.276.1, I have been able to solve adding a delay in the creation of a fargate that runs the runner, but something happens when 2 jobs fire to close and one of them is missed |

|

Have a similar issue specifically with the organization setup. |

|

@PavelSusloparov Could you provide your org and repo details? |

|

Same issue here with |

|

We also had the same issue running version 2.277.1 on Ubuntu ( |

|

I have self-hosted runner on ARM machine and in about 50% of cases it does not pick up the job, no matter if it is queued or not. Cancelling the run and starting again makes it pick up the job. It is very annoying that I have to cancel and re-run all jobs almost for every commit because of this issue. Runner version |

|

Hi @lokesh755 it is still valid issue on but at the same time new 2 runners were spin up. |

|

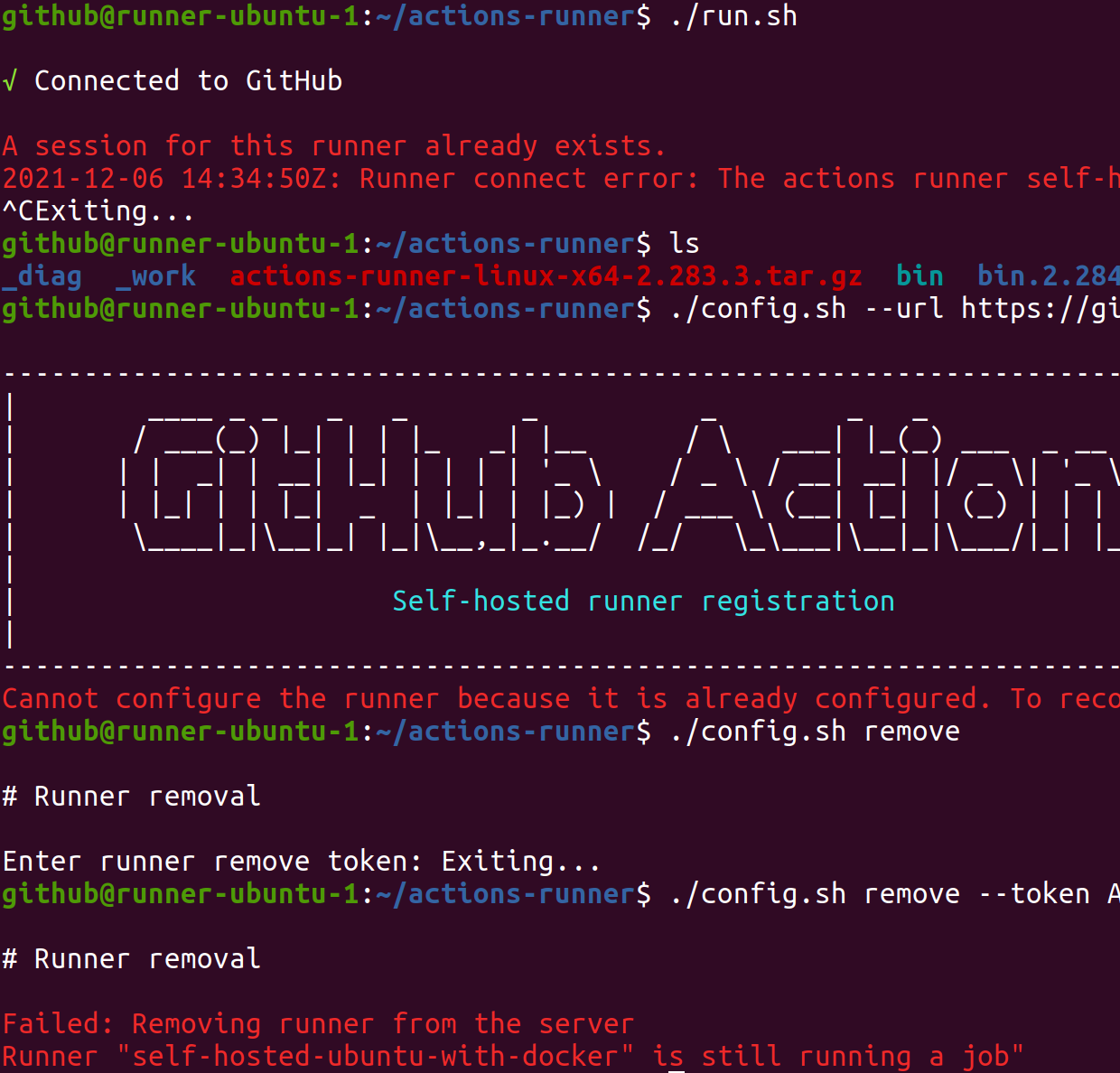

I've just re-created the runner. First problem is that it does not pick up the job: Second problem is that I am not able to delete the old offline runner from the web-ui: |

|

Because of this issue I nowadays don't use official github's runner for self-hosted machines. I switched to using this alternative runner and it works perfectly for me. It can even be installed via Debian package. |

|

We had the same problem. The solution was very obscure. A support person asked me "did you recently change this repo from private to public?" -- yes, i did... why would that matter? There is a security setting in your runner group (something i never configured) which, by default, prevents a self-hosted runner in that group from picking up jobs from public repos. Change it to suit your needs, heeding the warning. |

Describe the bug

Self hosted idle runner is not consuming queued jobs (runner version 263, release version).

To Reproduce

Steps to reproduce the behavior:

This was working perfectly for a few months, but got recently broeken.

Expected behavior

Runner in the idle status should consume jobs (labels are matching).

Runner Version and Platform

GitHub cloud + runner version 263

OS of the machine running the runner? OSX/Windows/Linux/...

What's not working?

No error message, see behaviror above.

Job Log Output

If applicable, include the relevant part of the job / step log output here. All sensitive information should already be masked out, but please double-check before pasting here.

Runner and Worker's Diagnostic Logs

n/a

reference: our setup is available here: https://github.com/philips-labs/terraform-aws-github-runner

The text was updated successfully, but these errors were encountered: