
Some questions #1

Comments

Good luck.

    import numpy as np

    # Generic 3D face model points used for head pose estimation
    model_points = np.array([
        (0.0, 0.0, 0.0),        # Nose tip
        (0, -63.6, -12.5),      # Chin
        (-43.3, 32.7, -26),     # Left eye, left corner
        (43.3, 32.7, -26),      # Right eye, right corner
        (-28.9, -28.9, -24.1),  # Left mouth corner
        (28.9, -28.9, -24.1)    # Right mouth corner
    ])

Take the chin, for example: its ratio between y and z is 63.6 / 12.5 = 5.088.


The proportions of a single point can change if you use a different model. The important part is that all the proportions change in the same way. Try your own points: start with only the head pose calculation and continue from there.
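As a small check of the arithmetic discussed here, the sketch below computes the chin's y/z ratio and shows that a uniform rescale of the model (for example, a change of units) leaves that ratio unchanged. This is only an illustration of one reading of the reply; the helper names `yz_ratio` and `scale` are hypothetical and not part of the repository.

```python
import math

# Chin point from the model_points array quoted in this thread.
chin = (0.0, -63.6, -12.5)


def yz_ratio(point):
    """Return the ratio y / z of a 3D point (hypothetical helper)."""
    _, y, z = point
    return y / z


def scale(point, factor):
    """Scale every coordinate of a point by the same factor (hypothetical helper)."""
    return tuple(factor * c for c in point)


original_ratio = yz_ratio(chin)            # 63.6 / 12.5
scaled_ratio = yz_ratio(scale(chin, 2.0))  # same ratio after uniform scaling

# A uniform scale changes the size of the model but not its proportions.
ratios_match = math.isclose(original_ratio, scaled_ratio)
print(original_ratio, scaled_ratio, ratios_match)
```

If another face model gives a different y/z ratio for the chin, the difference comes from the model's geometry rather than from its units.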

Hi, @amitt1236, your implementation of gaze estimation is the most accurate I have seen so far! After studying the code, I have some questions:

I ran the code on my computer and recorded the output in the video below:

https://streamja.com/49vwG

As shown in the video, the thicker red line jitters a lot. Do you apply any smoothing strategy to the gaze point?
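For reference, one common way to reduce this kind of jitter is an exponential moving average over successive gaze points. The sketch below is a generic technique, not something confirmed to be in the repository; the class name `GazeSmoother` and the `alpha` parameter are assumptions made for illustration.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


class GazeSmoother:
    """Exponential moving average over successive 2D gaze points (hypothetical helper)."""

    def __init__(self, alpha: float = 0.3) -> None:
        # alpha close to 1 follows the raw signal; alpha close to 0 smooths heavily.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Optional[Point] = None

    def update(self, point: Point) -> Point:
        """Blend the new raw point into the running estimate and return it."""
        if self._state is None:
            # The first sample initializes the filter.
            self._state = (float(point[0]), float(point[1]))
        else:
            a = self.alpha
            self._state = (
                a * point[0] + (1.0 - a) * self._state[0],
                a * point[1] + (1.0 - a) * self._state[1],
            )
        return self._state


# Usage: feed one raw gaze point per video frame.
smoother = GazeSmoother(alpha=0.5)
raw_points = [(100.0, 100.0), (110.0, 90.0), (90.0, 110.0)]
smoothed = [smoother.update(p) for p in raw_points]
print(smoothed)
```

A smaller `alpha` gives a steadier line at the cost of more lag behind real eye movement, so the value would need tuning per camera and frame rate.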

Your code have provided some standard face 3d modol points like

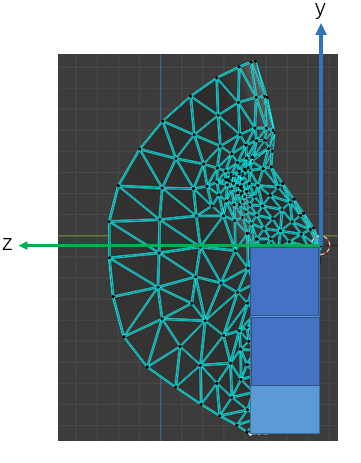

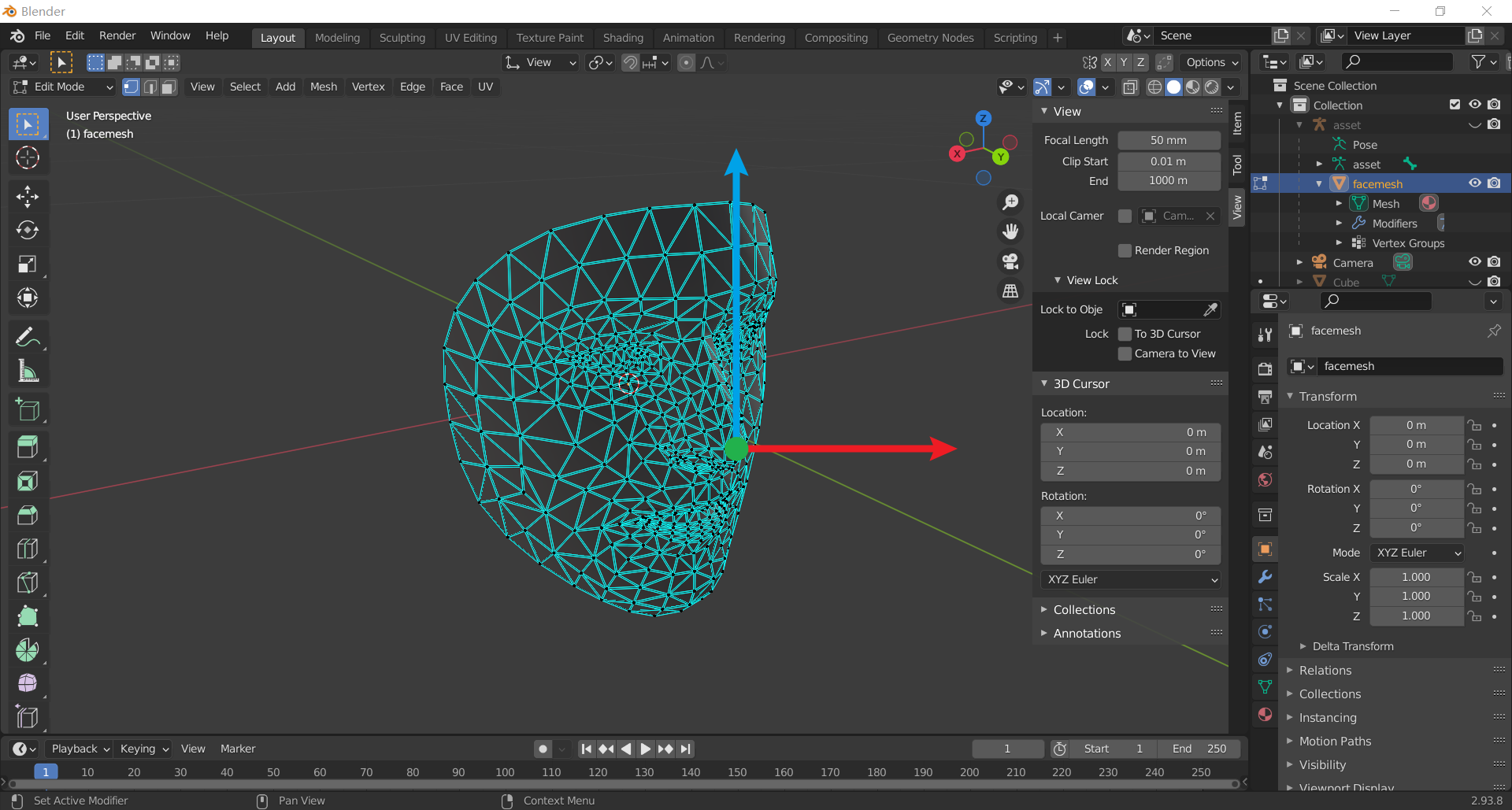

model_pointsandEye_ball_center_left, how do you get these coordinates ? I am thinking if I change the coordinate system like rotate along z axis:(Actually this is the first time I use blender)

How can get the new coordiantes of these points ?
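For the rotation case, a minimal sketch of the geometry: rotating the coordinate data about the z axis amounts to applying a standard 2D rotation to each point's x and y while leaving z unchanged. The function name `rotate_about_z` is hypothetical, and the sign convention (counter-clockwise for positive angles, right-handed axes) is an assumption that may need flipping to match what Blender shows.

```python
import math

# Model points quoted in this thread (x, y, z).
model_points = [
    (0.0, 0.0, 0.0),        # Nose tip
    (0.0, -63.6, -12.5),    # Chin
    (-43.3, 32.7, -26.0),   # Left eye, left corner
    (43.3, 32.7, -26.0),    # Right eye, right corner
    (-28.9, -28.9, -24.1),  # Left mouth corner
    (28.9, -28.9, -24.1),   # Right mouth corner
]


def rotate_about_z(points, angle_deg):
    """Rotate 3D points about the z axis by angle_deg (hypothetical helper).

    Assumes a right-handed frame with positive angles counter-clockwise
    when looking down the +z axis toward the origin.
    """
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


# Example: a 180 degree turn negates x and y and keeps z.
rotated = rotate_about_z(model_points, 180.0)
for before, after in zip(model_points, rotated):
    print(before, "->", tuple(round(v, 3) for v in after))
```

Any other point expressed in the same frame, such as Eye_ball_center_left, would need the same rotation so that the whole model stays consistent.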
