
data row is smaller than a column index, internal schema representation is probably out of sync with real database schema #732

Comments


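Is there any follow-up on this issue? Plain Debezium runs into exactly the same problem. Does anyone have experience dealing with it?

I don't know how to deal with it either; we'll probably switch to Canal for data synchronization. I suspect it might be a MySQL version issue. Are you also on MySQL 5.6?

Same problem on MySQL 5.7.

Do you hit this error with both flink-cdc and plain Debezium?

Could you file a bug with your environment details and the error message? Then developers in the community may be able to take a look.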


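@1105220927 @SEZ9 Could you post the complete Flink SQL? Was the job started in initial mode (full snapshot first, then incremental reads)?

I wrote it with the streaming API, and the code does basically nothing extra. The job mode is schema_only.

I'm using Debezium directly, configured with `"snapshot.mode":"schema_only_recovery"`.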


@1105220927 @SEZ9 Does this issue reproduce reliably after a restart? You could try setting `database.history.store.only.monitored.tables.ddl` to `false` and see whether that helps.
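A minimal sketch of the Debezium settings mentioned so far, collected into a `java.util.Properties` object (Flink CDC's MySQL source builder has a `debeziumProperties(...)` method that takes one). The keys are the Debezium 1.x names quoted in this thread; newer Debezium releases renamed the schema-history options, so check the documentation for the version your connector bundles. The class and method names are hypothetical.

```java
import java.util.Properties;

class CdcDebugProperties {

    // Collects the Debezium options discussed in this thread.
    // Assumption: Debezium 1.x key names; verify them for your version.
    static Properties debugProperties() {
        Properties props = new Properties();
        // Snapshot mode from the plain-Debezium setup quoted above.
        props.setProperty("snapshot.mode", "schema_only_recovery");
        // Keep DDL for all tables in the schema history, not only the captured ones.
        props.setProperty("database.history.store.only.monitored.tables.ddl", "false");
        return props;
    }

    public static void main(String[] args) {
        // Print the options as key=value lines.
        debugProperties().forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```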


For now, the only workaround is to configure `table.include.list` so that the problematic tables are left out...
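With many captured tables, the include list can be generated rather than written by hand. The sketch below assumes Debezium's `table.include.list` semantics (a comma-separated list of regular expressions matched against `databaseName.tableName`) and escapes the dots so they match literally; it also assumes table names contain no other regex metacharacters. The class, method, and table names are made up.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

class IncludeListBuilder {

    // Returns a table.include.list value containing every table except the excluded ones.
    // Each entry is "database.table"; dots are escaped so the regex matches them literally.
    static String buildIncludeList(List<String> allTables, Set<String> excluded) {
        return allTables.stream()
                .filter(table -> !excluded.contains(table))
                .map(table -> table.replace(".", "\\."))
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Hypothetical tables; "shop.audit" stands in for a table that triggers the error.
        List<String> tables = List.of("shop.orders", "shop.users", "shop.audit");
        System.out.println(buildIncludeList(tables, Set.of("shop.audit")));
        // prints: shop\.orders,shop\.users
    }
}
```

Debezium also has a `table.exclude.list` option, which may be simpler when only a couple of tables need to be dropped; it cannot be set together with `table.include.list`.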

Yes. For the affected database, it reproduces every time the job starts.

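I've tracked down the cause on my side, though I'm not sure it applies to your case. After changing `binlog_row_image` to `FULL`, all long-lived connections have to be restarted, and if the database is a replica, the replica instance has to be restarted before the change takes effect. Otherwise the parameter shows the new value, but the binlog format is still wrong.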
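Why a wrong row image produces this particular error: `binlog_row_image` has both global and session scope, and a session takes the global value when it connects, so existing connections keep writing the old image. With `MINIMAL` (or `NOBLOB`), an UPDATE row event carries only some of the columns, while the connector's schema history still describes the whole table. The sketch below is a hypothetical simplification of that mismatch, not Debezium's actual code; the class, method, and column names are made up.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class RowImageCheck {

    // Hypothetical simplification: map a decoded row (values in column order)
    // onto the column list taken from the connector's schema history.
    static Map<String, Object> toRecord(List<String> schemaColumns, Object[] row) {
        Map<String, Object> record = new LinkedHashMap<>();
        for (int i = 0; i < schemaColumns.size(); i++) {
            if (i >= row.length) {
                // The row carries fewer values than the schema has columns.
                throw new IllegalStateException(
                        "Data row is smaller than a column index, internal schema "
                        + "representation is probably out of sync with real database schema");
            }
            record.put(schemaColumns.get(i), row[i]);
        }
        return record;
    }

    public static void main(String[] args) {
        List<String> columns = List.of("id", "name", "status"); // hypothetical table
        // FULL image: every column is present in the row event.
        System.out.println(toRecord(columns, new Object[] {1, "alice", "active"}));
        // MINIMAL after-image for UPDATE ... SET status = 'inactive', simplified:
        // only the changed column is present, so the row is shorter than the schema.
        try {
            toRecord(columns, new Object[] {"inactive"});
        } catch (IllegalStateException e) {
            System.out.println("Error: " + e.getMessage());
        }
    }
}
```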


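@1105220927 After changing `binlog_row_image`, without restarting the instance,

That's not it for us. Our database has always had `binlog_row_image` set to `FULL`, and only a few tables are affected: production has 1000+ tables, and 2 of them have the problem. I asked the Debezium community, and they replied: 'Just please bear in mind if the database history topic is recreated around a schema change then you have a problem as the unprocessed binlog can still contain the old schema.' I plan to dig further into the topic that stores the schema.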

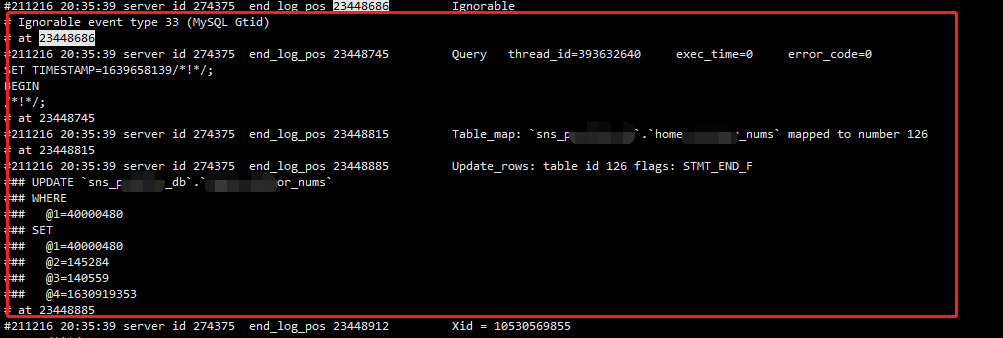

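Yes. The database parameter was correct, but the binlog format of the UPDATE events was still wrong (see screenshot). Because this parameter change only applies to new connections, something must have gone wrong in the process when we changed it earlier, so it never took effect.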

OK, then it may not be the same cause after all. I found the problem by using the `mysqlbinlog` tool to compare the actual log format at the failing binlog position.
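That inspection can be scripted. The sketch below only assembles a `mysqlbinlog` command line for a binlog file and position range: `--base64-output=DECODE-ROWS` together with `-vv` prints row events as commented pseudo-SQL (`### UPDATE ...`, `### SET @1=...`), which shows how many columns each row image actually carries. The class and method names are hypothetical, and the file name and positions are placeholders for the ones reported around the failure.

```java
import java.util.List;

class BinlogInspect {

    // Builds a mysqlbinlog invocation that decodes row events between two positions.
    // DECODE-ROWS suppresses the raw BINLOG base64 blocks; -vv prints each row as
    // commented pseudo-SQL with column type annotations.
    static List<String> mysqlbinlogCommand(String binlogFile, long startPos, long stopPos) {
        if (stopPos <= startPos) {
            throw new IllegalArgumentException("stopPos must be greater than startPos");
        }
        return List.of(
                "mysqlbinlog",
                "--base64-output=DECODE-ROWS",
                "-vv",
                "--start-position=" + startPos,
                "--stop-position=" + stopPos,
                binlogFile);
    }

    public static void main(String[] args) {
        // Placeholder file name and positions.
        List<String> cmd = mysqlbinlogCommand("mysql-bin.000123", 4, 1024);
        System.out.println(String.join(" ", cmd));
        // To run it (requires mysqlbinlog on PATH and read access to the file):
        // new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }
}
```

With a `FULL` image, each UPDATE shows every column under both `### WHERE` and `### SET`; fewer `@N` entries point to a partial row image.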


Has anyone fixed this bug? I'm running into the same problem.


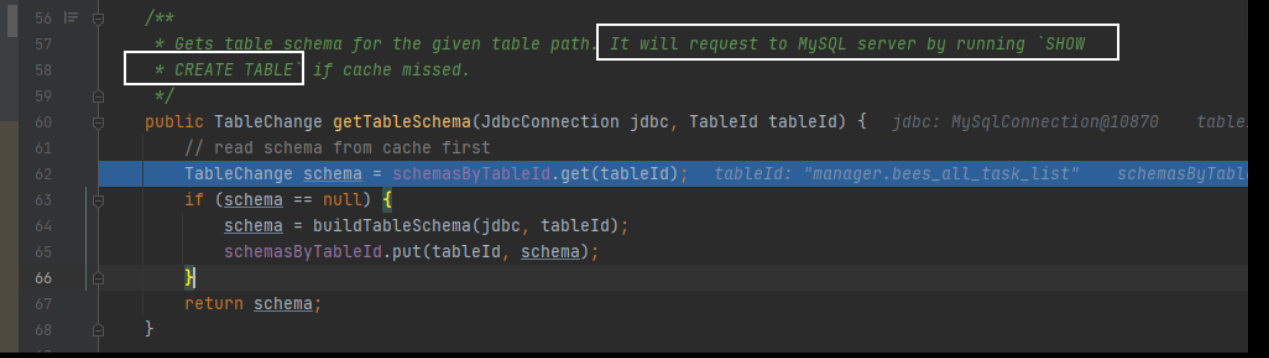

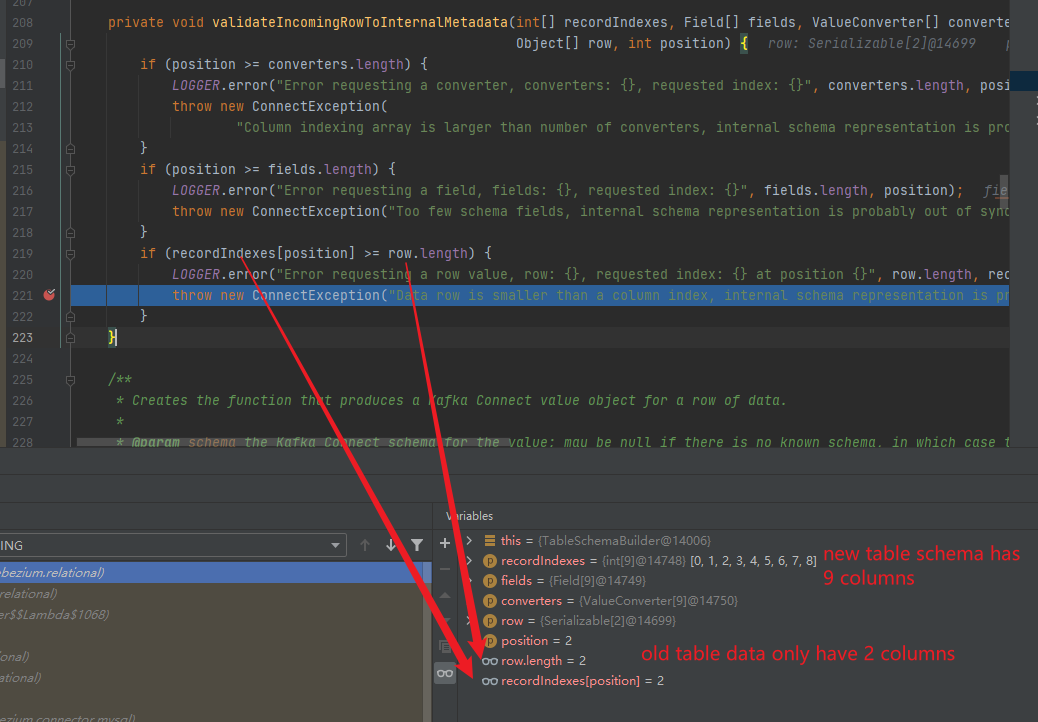

Thanks all for the detailed feedback. There are two reasons that lead to this issue:

It's not easy to fix the issue right now. For (1), all we can do is skip the unparsable binlog data and restrict all DB users from changing the


Getting this error for Db2 connectors as well: `Caused by: org.apache.kafka.connect.errors.ConnectException: Data row is smaller than a column index, internal schema representation is probably out of sync with real database schema`. There has not been any change to the table schema, but it still fails.


Closing this issue because it was created before version 2.3.0 (2022-11-10). Please try the latest version of Flink CDC to see if the issue has been resolved. If the issue is still valid, kindly report it on Apache Jira under project

Describe the bug

error: data row is smaller than a column index, internal schema representation is probably out of sync with real database schema

Environment:

To Reproduce

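The job for this instance fails with this error as soon as it starts.

I tried newer flink-cdc versions (2.0.1/2.0.2/2.1.0) and manually removed the MySQL version restriction in MysqlValidator, but the error is still triggered.

The binlog at that position matches the table structure, and nothing looks abnormal:

The corresponding Flink error log:

Could someone please take a look?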
