[SUPPORT] HoodieMultiTableDeltastreamer - Bypassing SchemaProvider-Class requirement for ParquetDFS #2406

Comments

I think the command-line parameters are not being passed correctly. They should be passed as `--hoodie-conf hoodie.datasource.write.keygenerator.class=org.apache.hudi.keygen.CustomKeyGenerator`. On a related note, your record key and partition path are the same field. This is OK if you are testing out a sample dataset, but it won't scale in the real world, because you would end up with one record per directory.
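
For illustration, here is a minimal Python sketch that turns a dictionary of Hudi properties into the repeated `--hoodie-conf key=value` form, so that each property reaches spark-submit as its own flag/value pair. `hoodie_conf_args` is a hypothetical helper, not part of Hudi. It only assembles arguments and does not validate property names.

```python
import shlex

def hoodie_conf_args(props: dict) -> list:
    """Build repeated '--hoodie-conf key=value' arguments from a dict of properties.

    Hypothetical helper for assembling a spark-submit command line; it does
    not check that the property names or values are valid for Hudi.
    """
    args = []
    for key, value in props.items():
        # Each property becomes its own '--hoodie-conf' flag followed by 'key=value'.
        args.extend(["--hoodie-conf", f"{key}={value}"])
    return args

# The property discussed in this thread.
props = {
    "hoodie.datasource.write.keygenerator.class": "org.apache.hudi.keygen.CustomKeyGenerator",
}
print(shlex.join(hoodie_conf_args(props)))
```

The resulting list can be appended to the rest of the spark-submit arguments. Building a list, rather than concatenating strings, avoids quoting mistakes when values contain spaces.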

Thanks for your response @bvaradar. Entering the parameters that way did the trick for this particular issue. However, shortly afterwards I ran into another error (a NullPointerException), which I assume is the same as the one seen here: https://issues.apache.org/jira/browse/HUDI-1200

As suggested in that ticket, I pivoted to using TimeBasedKeyGenerator, but I ran into yet another issue, which I believe is the same as the one seen here: https://issues.apache.org/jira/browse/HUDI-1150

According to that ticket, this might be caused by null values in the timestamp partition field. So I pivoted again and opted for SimpleKeyGenerator on the same data, with 3 simple fields instead, which worked great! But when I loaded the data in Spark and checked the field in question, there were no null values, only 1970-01-01 values. Could the null values have been automatically converted to those values at some point in the process?

Is it fine if I keep this ticket open while I troubleshoot further? If you'd rather I close this and open another ticket for the different issue, please let me know.
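
A 1970-01-01 date is what a timestamp of 0 (the Unix epoch) renders as, so values like these are often zero placeholders rather than real dates. The sketch below assumes the field holds epoch seconds and uses made-up sample values. It counts nulls separately from values that land on 1970-01-01; it cannot show where in the pipeline a null might have become 0. `utc_date` and `summarize` are hypothetical helpers.

```python
from datetime import datetime, timezone

def utc_date(epoch_seconds):
    """Render an epoch-seconds value as a UTC ISO date string; None stays None."""
    if epoch_seconds is None:
        return None
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).date().isoformat()

def summarize(values):
    """Count nulls and values that fall on 1970-01-01 (the first day after epoch 0)."""
    dates = [utc_date(v) for v in values]
    return {
        "nulls": sum(d is None for d in dates),
        "epoch_day": sum(d == "1970-01-01" for d in dates),
    }

# Hypothetical sample: one real timestamp (2015-01-01 UTC), two zeros, one null.
sample = [1420070400, 0, 0, None]
print(summarize(sample))
```

If the raw source data shows nulls where the written table shows 1970-01-01, that points to a conversion somewhere between the two. Comparing both counts on the source and on the Hudi table is one way to narrow it down.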

No worries. It should be fine to keep this open.

@SureshK-T2S, is there anything else related to this issue that needs to be discussed further?

Hello, thank you for your time on this. I have since run into an issue with MultiTableDeltaStreamer, specifically getting it to work with the ParquetDFS source. The error seems to be caused by the SchemaProvider, or rather the lack of one.

Command:

S3 properties:

Table1 properties:

Table2 properties:

Error:

I'm reaching the final steps of my setup and really hope to get this resolved so I can go live soon!
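
For context, a multi-table setup like this usually has a common properties file that lists the tables to ingest and points to a separate properties file for each table. The minimal Python sketch below renders that section. It assumes the key names `hoodie.deltastreamer.ingestion.tablesToBeIngested` and `hoodie.deltastreamer.ingestion.<database>.<table>.configFile` used by HoodieMultiTableDeltaStreamer; check them against the documentation for your Hudi version. The database, table names and S3 paths are hypothetical.

```python
def multi_table_props(tables: dict) -> str:
    """Render the table-list section of a multi-table DeltaStreamer properties file.

    `tables` maps "database.table" names to the path of each table's own
    properties file. Key names are assumed from HoodieMultiTableDeltaStreamer;
    verify them against your Hudi version.
    """
    lines = ["hoodie.deltastreamer.ingestion.tablesToBeIngested=" + ",".join(tables)]
    for name, config_file in tables.items():
        # One configFile entry for each table listed above.
        lines.append(f"hoodie.deltastreamer.ingestion.{name}.configFile={config_file}")
    return "\n".join(lines)

# Hypothetical database, table names and S3 paths.
print(multi_table_props({
    "db.table1": "s3://my-bucket/config/table1.properties",
    "db.table2": "s3://my-bucket/config/table2.properties",
}))
```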

It looks like you are required to set `--schemaprovider-class`. @pratyakshsharma, can you please follow up on this ticket?

Thanks for your response. Until now, when using HoodieDeltaStreamer with the ParquetDFS source, I have not had to specify a schema provider class. Looking at the available schema providers, I thought NullTargetSchemaRegistryProvider would suit this case, but I got the following error:

I tried adding `hoodie.deltastreamer.schemaprovider.registry.url` to the props with a blank value, but that gave me a malformed URL error. Please let me know if I should be using a different schema provider class or approach.
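
A blank value for `hoodie.deltastreamer.schemaprovider.registry.url` has no scheme at all. Java's `java.net.URL` rejects such a string with a `MalformedURLException`, which is a likely (assumed, not confirmed) source of the malformed URL error. The hypothetical Python pre-flight check below flags blank or scheme-less values before a job is submitted. It does not contact any registry and does not guarantee that Java will accept the value.

```python
from urllib.parse import urlparse

def registry_url_looks_valid(url: str) -> bool:
    """Hypothetical pre-flight check for a schema registry URL property.

    Rejects blank and scheme-less values; it does not contact the registry
    or guarantee that Java's URL parser will accept the value.
    """
    parsed = urlparse(url.strip())
    # A blank or relative value has no scheme ("protocol" in java.net.URL terms).
    return bool(parsed.scheme) and bool(parsed.netloc or parsed.path)

for candidate in ["", "http://localhost:8081/subjects/my-topic-value/versions/latest"]:
    print(repr(candidate), registry_url_looks_valid(candidate))
```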

cc @pratyakshsharma, could you please help out with this issue?

Hi guys, any ideas on this one?

It looks like there could be a bug. Here is the reason:

As you might have figured out, I haven't worked with this code base before, so I will have to write tests to ensure the fix works. In the meantime, if you have access to the StructType (schema), you can try using RowBasedSchemaProvider to unblock yourself.

I have put up a fix here: #2577. The fix works.

Also, it looks like we don't have good test coverage for the multi-table delta streamer. I have added a JIRA here.

Closing this for now. Please do reach out to us if you need more help; we're happy to help you out. Thanks for helping make Hudi better :)

Hi @nsivabalan, I downloaded the latest version (0.7.0) of hudi-utilities-bundle.jar from the Maven repository (https://mvnrepository.com/artifact/org.apache.hudi/hudi-utilities-bundle_2.11/0.7.0) and tried to run the spark-submit multi-table delta streamer command without providing the schema provider class (I hope this is no longer mandatory after fix #2577). However, I am still receiving the same error that Suresh mentioned above.

SPARK SUBMIT COMMAND

Am I missing anything here, or is the above PR not included in the 0.7.0 Maven jar? Thank you.

Yes, as you can see from the commit, it was merged two to three weeks ago. We have an upcoming release in a week or two, so you should have it in 0.8.0. 0.7.0 does not have this fix; if you want to verify it now, you can pull the latest master and try it out.
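
To check which bundle you are actually running, the release version can be read from the jar name. The minimal Python sketch below does that and compares it with 0.8.0, the release this comment expects to carry the fix. `bundle_version` and `may_include_fix` are hypothetical helpers. They only understand release jar names such as `hudi-utilities-bundle_2.11-0.7.0.jar` and return no version for snapshot builds, whose contents depend on when they were built from master.

```python
import re
from typing import Optional, Tuple

# Per the comment above: 0.7.0 lacks the fix, and it is expected in 0.8.0.
FIRST_VERSION_WITH_FIX = (0, 8, 0)

def bundle_version(jar_name: str) -> Optional[Tuple[int, ...]]:
    """Parse (major, minor, patch) from a release jar name such as
    'hudi-utilities-bundle_2.11-0.7.0.jar'. Returns None if there is no match."""
    match = re.search(r"-(\d+)\.(\d+)\.(\d+)\.jar$", jar_name)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())

def may_include_fix(jar_name: str) -> bool:
    """True if the jar is a release at or above the version expected to include the fix."""
    version = bundle_version(jar_name)
    return version is not None and version >= FIRST_VERSION_WITH_FIX

print(may_include_fix("hudi-utilities-bundle_2.11-0.7.0.jar"))
```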

Thanks @nsivabalan for confirming. |

I am attempting to create a Hudi table from a Parquet file on S3. The motivation for this approach comes from this Hudi blog post:

https://cwiki.apache.org/confluence/display/HUDI/2020/01/20/Change+Capture+Using+AWS+Database+Migration+Service+and+Hudi

As a first attempt at using DeltaStreamer to ingest a full initial batch load, I used the Parquet files at s3://athena-examples-us-west-2/elb/parquet/year=2015/month=1/day=1/, which are used in this AWS blog post:

https://aws.amazon.com/blogs/aws/new-insert-update-delete-data-on-s3-with-amazon-emr-and-apache-hudi/

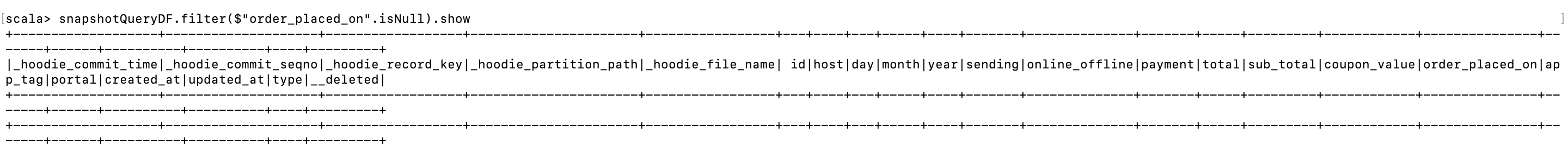

First, I used the Spark shell on EMR to load the data into a DataFrame and view it; this worked without any issues:

I then attempted to use Hudi DeltaStreamer as I understood it from the documentation; however, I ran into a couple of issues.

Steps to reproduce the behavior:

Stacktrace:

This gives rise to a different error:

Expected behavior

I've clearly specified the partition path field with `hoodie.datasource.write.partitionpath.field=request_timestamp:TIMESTAMP`. However, this consistently fails for me, even on other Parquet files. I assumed the problem might be that it needs to be added to the dfs-source.properties file, so I added the following to that file:
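
The `field:TYPE` syntax in `request_timestamp:TIMESTAMP` is the format that CustomKeyGenerator expects: TYPE is SIMPLE or TIMESTAMP, and multiple fields are comma-separated. With other key generators, the whole string is presumably treated as a plain field name. The hypothetical Python helper below splits such a value and sanity-checks its syntax. It does not check whether the configured key generator understands the format, or whether the extra timestamp settings a TIMESTAMP field needs are present.

```python
VALID_TYPES = ("SIMPLE", "TIMESTAMP")

def parse_partition_fields(spec: str) -> list:
    """Split a 'field:TYPE[,field:TYPE...]' value into (field, TYPE) pairs.

    Hypothetical syntax check only; TYPE must be exactly SIMPLE or TIMESTAMP.
    """
    pairs = []
    for item in spec.split(","):
        field, sep, kind = item.strip().partition(":")
        # Reject entries with no ':' separator, an empty field name, or an unknown type.
        if not sep or not field or kind not in VALID_TYPES:
            raise ValueError(f"expected 'field:SIMPLE' or 'field:TIMESTAMP', got {item.strip()!r}")
        pairs.append((field, kind))
    return pairs

print(parse_partition_fields("request_timestamp:TIMESTAMP"))
```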

However, that didn't fix anything. I also passed the location of the file via `--props`, but it couldn't find the file, even though I can display its contents in the terminal using `cat`.
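
One possible explanation, stated as an assumption: DeltaStreamer opens the `--props` file through the Hadoop FileSystem API. If so, a path without a scheme may be resolved against the cluster's default filesystem (HDFS on EMR) instead of the local disk where `cat` works. Passing an explicit `file://` (or `s3://`) URI would sidestep that. The hypothetical helper below adds the `file://` scheme to a local POSIX path and leaves paths that already have a scheme untouched.

```python
import os
from pathlib import Path
from urllib.parse import urlparse

def props_uri(path: str) -> str:
    """Return `path` unchanged if it already has a scheme (s3://, hdfs://, file://),
    otherwise convert a local POSIX path into an absolute file:// URI."""
    if urlparse(path).scheme:
        return path
    # as_uri() requires an absolute path, so resolve relative paths against the cwd first.
    return Path(os.path.abspath(path)).as_uri()

print(props_uri("/home/hadoop/dfs-source.properties"))
```

In cluster deploy mode, the driver may also run on a different node from the one where the file exists, so copying the file to S3 and passing its `s3://` URI can be the more robust option.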

Suspecting that the choice of key generator was the issue, I tried several other key generators, including Custom, Complex and TimeBased. However, it wasn't able to load the class for any of them.

Please let me know if I am doing anything wrong here.

Environment Description

Hudi version : 0.6.0

Spark version : 2.4.7-amzn-0 (Scala version 2.11.12)

Hive version :

Hadoop version : 2.10.1-amzn-0

Storage (HDFS/S3/GCS..) : S3

Running on Docker? (yes/no) : no
