New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Generating 2d bounding boxes for the dataset-images, missing mesh_vertices_hdf5_file #18

Comments

|

Hi! Can you provide a bit more detail about what exactly you want? If you only need 2D bounding boxes in image space, you can generate them yourself from the segmentation images we provide. If you need 3D bounding boxes in world space, where one axis is always aligned with the world-space gravity, we provide those too. We don't provide the mesh files you're referring to. In order to obtain these files, you need to purchase the assets yourself. It costs about $6K to purchase the entire dataset, or roughly $15 per scene, and scenes can be purchased in small batches. Rendering our entire dataset costs roughly $51K, and we describe our rendering pipeline in detail in our arXiv paper. |

|

Hi @mikeroberts3000, Thanks for your reply! Just like you mentioned we only need 2D bounding boxes in image space. Do you already have any script to generate the bounding boxes from the segmentation images? |

|

We don't provide code for this. Is there something preventing you from simply computing 2D bounding boxes directly from the segmentation masks? |

|

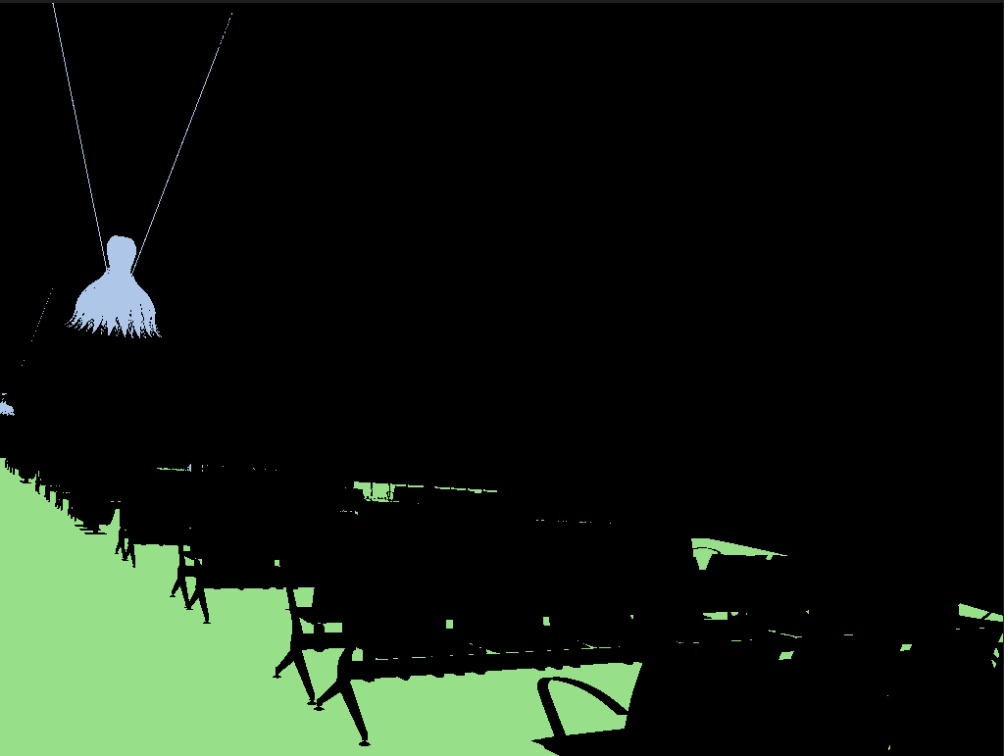

Hi @mikeroberts3000, I tried generating 2D bounding boxes using the semantic segmentation map. However, due to the presence of some missing annotations, I am facing some issues. For instance, this is the semantic segmentation map of The original image looks like It is clearly evident that there are many chairs here in the scene, but they have not been marked. Also, due to the presence of the chairs, the floor annotation in the semantic map has speckles here and there. Thus when I am generating 2D bounding boxes, they have a weird appearance. Also, many images have overlapping annotations from the instance segmentation map, which is expected due to the high no of objects in a scene. However, that is leading to too many bounding boxes, giving it a weird look. I tried to generate one here: May I know if you have any suggestions as to how I could obtain bounding box annotations such that they are suitable to train a detector? |

|

|

Thanks for your response @mikeroberts3000

Yes, the floor image is correctly segmented. And I understand that a lot of distinct objects would come up and that is expected. I wondered if there could be a different way of obtaining the 2D bounding boxes -- something like converting them from the 3D bounding boxes? May I know if that would be possible? |

|

Yes, you can compute 2D bounding boxes by projecting each corner of each 3D bounding box into an image. We provide code to do this (see Using the 3D bounding boxes in this way will yield "amodal" 2D bounding boxes, i.e., bounding boxes that ignore occlusions. It is also worth mentioning that the 2D bounding boxes you compute will be conservative, in the sense that they will be the same size or bigger than if you projected the object into 2D with no occlusions. |

Hi, i was trying to generate the 2d bounding boxes for ai_001_001 by using the script - "dataset_generate_bounding_boxes.py".

The script fails with an assertion. The script requires the following files -

I couldn't find these above files in the downloaded dataset nor in the checked-in code.

Do I need to purchase the asset files for creating the 2d bounding boxes?

Also in issue #17 you mentioned that "re-rendering our dataset will cost roughly $51K USD" could you please elaborate what you mean by re-rendering process? And do i have to pay for the re-rendering as well to generate the 2d bounding boxes for the existing images in your dataset.

The text was updated successfully, but these errors were encountered: