train.py crashing due to empty images #952

Comments

|

Hi @JacobGlennAyers - If you say faulty images, do you mean a corrupted |

|

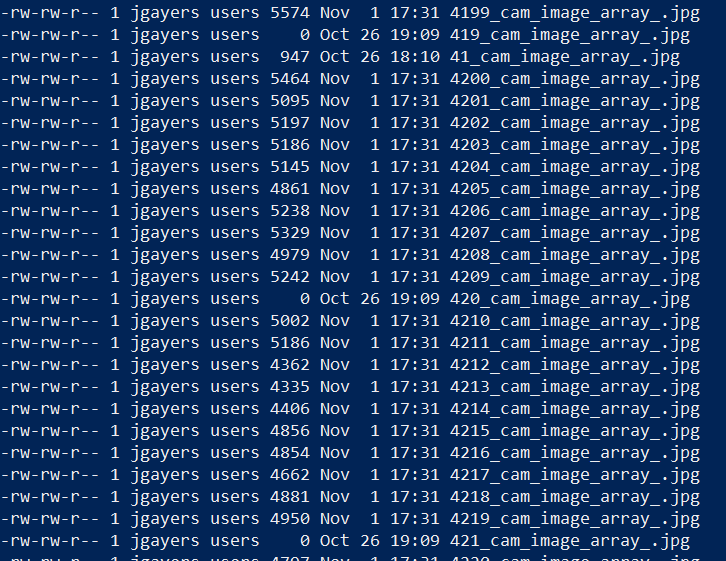

Hi @DocGarbanzo - I believe that they are corrupted .jpg files since they show up in an ls -l command, but are empty (show as 0 bytes) |

|

For a long-term solution, do you think it would be best to have some sort of error handling within the train.py script? Or would it be more appropriate to have some sort of data sanitization step that removes references to faulty data from the catalogue files? |

|

I have never seen this before. From your listing below it looks like there is a continuous set of images (..., 419, 420, 421, ...) which failed to write. The timestamp of these files is also incorrect. To me it looks more like an I/O issue, because if the image were somehow corrupted and couldn't be generated from the camera data, then the jpg wouldn't even be created. Also, image 41 looks corrupt; can you check whether it is a valid jpg or not? Which platform / filesystem did you use for recording? |

|

@DocGarbanzo Yes, it may very well be an I/O issue, though we never encountered this problem with the train script in Donkey 3 (with the old tub directory setup). I guess I can check some older files we collected with Donkey 3 to see if any of them had this same problem. We have used the same hardware with Donkey 3 and Donkey 4. I can check out the files in a bit. We are using a webcam (I can check the model later if that is relevant). We are using Jetson Nano devices with an Ubuntu OS. |

|

I can confirm this happened to me too. I even have a script that cleans up faulty images before training. I don't think it is related to particular hardware. My gut feeling is that this was introduced in v4, but I have no evidence. |

|

@sctse999 Any chance that I could try out that cleanup script on my end? Is it just a short script that loops through the images folder, finds which ones are bad, then deletes the references to them in the relevant catalogue file? |

|

Ok, so if you want to filter corrupted images at the training stage, I would not recommend manually editing the catalog files and their manifest files, so as not to damage the integrity of the datastore. The better solution is to set those records as deleted by using the |

|

@DocGarbanzo By corrupt indexes, do you mean with respect to their order in the "images" folder nested in the "data" folder? |

Not necessarily the order, I meant the index of the corrupted jpeg file, so |

|

@DocGarbanzo Thanks for the clarification |

|

@DocGarbanzo A new observation I have made related to this issue is that sometimes the program will randomly generate blank black images. So it seems that the 0-byte files are corrupted, while the 947-byte files are just blank black images. My first thought was that these might be from accidental recordings with a lens cap over the webcam, but they appear right in the middle of a stream of proper images, so it seems to be either a hardware or software problem. I will probably make my script find indices of files smaller than 1000 bytes for our use case. Not sure what a more general solution would look like. |
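A size-based scan like the one described above could be sketched as follows. This is only an illustration, not part of donkeycar: the function name `find_small_images` and the `min_bytes` parameter are hypothetical, and it assumes image file names start with the record index, as in `492_cam_image_array_.jpg` from the log in this issue.

```python
from pathlib import Path


def find_small_images(images_dir, min_bytes=1000):
    """Return sorted record indices of .jpg files smaller than min_bytes.

    Assumption: each file name starts with the record index followed by
    an underscore, e.g. '492_cam_image_array_.jpg'.
    """
    bad = []
    for path in Path(images_dir).glob("*.jpg"):
        prefix = path.name.split("_", 1)[0]
        # Skip files that do not follow the assumed naming scheme.
        if prefix.isdigit() and path.stat().st_size < min_bytes:
            bad.append(int(prefix))
    return sorted(bad)
```

The default of 1000 bytes mirrors the threshold mentioned in the comment and is specific to that camera setup; a threshold of 1 would catch only the 0-byte files.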

|

@JacobGlennAyers - the most robust solution would be to try loading the image file. You can do this through building the generator or list of |
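As a rough sketch of the "try loading the image file" idea, the check below uses Pillow directly rather than any donkeycar helper. The function name `is_loadable` is hypothetical, and how the resulting list of bad files is then fed back into the tub is left open here.

```python
from PIL import Image


def is_loadable(path):
    """Return True if Pillow can fully decode the image at path."""
    try:
        with Image.open(path) as img:
            img.load()  # force a full decode, not just a header read
        return True
    except (OSError, SyntaxError):
        # OSError covers missing files, files Pillow cannot identify
        # (such as 0-byte files) and truncated image data.
        return False
```

Unlike a file-size check, this does not depend on the image dimensions or the camera, but it will not flag images that decode correctly and are simply black.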

|

I created this script that checks if the images are either all black (based on the dimensions for our project) or empty (size = 0), loads the filenames into a set, copies the "data" directory into a new directory, and fixes the catalog files to no longer reference the bad images (those that are in the aforementioned set). Definitely not a general solution, but good enough for Jack Silberman's UCSD ECE 148 course. Relevant file can be found here: https://github.com/JacobGlennAyers/donkeycar4_training_fix |
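For readers who want a black-image check that does not depend on the file size for one particular resolution, a pixel-based test is one option. The sketch below is not taken from the linked script; `is_all_black` and `max_value` are hypothetical names, and it assumes Pillow is installed and that the file can be opened at all.

```python
from PIL import Image


def is_all_black(path, max_value=0):
    """Return True if no pixel is brighter than max_value (0-255)."""
    with Image.open(path) as img:
        # In greyscale mode getextrema() returns a single (min, max) pair.
        _, brightest = img.convert("L").getextrema()
    return brightest <= max_value
```

Raising `max_value` slightly would tolerate compression noise in nearly black frames. The function raises an exception on files that cannot be opened, so empty files would need to be filtered out first.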

|

There is a PR that prevents the cameras from returning a None image. That should get merged shortly. It would be good to know if that prevents your issue in the first place. #967 |

|

@JacobGlennAyers Pull Request 967 has been merged. It contains the fix for the PICAM, USBCAM and CSIC camera configurations that prevents them from returning empty images. If you do a pull on the dev branch you will get the code. Could you give it a try and let us know if that solves your problem? That would allow us to close this issue as done. |

|

@Ezward Hi there! So it is a fix at the source, where the data is created? In that case, I am no longer able to help you out in that domain. I have completed the course that required me to use this software and have consequently disassembled the robot that I used to collect the data. I believe a new batch of students will be able to test this out in and around week 4 or 5 (which starts tomorrow) of the course. I will let the professor know that he should task some student with testing out the dev branch of the donkeycar 4 repository.

Hi there!

We are running Donkey 4 and found that sometimes our webcam would read in some empty data. They would be very few and far between, say about 4 faulty recordings per 8000 images. We found that when we ran the train script on our data folder, it would crash due to the empty images:

Records # Training 4335

Records # Validation 1084

Epoch 1/100

2021-11-04 20:30:23.748365: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10

cannot identify image file 'data/images/492_cam_image_array_.jpg'

failed to load image: data/images/492_cam_image_array_.jpg

2021-11-04 20:30:23.946395: W tensorflow/core/framework/op_kernel.cc:1741] Invalid argument: TypeError: %d format: a number is required, not str

Traceback (most recent call last):

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/ops/script_ops.py", line 243, in call

ret = func(*args)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/autograph/impl/api.py", line 309, in wrapper

return func(*args, **kwargs)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/data/ops/dataset_ops.py", line 785, in generator_py_func

values = next(generator_state.get_iterator(iterator_id))

File "/opt/local/donkeycar/donkeycar/pipeline/sequence.py", line 120, in next

return self.x_transform(record), self.y_transform(record)

File "/opt/local/donkeycar/donkeycar/pipeline/training.py", line 60, in get_x

record, self.image_processor)

File "/opt/local/donkeycar/donkeycar/parts/keras.py", line 241, in x_transform_and_process

x_process = img_processor(x_img)

File "/opt/local/donkeycar/donkeycar/pipeline/training.py", line 47, in image_processor

img_arr = self.transformation.run(img_arr)

File "/opt/local/donkeycar/donkeycar/pipeline/augmentations.py", line 120, in run

aug_img_arr = self.augmentations.augment_image(img_arr)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/imgaug/augmenters/meta.py", line 766, in augment_image

type(image).name),)

TypeError: %d format: a number is required, not str

Traceback (most recent call last):

File "train.py", line 31, in

main()

File "train.py", line 27, in main

train(cfg, tubs, model, model_type, comment)

File "/opt/local/donkeycar/donkeycar/pipeline/training.py", line 145, in train

show_plot=cfg.SHOW_PLOT)

File "/opt/local/donkeycar/donkeycar/parts/keras.py", line 183, in train

use_multiprocessing=False)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/keras/engine/training.py", line 66, in _method_wrapper

return method(self, *args, **kwargs)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/keras/engine/training.py", line 848, in fit

tmp_logs = train_function(iterator)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/def_function.py", line 580, in call

result = self._call(*args, **kwds)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/def_function.py", line 644, in _call

return self._stateless_fn(*args, **kwds)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/function.py", line 2420, in call

return graph_function._filtered_call(args, kwargs) # pylint: disable=protected-access

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/function.py", line 1665, in _filtered_call

self.captured_inputs)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/function.py", line 1746, in _call_flat

ctx, args, cancellation_manager=cancellation_manager))

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/function.py", line 598, in call

ctx=ctx)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/eager/execute.py", line 60, in quick_execute

inputs, attrs, num_outputs)

tensorflow.python.framework.errors_impl.InvalidArgumentError: TypeError: %d format: a number is required, not str

Traceback (most recent call last):

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/ops/script_ops.py", line 243, in call

ret = func(*args)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/autograph/impl/api.py", line 309, in wrapper

return func(*args, **kwargs)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/tensorflow/python/data/ops/dataset_ops.py", line 785, in generator_py_func

values = next(generator_state.get_iterator(iterator_id))

File "/opt/local/donkeycar/donkeycar/pipeline/sequence.py", line 120, in next

return self.x_transform(record), self.y_transform(record)

File "/opt/local/donkeycar/donkeycar/pipeline/training.py", line 60, in get_x

record, self.image_processor)

File "/opt/local/donkeycar/donkeycar/parts/keras.py", line 241, in x_transform_and_process

x_process = img_processor(x_img)

File "/opt/local/donkeycar/donkeycar/pipeline/training.py", line 47, in image_processor

img_arr = self.transformation.run(img_arr)

File "/opt/local/donkeycar/donkeycar/pipeline/augmentations.py", line 120, in run

aug_img_arr = self.augmentations.augment_image(img_arr)

File "/opt/conda/envs/donkey/lib/python3.7/site-packages/imgaug/augmenters/meta.py", line 766, in augment_image

type(image).name),)

TypeError: %d format: a number is required, not str

Function call stack:

train_function

Our temporary workaround was to run "ls -l" over the "images" folder to find the faulty recordings, and then remove from the manifest.json file the problematic catalogues that pointed to those faulty images.
