-

Notifications

You must be signed in to change notification settings - Fork 5.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Clusters With Same Name In Different Accounts Are Indistinguishable In UI #13793

Comments

|

ping @backstage/warpspeed |

|

I believe the required information is present already in memory, right? Maybe the list could be smart and detect that there are duplicated names, and for those write e.g. the container identifier in parentheses after the name, or something like that. Might you be able to contribute a fix for that? 🙏 |

|

Hello @freben , my team has a few quarterly goals we are trying to wrap up, but I plan to work on issues like this one in October, which is only a few weeks away. That being said this is probably one where I don't know 100% if your suggested solution will be ideal. It depends on what information comes back from the resources and whether that information, such as the container identifier, is helpful to the user. I will see what I can do when I work on this issue. I really appreciate the helpful suggestion though, thank you. 🙏 |

|

So I think the problem is that we might want to display certain characteristics of the cluster in the accordion? These might be: account, region or cloud provider Perhaps an icon for the cloud provider or a chip for the region might make sense? What were you thinking? @freben do we have any other example of showing this detail to users? Maybe we could talk to a designer? |

|

Yes that is similar to my original idea which is to include account name alongside the cluster name. Problem I originally saw with this is that the call to the api service doesn't currently have this information. A separate api call to the aws / cloud provider service might need to be made. But those are of course implementation details and the UX is more important, though I would hesitate to add extra api calls without your guidance. Designer input would of course be appreciated. |

|

A possible solution could be to add a metadata field here https://github.com/backstage/backstage/blob/master/plugins/kubernetes-backend/src/types/types.ts#L141 to populate this information, and retain this information somehow when we call Long term I think we would want to store this information on the cluster entity in the catalog (RFC) . |

|

Yeah that seems like another good option. |

|

No strong preference on my part! I say go with y'all's guts for now on this one. |

|

Made this PR, please check if it is a good solution for this ticket #14712 |

|

I made this PR to address the issue - #15683. The main crux of the issue is that to generate a bearer token for the cluster you must include the cluster name. The implementation was using the field clusterDetails.name for this purposes, meaning that if you had clusters named the same across accounts they all had to have the same name in Backstage. The PR above extends the clusterDetails adding a new field applicable just to #14712 somewhat accomplishes the same thing but is more complex in adding an alias applicable to all auth types. As this problem only affects clusters of type auth AWS I think it makes more sense to limit the scope of change. |

|

I know the other PR was suggested instead to do an RFC to get community input first. @cyrus-mc yours looks backwards compatible and if it supports your use case then I leave it up to the maintainers to comment. It would help my use case as well but not after I switch to the AWS EKS collector. Maybe offering an input function which takes as input the cluster details and hopefully account details as well which would allow people to customize how they want to return the cluster display name. Like for my company personally all accounts have a name configured so if I made a follow-up aws query to get the account name I could join that with the cluster name to make each display name unique. Not everyone has that configuration though. But if it were a function the user can provide anything. I don't know if I have the time to invest more into this right now but might find someone else in my team or a close team to do so soon. |

|

Adding the cloud account name/id will not solve all problems. For instance, if I have multiple clusters with the same name in the same account, but different regions. This means I would have to compile the string from the There is another problem: your engineers might know these clusters under different names from what we can compile with the account name/id. Let's say, we compile the human-readable cluster name from the What if we make the If |

|

Yes your points are all valid and I agree. |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

Does it have any clear future? |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

I don't think there is a clear future for this tbh, it could be we require cluster names to be unique, this is currently the case for the proxy endpoint. WDYT @jamieklassen |

|

I do think the "Right Thing" to do here is indeed enforce unique names for clusters (@mclarke47 as you alluded, this would solve the proxy bug #15901 for free). It'd be a breaking change to existing setups, which I don't feel great about. Maybe we could have a separate issue for this validation (and updating existing catalog ingestion mechanisms to obey it) to help people get unblocked for the time being. I suspect that in the long-term, we can get unique unambiguous cluster names for free by fully deprecating clusterLocators and always sourcing from the catalog per #13727. |

|

Why can't we add an optional parameter that will change the name in the UI? It is not related to the authentication and can help people to mitigate this issue. There were a couple of PRs to implement this, they were rejected for various reasons, but the problem is still present. |

|

I agree with @jamieklassen the best solution would be to source the data from the catalog where it is unique. Let's keep this open unitl #13727 is fixed in that case |

|

It does not cover the case when you can't change the cluster name. |

|

@sydorovdmytro as this discussion has evolved, I get the feeling that I think your desired feature has shifted a bit from the initial request -- which, as I understand it, was strictly about ambiguity resulting from identical names. Please forgive me for focusing so narrowly on the problem described in the issue title. Some other maintainers may have suffered from the same interpretation, and this may have confused the discussion. When I re-read your posts here, I get the sense that you are also concerned about a different kind of ambiguity -- resulting from machine-generated (or otherwise "unchangeable") names. This idea of a "cluster nickname" for user-friendliness still does feel worthwhile to me, but I'd like to address it separately from the "uniqueness/ambiguity" topic. In fact, if we did proceed with the catalog-first approach in #13727, the canonical field to use for something like this is

@mclarke47 I'd allow adding a backstage/plugins/kubernetes-backend/src/cluster-locator/ConfigClusterLocator.ts Lines 33 to 42 in 56c4216

and having the catalog clusterLocator read it from backstage/plugins/kubernetes-backend/src/cluster-locator/CatalogClusterLocator.ts Lines 64 to 87 in 56c4216

I realize that this is almost exactly what was done in #14681 and #14712, and I'm sorry those PRs got closed the way they did, but I think that setting the context here (and using the word "title", however pedantic that seems) might make a big difference. @sydorovdmytro have I represented your concern here? Have I missed anything? |

|

thanks @jamieklassen, it makes total sense now! |

|

@jamieklassen I think part of the reason why this problem exists in the first place, is that cluster name is tied to authentication when using As a workaround we have created our own KubernetesAuthTranslator implementationand then override it for kubernetes plugin builder (need to explicitly set all default auth translators if used): |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

I'm going to write a new issue for unique cluster IDs, but until then this is the issue with the most activity on the topic and I don't want to give the impression we've forgotten about it! |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

Expected Behavior

If a cluster has the same

namein two different AWS accounts (let's say stage and production) I want the UI to have some way to distinguish them. Maybe that would mean it shows the aws account name or maybe the configuration includes a descriptive name or maybe there's a tag on the cluster resource. I am just reporting the problem and don't have a perfect solution yet since it's hard for me to understand all the possible ways one can configure clusters in the different cloud providers. My suggested solution may only work for my own use case.Actual Behavior

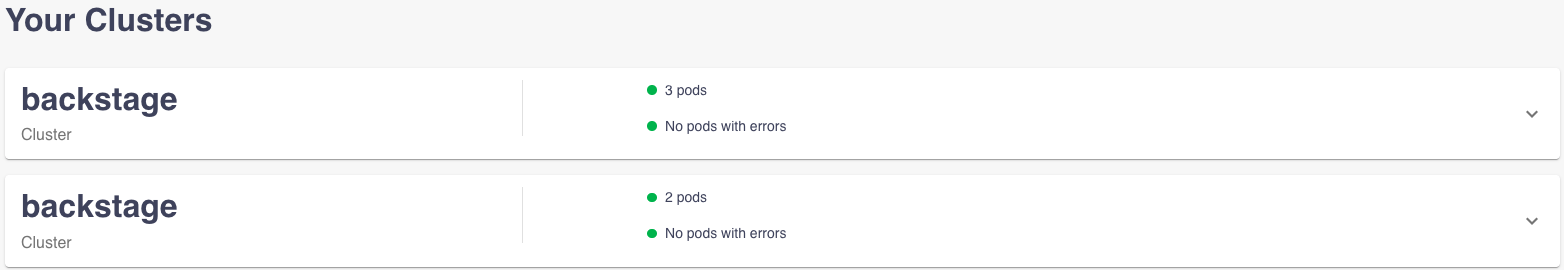

If a cluster with the same name exists in two accounts it shows the same cluster card for both and the only way to distinguish is to expand the full resource yaml details and see some details inside such as the container account id. Below is a screenshot showing two clusters named

backstage.I tried changing the name in the configuration hoping the URL is all it really needs, but the

awsauthentication must rely on the name of the cluster in some way since it does not work if I call it something else. It throws 401 unauthorized errors if I change the name for that specific cluster.Steps to Reproduce

Screenshot above shows an example with two same name clusters. Steps to somewhat reproduce below, will do my best to include configuration as well.

Context

This is a confusing UX for users who would have no way to know which cluster card corresponds to which account's cluster. Let me know if more information is needed.

Your Environment

yarn backstage-cli info:yarn backstage-cli info yarn run v1.22.17 $ /Users/asaferlich/dev/src/github.com/segmentio/backstage-segment/node_modules/.bin/backstage-cli info OS: Darwin 21.6.0 - darwin/arm64 node: v16.14.2 yarn: 1.22.17 cli: 0.18.1 (installed) backstage: 1.5.1 Dependencies: @backstage/app-defaults 1.0.5 @backstage/backend-common 0.15.0 @backstage/backend-plugin-api 0.1.1 @backstage/backend-tasks 0.3.4 @backstage/catalog-client 1.0.4 @backstage/catalog-model 1.1.0 @backstage/cli-common 0.1.9 @backstage/cli 0.18.1 @backstage/config-loader 1.1.3 @backstage/config 1.0.1 @backstage/core-app-api 1.0.5 @backstage/core-components 0.11.0 @backstage/core-plugin-api 1.0.5 @backstage/dev-utils 1.0.5 @backstage/errors 1.1.0 @backstage/integration-react 1.1.3 @backstage/integration 1.3.0 @backstage/plugin-analytics-module-ga 0.1.19 @backstage/plugin-api-docs 0.8.8 @backstage/plugin-app-backend 0.3.35 @backstage/plugin-auth-backend 0.15.1 @backstage/plugin-auth-node 0.2.4 @backstage/plugin-catalog-backend-module-github 0.1.6 @backstage/plugin-catalog-backend 1.3.1 @backstage/plugin-catalog-common 1.0.5 @backstage/plugin-catalog-graph 0.2.20 @backstage/plugin-catalog-node 1.0.1 @backstage/plugin-catalog-react 1.1.3 @backstage/plugin-catalog 1.5.0 @backstage/plugin-github-actions 0.5.8 @backstage/plugin-github-pull-requests-board 0.1.2 @backstage/plugin-home 0.4.24 @backstage/plugin-kubernetes-backend 0.7.1 @backstage/plugin-kubernetes-common 0.4.1 @backstage/plugin-kubernetes 0.7.1 @backstage/plugin-org 0.5.8 @backstage/plugin-pagerduty 0.5.1 @backstage/plugin-permission-common 0.6.3 @backstage/plugin-permission-node 0.6.4 @backstage/plugin-permission-react 0.4.4 @backstage/plugin-proxy-backend 0.2.29 @backstage/plugin-scaffolder-backend 1.5.1 @backstage/plugin-scaffolder-common 1.1.2 @backstage/plugin-scaffolder 1.5.0 @backstage/plugin-search-backend-module-pg 0.3.6 @backstage/plugin-search-backend-node 1.0.1 @backstage/plugin-search-backend 1.0.1 @backstage/plugin-search-common 1.0.0 @backstage/plugin-search-react 1.0.1 @backstage/plugin-search 1.0.1 @backstage/plugin-shortcuts 0.3.0 @backstage/plugin-stack-overflow 0.1.4 @backstage/plugin-techdocs-backend 1.2.1 @backstage/plugin-techdocs-module-addons-contrib 1.0.3 @backstage/plugin-techdocs-node 1.3.0 @backstage/plugin-techdocs-react 1.0.3 @backstage/plugin-techdocs 1.3.1 @backstage/plugin-user-settings 0.4.7 @backstage/release-manifests 0.0.5 @backstage/test-utils 1.1.3 @backstage/theme 0.2.16 @backstage/types 1.0.0 @backstage/version-bridge 1.0.1 ✨ Done in 0.96s.Note: This issue should probably have the

k8s-pluginlabel but I don't think I can add it myself.The text was updated successfully, but these errors were encountered: