This repo contains scripts and a tool to reproduce an issue in the OpenCL delegate with models that expose the output of an intermediate node as a model output. In such cases, the OpenCL delegate generates all-zero outputs.

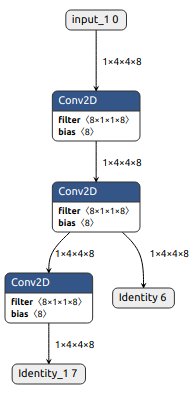

Here is a very simple example. Consider the following tflite model:

If you run inference on this model with the OpenCL delegate, you will get all zeros for the Identity output (and wrong results from Identity_1).

Our experiments have revealed that the solution for this issue is to use an identity/neutral node (e.g. Relu) and build a fake branch:

This fake branch helps us to get correct results from both Identity and Identity_1 outputs.

- The `model_files` folder contains a very simple model (`direct_output.h5`) representing the above-mentioned issue and its corresponding tflite version (`fp32_direct_output.tflite`).

- You can also use `generate_dummy_model.py` to build the model and `convert_model.py` to convert it to tflite.

We have implemented a small tool to feed an input to our sample tflite model using the OpenCL delegate.

- Linux host computer

- Connectivity to the target device via adb

- Android NDK, version 22 or later

- CMake 3.18 or later

- Unzip the `tensorflow_lite_cpp_2_9_1_nightly.zip` file inside the `tflite_inference_tool` folder.

- In a terminal, from the `tflite_inference_tool` folder:

      $ mkdir build
      $ cd build
      $ cmake -G "Unix Makefiles" \
          -DCMAKE_SYSTEM_NAME=Android \
          -DANDROID_ABI=arm64-v8a \
          -DANDROID_STL=c++_shared \
          -DANDROID_NATIVE_API_LEVEL=27 \
          -DCMAKE_VERBOSE_MAKEFILE=ON \
          -DCMAKE_TOOLCHAIN_FILE=<path-to-ndk>/build/cmake/android.toolchain.cmake \
          -DCMAKE_BUILD_TYPE=Release \
          -DTensorFlowLite_ROOT=../tensorflow_lite_cpp_2_9_1_nightly ..
      $ make

- Here, you must replace `<path-to-ndk>` with the absolute path of the NDK installed on your computer. If you installed the NDK through Android Studio, it is typically located at `/home/<username>/Android/Sdk/ndk/<version>/` on Linux.

- `tensorflow_lite_cpp_2_9_1_nightly` is the TensorFlow Lite library (nightly version) package.

WARNING: This step will write to the /data/local/tmp folder on your device. Please make sure existing files in that folder are backed up as needed.

In a terminal, from the `tflite_inference_tool` folder:

    $ ./run_me.sh

The output should be something like this:

INFO: Created TensorFlow Lite delegate for GPU.

INFO: Initialized TensorFlow Lite runtime.

VERBOSE: Replacing 3 node(s) with delegate (TfLiteGpuDelegateV2) node, yielding 1 partitions.

INFO: Initialized OpenCL-based API.

INFO: Created 1 GPU delegate kernels.

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, NOTE: If in main.cpp you change line 22 to TfLiteModel* model = TfLiteModelCreateFromFile("./fp32_indirect_output.tflite"); (which means using the model with the fake branch) and build and run the project again, you will get non-zero (correct) results.
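When comparing runs of the two models, it can help to check the printed values programmatically instead of by eye. The following is a minimal sketch, assuming the tool prints the output tensor as a single comma-separated line after the log lines, as in the sample above. The helper names `parse_output_values` and `is_all_zero` are hypothetical and not part of this repo.

```python
def parse_output_values(log_text: str) -> list[float]:
    """Return the numbers from the last line made up only of comma-separated values.

    Assumption: log lines (INFO:, VERBOSE:, ...) never parse as pure numbers,
    so they are skipped automatically.
    """
    for line in reversed(log_text.splitlines()):
        tokens = [t.strip() for t in line.split(",") if t.strip()]
        if not tokens:
            continue  # blank line
        try:
            return [float(t) for t in tokens]
        except ValueError:
            continue  # not a pure list of numbers, e.g. a log line
    return []


def is_all_zero(values: list[float]) -> bool:
    """True only if at least one value was found and every value is zero."""
    return bool(values) and all(v == 0.0 for v in values)


if __name__ == "__main__":
    sample = (
        "INFO: Created TensorFlow Lite delegate for GPU.\n"
        "INFO: Created 1 GPU delegate kernels.\n"
        "0, 0, 0, 0, 0, 0, 0, 0, \n"
    )
    values = parse_output_values(sample)
    print("all zeros" if is_all_zero(values) else "non-zero output")
```

One way to use this is to capture the output of `run_me.sh` in a file and pass its contents to `parse_output_values`. A result of `True` from `is_all_zero` would correspond to the symptom described in this README.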