This is the official repository for the implementation of our ICIP 2021 publication, SM3D. The official version is available at https://ieeexplore.ieee.org/document/9506302. A preprint is available at https://arxiv.org/abs/2111.12643

@INPROCEEDINGS{9506302,

author={Li, Runfa and Nguyen, Truong},

booktitle={2021 IEEE International Conference on Image Processing (ICIP)},

title={SM3D: Simultaneous Monocular Mapping and 3D Detection},

year={2021},

pages={3652-3656},

doi={10.1109/ICIP42928.2021.9506302}}

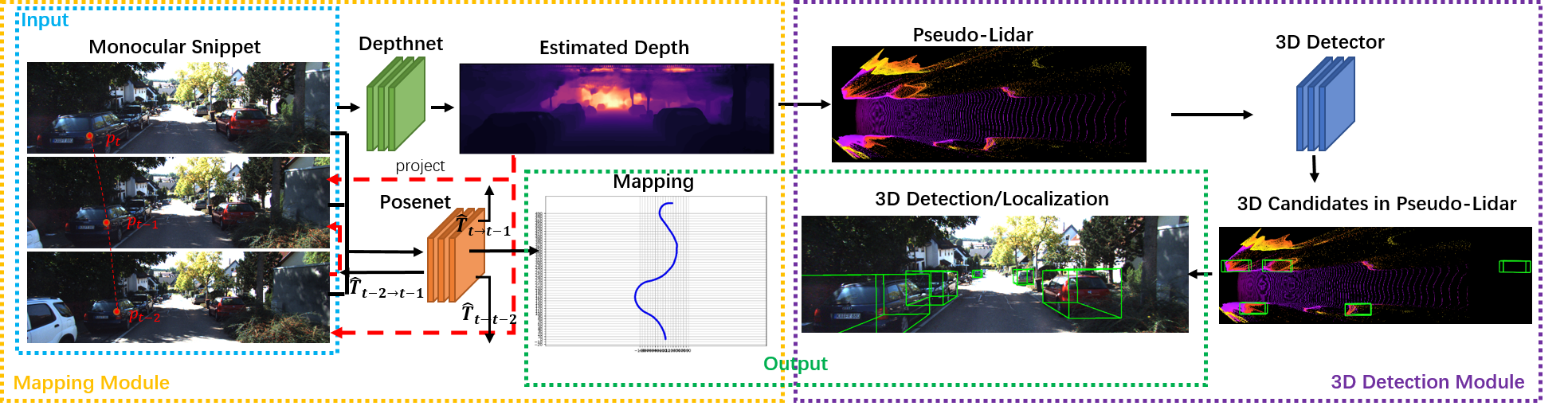

Mapping and 3D detection are two major problems in vision-based robotics and self-driving. While previous works address each task separately, we present an innovative and efficient multi-task deep learning framework (SM3D) for Simultaneous Mapping and 3D Detection, bridging the two tasks for the first time with robust depth estimation and a "Pseudo-LiDAR" point cloud. The Mapping module takes consecutive monocular frames and estimates depth and pose. In the 3D Detection module, the estimated depth is projected into 3D space to generate a "Pseudo-LiDAR" point cloud, on which a LiDAR-based 3D detector can be applied for vehicular 3D detection and localization. With end-to-end training of both modules, the proposed mapping and 3D detection method outperforms the state-of-the-art baseline by 10.0% and 13.2% in accuracy, respectively. While achieving better accuracy, our monocular multi-task SM3D is more than 2 times faster than a pure stereo 3D detector, and 18.3% faster than running the two modules separately.
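To illustrate the step in which estimated depth is projected into 3D space, here is a minimal sketch of back-projecting a depth map through a pinhole camera model. It is not taken from this repository: the function name `depth_to_pseudo_lidar`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the `max_depth` cutoff are assumptions made for illustration, and a practical implementation would likely vectorize the loops with an array library.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_pseudo_lidar(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_depth: float = 80.0,  # hypothetical cutoff in metres, not from the paper
) -> List[Point]:
    """Back-project a depth map (rows of per-pixel depths) into camera-frame points.

    Assumes a pinhole camera: pixel (u, v) with depth z maps to
    x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v indexes image rows
        for u, z in enumerate(row):      # u indexes image columns
            # Skip invalid (non-positive) or out-of-range depth values.
            if not (0.0 < z < max_depth):
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Usage: a tiny 2x3 depth map with one invalid pixel.
toy_depth = [[0.0, 10.0, 10.0],
             [10.0, 10.0, 10.0]]
cloud = depth_to_pseudo_lidar(toy_depth, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
```

The resulting points are in the camera frame. A LiDAR-based detector typically expects a LiDAR-style coordinate frame, so an additional rigid transform from the dataset calibration would be needed before detection.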

Overview of our SM3D. Mapping Module: Jointly learning and estimating depth and pose. 3D Detection Module: Jointly learning and estimating depth and 3D detection. Input: Monocular snippet. Output: Mapping/3D Detection & Location.

Here is a demo video of our SM3D tested on the KITTI dataset. The top video shows the original input monocular RGB frames with 3D detections, the middle video shows the depth estimation, and the bottom video shows the ego-motion mapping over time.
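An ego-motion map like the one in the bottom video can be built by chaining per-frame relative poses into a trajectory. The sketch below is an illustration only, not this repository's code. It assumes each relative pose is a 4x4 homogeneous matrix that maps points from frame t+1 into frame t, and the helper names `matmul4` and `accumulate_trajectory` are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def accumulate_trajectory(relative_poses: List[Matrix]) -> List[Tuple[float, float, float]]:
    """Chain relative poses into camera positions expressed in the first frame.

    Assumption: relative_poses[t] maps points from frame t+1 into frame t,
    so the global pose is updated by right-multiplication.
    """
    pose: Matrix = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    trajectory = [(0.0, 0.0, 0.0)]  # the first camera sits at the origin
    for rel in relative_poses:
        pose = matmul4(pose, rel)
        # The translation column of the global pose is the camera position.
        trajectory.append((pose[0][3], pose[1][3], pose[2][3]))
    return trajectory


# Usage: rotate 90 degrees about the y axis and move 1 unit along z, twice.
turn_and_step = [[0.0, 0.0, 1.0, 0.0],
                 [0.0, 1.0, 0.0, 0.0],
                 [-1.0, 0.0, 0.0, 1.0],
                 [0.0, 0.0, 0.0, 1.0]]
path = accumulate_trajectory([turn_and_step, turn_and_step])
```

Because monocular pose estimates are generally defined only up to scale, a trajectory accumulated this way may need to be scaled against ground truth before it is compared with metric odometry.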