-

Notifications

You must be signed in to change notification settings - Fork 249

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Logging information appears to overload the application-insights endpoint and kills app #1007

Comments

|

@paulgaskell

The problem is most likely similar to this issue, in which the ingestion endpoint is being overloaded. See this for an explanation and possible workaround.

You can try to extend the timeout of the log handler like so: If this doesn't work, you can using a custom logger to handle your logging of telemetry. The reason you are seeing these logs is because you are mostly likely wrapping the root logger with the azure log handler and so EVERY log that is made with the logging library is sent to app insights. See this example for how to setup a simple logger by name, so only logs under that logger will be sent.

We can possibly remove the warning messages in the actual handler whenever these errors occur, but they do provide some valuable debugging information. We could probably set the log levels of these messages to "DEBUG" so customers won't be able to see them if they don't explicitly set their loggers to that level. Would that be something that makes sense for your use case? |

|

@lzchen thanks for getting back to me. I think you are right it's overloading the endpoint, I ran some tests over the weekend when I hit my main api at 2 min intervals and 5 min intervals I see this behaviour, but not when I hit it at 10 min intervals. I've tried increasing the timeout, this doesn't work, I tried 30 seconds and 300 seconds. We are not putting the handler in the root logger we put it in the named loggers for each service - and I've seen this error when we put through like 200 log messages so I don't think the rate is really that high. What is backoff and fail strategy for the handler? Once the Azure endpoint connection times out does it just infinitely retry at 30 second intervals? What about the new logging info does this just pile up in memory as it retrys? |

Interesting, if you are using named loggers, they should not be logging logs from the SDK since it is using it's own named logger.

The default export interval is every 15s, meaning the logs that are batched up will try to be sent every 15s. If the connection times out for a "send", it will be stored in local storage (in the file system), and will be sent with the next batch if possible (if does not exceed max batch size which is 100). This will be retried infinitely until it is either sent successfully, or the failed telemetry exceeds the retention period and gets removed (default 7 days). There is no backoff strategy for the handler because we do not have an SLA for the ingestion service. With that being said, it is expected for some telemetry to be retried due to the ingestion endpoint being overloaded. However, is the current issue still the spamming of the "log error" message, or is it okay since the rate isn't that high? |

|

@lzchen hmm then maybe we are doing something wrong on init of the logger. Pseudocode for what we do at the moment is This is that same for all loggers for all services on the platform. Is this not the intended use? Ok - the behaviour I see is that after running the service for a couple of hours that warning appears in the container logs, CPU utilisation for that pod jumps to max and stays there indefinitely and the service stops responding to requests. So something is eating all the CPU and preventing traffic to the container but all I see in the logs are those warning messages that it is retrying. Any ideas? |

Hmm, that looks totally find to me. The weird part is that the logger that is responsible for sending out the "WARNING" messages uses a separate named logger as well. So unless you are using the same name as that (which is unlikely because we use name, or you have the handler attached to the root logger, it should not be sending these logs as telemetry to appinsights. So something is eating all the CPU and preventing traffic to the container but all I see in the logs are those warning messages that it is retrying. Any ideas? It could possibly be either CPU is being taken up by the actual retrying of the failed to send telemetry (the REAL logs you want to send), or CPU is being taken up by sending the "spammed logs" (the WARNINGS). We should find out which of these are the case. If it is the former, there might be an issue with the way we are handling retry logic. If it is the latter, we have to find out what is causing these "spammed logs" to be sending in the first place and how to actually omit them from being sent. I think a way you can test the above is to actually set your logger's logging level to CRITICAL, and change ALL of the "normal logs" (the ones that you actually want to see telemetry for) to use |

|

@lzchen - ah no sorry those are the container logs not the app-insights logs so that makes sense. Ok will give the critical thing a go in the morning and get back to you - thank you for the suggestion. |

|

@lzchen - been running this morning with Is there some way of me setting the default log behaviour of the Opencensus package, something like |

I believe Python logging library logging levels are defined in such a way that the LOWER levels are ignored, so I think you would want to set your normal logs to WARNING and the AzureLogHandler to INFO or DEBUG. I believe the name was use for the logger that is responsible for those "spammed messages" is |

|

@lzchen - bit more information. I was wrong before after 6 hours running ok it broke again - same behaviour as on the initial graph where the CPU for the service spikes to 99% and we can't make new connections to the service and have to kill it. This time we saw no log messages as these were suppressed. I then set the log level for the opencensus root logger to critical only by calling This suppressed the opencensus logs but again had the same issue. Finally I tried removing the AzureHandler from the service that kept breaking, this ran for about 12 hours then we had the same issue as before on one of the other services that still has AzureHandler on it but get lower traffic. So I think the log messages are a symptom and not the issue here. There is some process that is failing and causing AzureHandler to massively spike in CPU usage. I'm going to set the AzureHandler logs to debug and see what comes out this morning. But again any help/suggestions would be welcome. |

|

Adding a bit more colour - this is the method error that throws the error when the issue starts; line 46 in transport It is hitting the second catch all exception with

Which seems to suggest the Azure endpoint drops. If this throws an error I think it gets kicked back to line 68 in BaseLogHandler here which is called from Worker here. that Worker thread is running an infinite |

|

@paulgaskell Something that may help with your use case is if we disable retry logic altogether. You may have some dropped telemetry due to overloaded endpoint but it might help with the cpu usage. Would that be something that would work for your business logic? |

|

Hi @lzchen |

|

@elsahu |

|

@lzchen |

|

@lzchen Hi Leighton, were you able to diagnose this, we would like to try out any release which can resolve this behaviour. Thanks |

|

@varunbalupuri |

|

Any update on this issue? In our case we only experience this issue from our cluster. When I run locally, this error doesn't appear (even though exactly at the same time the service running in the cluster gets this error). Does App Insights limit number of requests from a specific IP? |

|

@TalalMahmoodChaudhry |

|

Thanks. Is there any way to increase the limit? |

|

@TalalMahmoodChaudhry |

|

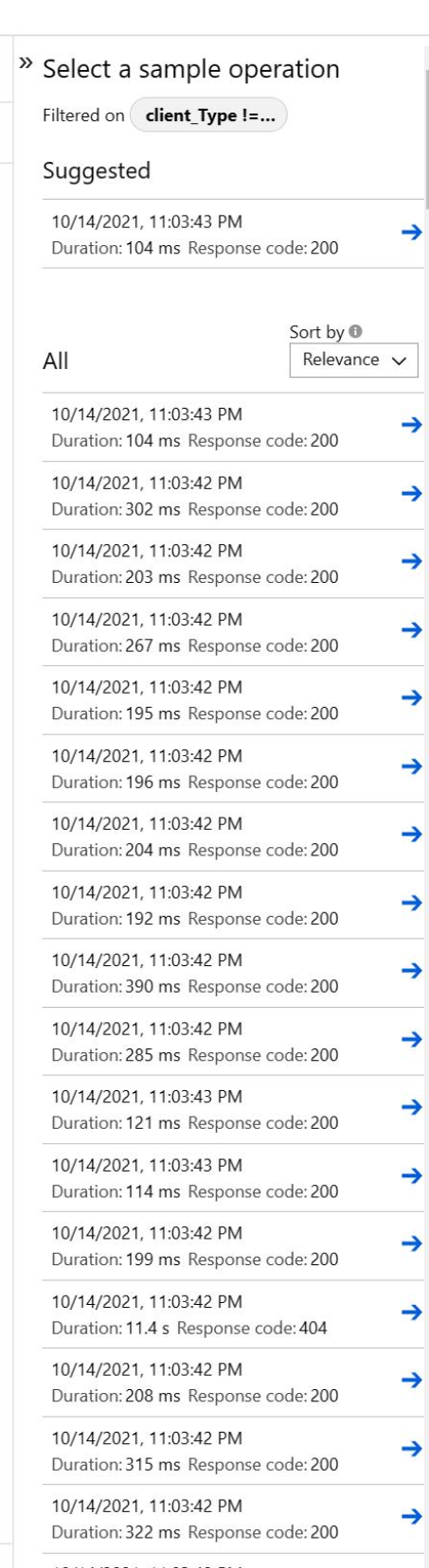

We have hit a similar issue. Running on Azure Web Apps with no load - just a health probe at random times we get CPU spikes and the app stops responding. CPU usage can bee seen here that correlates with the halt in requests: This is a separate deployment, similar behavior: This has only happened since adding this code: microsoft/AzureTRE#1039 Initially I thought it was ddos, but just seems to be request being logged multiple times, there were about 5000 requests logged in a short period of time (we only have the app gw health probe running): @lzchen any guidance would be appreciated - it might be an issue with our code. I will send you an IM - we have this deployed, happy to modify code and to help troubleshoot. |

|

@marrobi |

|

Seems to have been fixed with tiangolo/fastapi#433 (comment) |

We are using Python 3.6 deployed on Azure Kubernetes, we've just implemented the AzureHandler as advised in the docs and are sending logging information with messages and some custom dimensions. This all works fine, we see all the information coming up in app-insights as expected. We also run an integration testing service that pings all our APIs in the background so the logging is continuously being recorded throughout the day.

We are seeing one of our services getting overloaded with what appear to be AzureHandler warnings that block traffic to this container. In the container logs this shows up like;

This occurs with spikes in CPU usage for this container like;

So my guess is there is either a bad message being created that is continuously retried and backs up traffic, or the endpoint just gets overloaded and backs up traffic. I would expect/like that there is some way we can control this behaviour through AzureHandler params somehow.

So my question is 3 fold really - do you know what is causing this to happen? What can we do to mitigate it? And should there be some default handling of this situation in the Handler do you think?

The text was updated successfully, but these errors were encountered: