Opening corrupt Aperio SVS file causes Out-of-Memory error #102

Comments

|

Thank you. You are right, unpacking bytes or ASCII type values is very inefficient and not necessary. This is going to be fixed in the next version. |

|

Thanks for the quick reply. And thank you so much for all the great work you pour into tifffile! |

|

I'm not able to reproduce this with a simple example:

`tifffile.imwrite('test.tif', shape=(1,1), dtype='uint8', extratags=[(1, 2, 3942661888, b'1'*3942661888)])`

Opening the file with TiffFile uses ~4GB. The peak usage is ~8GB, so at some point there's a copy made, probably during decoding bytes to string:

`t = TiffFile('test.tif')`

In the case of your corrupted file with 3126 tags, there are probably many large tag values such that the process is running out of memory at some point. |
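The doubling from ~4GB to ~8GB is what one would expect if the raw bytes and the decoded string are alive at the same time. A scaled-down sketch using only the standard library (the 10 MB size and the variable names are assumptions for illustration, and this does not reproduce tifffile's actual code path):

```python
import tracemalloc

n = 10_000_000  # scaled-down stand-in for the ~4 GB tag value

tracemalloc.start()
raw = b'1' * n               # bytes as they would be read from the file
text = raw.decode('ascii')   # decoding allocates a second, equally large object
current, peak = tracemalloc.get_traced_memory()
tracemalloc.stop()

print(f'value: {n / 1e6:.0f} MB, peak traced: {peak / 1e6:.0f} MB')
```

While both `raw` and `text` are referenced, the traced peak is at least twice the size of the value.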

|

That sounds plausible. I'll try to build a test case for this file and will report back. What would be a good way to determine that a file is corrupt without risking running out of memory on a machine? |

I just implemented that too, setting the limit to 8, but there really is no guarantee that will work for corrupt files. Also, it would be nice to at least inspect the structure of corrupt tags. |
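One way to inspect the tag structure without loading any values is to read only the 12-byte entries of the first IFD. The sketch below is a hypothetical helper, not part of tifffile: the name `scan_first_ifd`, the `max_value_bytes` default, and the problem labels are all assumptions, and it only handles classic (non-BigTIFF) files.

```python
import os
import struct

# Byte sizes of the TIFF 6.0 data types 1..12 (BYTE, ASCII, SHORT, LONG, RATIONAL, ...).
TYPE_SIZES = {1: 1, 2: 1, 3: 2, 4: 4, 5: 8, 6: 1, 7: 1, 8: 2, 9: 4, 10: 8, 11: 4, 12: 8}


def scan_first_ifd(path, max_value_bytes=2**24):
    """Return (tagno, code, dtype, count, problem) for suspicious tags.

    Hypothetical helper: reads only the 12-byte entries of the first IFD
    of a classic TIFF file and never loads a tag value.
    """
    filesize = os.path.getsize(path)
    problems = []
    with open(path, 'rb') as fh:
        head = fh.read(8)
        byteorder = {b'II': '<', b'MM': '>'}.get(head[:2])
        if byteorder is None or len(head) < 8:
            raise ValueError('not a TIFF file')
        magic, ifd_offset = struct.unpack(byteorder + 'HI', head[2:8])
        if magic != 42:
            raise ValueError('not a classic TIFF file')
        fh.seek(ifd_offset)
        (tagcount,) = struct.unpack(byteorder + 'H', fh.read(2))
        entries = fh.read(12 * tagcount)
    # Only complete 12-byte entries are examined if the file is truncated.
    for tagno in range(len(entries) // 12):
        code, dtype, count, offset = struct.unpack_from(
            byteorder + 'HHII', entries, 12 * tagno
        )
        size = TYPE_SIZES.get(dtype)
        if size is None:
            problem = 'unknown data type'
        elif size * count > max_value_bytes:
            problem = 'value larger than limit'
        elif size * count > 4 and offset + size * count > filesize:
            problem = 'value extends past end of file'
        else:
            continue
        problems.append((tagno, code, dtype, count, problem))
    return problems
```

A file-size check alone would not flag the tag from the original report: its claimed range (offset 462554421 plus 3942661888 bytes) still lies inside the 5958078846-byte file, so an explicit size limit is what catches it here. The limit itself is arbitrary and would need tuning for files with legitimately large tags.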

|

Do you know the codes and types of the three large tags? I don't think it's the ASCII one mentioned earlier that's causing the issue. Maybe one of the large tag values is getting unpacked to Python integers. |
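To illustrate why unpacking to Python integers would be so much more expensive than keeping raw bytes, here is a small comparison. It is a sketch with an arbitrary size of one million values and says nothing about which tifffile code path is actually involved:

```python
import struct
import sys

n = 1_000_000
raw = b'\x01' * n                       # tag value kept as raw bytes
values = struct.unpack(f'<{n}B', raw)   # one tuple slot per unpacked value

print(sys.getsizeof(raw), sys.getsizeof(values))
```

On a 64-bit CPython build the tuple needs about 8 bytes per element for the pointers alone, before counting separate int objects for values outside the small-integer cache.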

|

You're right: |

|

Should be mitigated by v2021.11.2, which delay-loads non-essential TIFF tag values. |

|

Tested with all corrupted files that were problematic and it works perfectly now. |

Hi @cgohlke

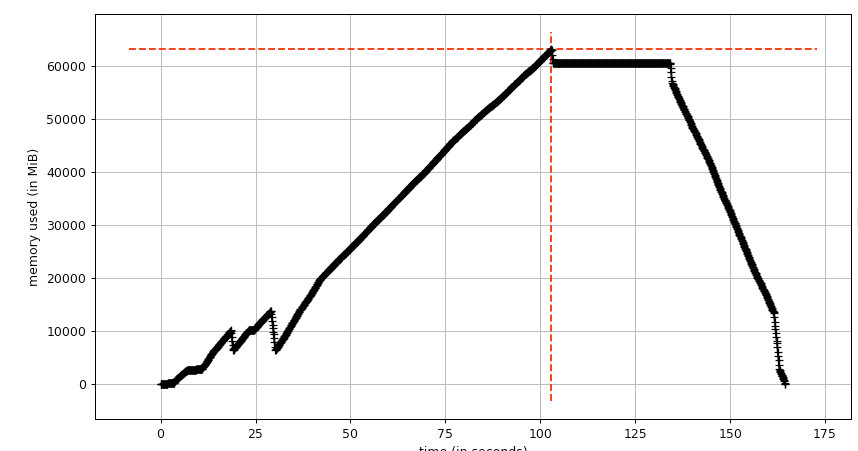

I encountered a corrupted Aperio SVS file that causes a machine with 64GB of RAM to run out of memory when trying to instantiate the TiffFile instance. Creating the instance causes a dramatic increase in process memory usage in the TiffTag loading part of tifffile, which causes the server to kill the Python process.

Ideally I would expect the code to just crash early, so that cleanup can happen in Python.

Sadly I can't share the file, so here's some additional information:

The file size is 5958078846 bytes and it's recognized as `TIFF.CLASSIC_LE`.

tifffile detects `tagno=3126` tags, of which almost all raise an unknown tag data type error.

Then for tag `tagno=2327` it calls `TiffTag.from_file` with `offset=3256275384` and `header=b'\x01\x00\x02\x00\x00?\x00\xeb5\x05\x92\x1b'`, which due to the large `count` causes a ~4,000,000,000-character string to be unpacked later in this code path and, due to some string operations, causes a massive increase in memory consumption.

I was wondering if there are upper bounds on the amount of data a TiffTag can contain, so that this code path could error early.
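For reference, the 12-byte header quoted above can be decoded with the standard `struct` module, assuming the classic little-endian TIFF entry layout (2-byte code, 2-byte type, 4-byte count, 4-byte value or offset). This is only a sketch for inspecting the entry, not tifffile's own parsing code:

```python
import struct

header = b'\x01\x00\x02\x00\x00?\x00\xeb5\x05\x92\x1b'

# Classic TIFF, little-endian IFD entry:
# code (H), data type (H), count (I), value or offset (I).
code, dtype, count, value_offset = struct.unpack('<HHII', header)

print(code, dtype, count, value_offset)
```

Data type 2 is ASCII in the TIFF specification, so this entry claims a string of 3942661888 bytes.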

Cheers,

Andreas 😃
