New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

maxProcessing config parameter in ns instead of ms #1132

Comments

|

That However, I think you should keep The If you have enabled JMX, can you take a |

|

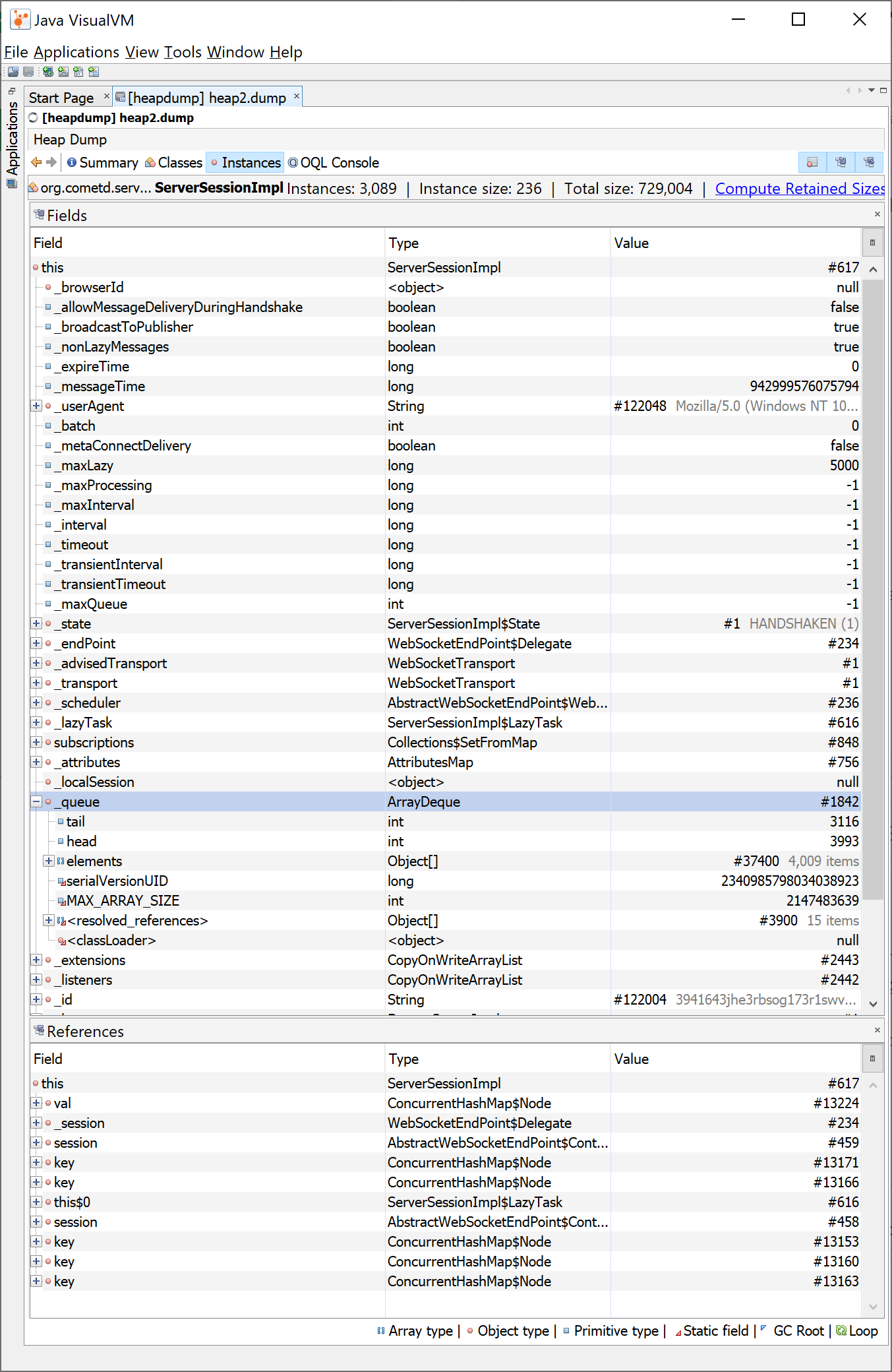

I'm using the default value of maxInterval (10000), but it does not seem to remove all old sessions. I haven't enabled JMX, but I used jattach to dump the heap: For example, here is a ServerSessionImpl from a websocket client that appears to no longer be connected, with 4009 ServerMessageImpl instances in the It has expireTime of 0. Based on the code here, it looks like I need to use maxProcessing in order to get CometD to sweep the session: Line 158 in d4536b9

|

|

This may be a symptom of a different problem. What transport do you use? Can you also take a thread dump? The only time that The extreme where this time becomes excessively long is when there are a ton of messages to write, the connection goes TCP congested, and the client does not read or otherwise communicate with the server, so |

|

We are using the default transports. The client shown in the heap dump above appears to be using the WebSocketTransport. My guess is that this happens when a client connects and then vanishes for one reason or another. We then continue publishing messages to channels that the client had subscribed to, which get added to the ServerSessionImpl's _queue but the session never expires. Here is the thread dump (from the same time as the heap dump above): |

|

It would really help to understand the state of the sessions if you can take a I would like to understand how things ended up in the situation you're facing. |

|

I can't share the heap dump since it is from our production servers and contains some sensitive information. I could run OQL in VisualVM to extract specific information from the heap dump. |

|

It seems plausible that the orphaned ServerSessionImpl instances might be associated with the same aborted Websocket connections that generate an EofException in #1094 (just speculating) |

|

While you can be TCP congested and leave sessions with When the idle timeout fires on the server, the connection is closed and those blocked writes are aborted, which means that The JVM stack trace you posted above shows a completely idle server, which is probably the result of idle timeouts, which would then have set #1094 is an issue about logging, so while I understand that it's your same case, I still struggle at understanding how it is possible that you have sessions with What is your idle timeout value (on the Jetty server connector)? |

|

After enabling the maxProcessing setting, I'm no longer seeing a rapid increase in memory usage. I can see from the logs that the swept sessions are all in the HANDSHAKEN state (e.g. |

|

@youngj I have investigated this further and found a bug, filed as #1134. Note that this session went through 268422 What clients are you using? Also, any reason to subclass |

|

I subclassed ServerSessionImpl to patch the maxProcessing time interval bug so I could fix the memory leak without needing to compile a patched version of CometD. The memory leak occurred when I was using ServerSessionImpl. MyServerSessionImpl.java: public class MyServerSessionImpl extends ServerSessionImpl {

private static final Logger _logger = LoggerFactory.getLogger(MyServerSessionImpl.class);

private static Field maxProcessingField;

static {

try {

maxProcessingField = ServerSessionImpl.class.getDeclaredField("_maxProcessing");

maxProcessingField.setAccessible(true);

} catch (Exception ex) {

_logger.warn("error getting _maxProcessing field", ex);

}

}

public MyServerSessionImpl(BayeuxServerImpl bayeux) {

super(bayeux);

}

@Override

protected boolean handshake(ServerMessage.Mutable message) {

boolean res = super.handshake(message);

try {

long maxProcessing = (long) maxProcessingField.get(this);

if (maxProcessing > 0) {

maxProcessing = maxProcessing * 1000000;

maxProcessingField.set(this, maxProcessing);

}

} catch (Exception ex) {

_logger.warn("error updating _maxProcessing field", ex);

}

return res;

}

}MyBayeuxServerImpl.java: public class MyBayeuxServerImpl extends BayeuxServerImpl {

@Override

public ServerSessionImpl newServerSession() {

return new MyServerSessionImpl(this);

}

}MyCometDServlet.java: public class MyCometDServlet extends CometDServlet {

@Override

protected BayeuxServerImpl newBayeuxServer() {

return new MyBayeuxServerImpl();

}

}The cycle printed in the logs is a property of the scheduler that appears to always increase over time, not a number of cycles for that particular session. The client is a version of the CometD JavaScript library that is probably from CometD 2.6. Normally it does follow |

|

We are seeing similar issues after upgrading from 5.0.2 to 5.0.10. The symptoms are similar: after running a server for several days some ServerSessionImpl are not removed. It doesn't happen to all sessions though. When we stop new incoming connections to the server the amount of server sessions doesn't drop to zero even after many hours. Our current way of coping with this is to set a max queue option so that we can clean at least some sessions. Configuration:

Clients run cometd 5.0.10 from npm. We couldn't find the root cause and couldn't replicate the issue ourselves but we have an assumption: clients initiate a connection but then a page reload happens hence leaving session in an unfinished setup. There could be several consequent page reloads. An example of a session that hit max queue limit despite us setting maxInterval (notice that lastConnected is null) session.setAttribute( "startedAt", OffsetDateTime.now() );

session.addListener( new ServerSession.QueueMaxedListener() {

@Override

public boolean queueMaxed( ServerSession session, Queue<ServerMessage> queue, ServerSession sender,

ServerMessage message ) {

LOG.warn(

"=====>>> Session queue maxed for cometdSession {}."

+ " Disconnecting cometd session isConnected={} isHandshook={} startedAt={} lastConnected={}.",

cometdSessionId,

session.isConnected(), session.isHandshook(),

session.getAttribute( "startedAt" ), session.getAttribute( "lastConnected" ) );

session.disconnect();

return false;

}

} );

session.addListener( new ServerSession.HeartBeatListener() {

@Override

public void onResumed( ServerSession session, ServerMessage message, boolean timeout ) {

// This is called when server sends /meta/connect to the client

}

@Override

public void onSuspended( ServerSession session, ServerMessage message, long timeout ) {

// This is called when server receives /meta/connect response from the client

session.setAttribute( "lastConnected", OffsetDateTime.now() );

}

} );We will be interested to test the latest fixes once they are released to maven central. |

|

In order to test the fixes I would also need a new version to be released to Maven Central. |

When running CometD 7.0.5 in production, CometD was continually increasing its memory usage. From a heap dump, it appears that much of the memory usage was due to old ServerSessionImpl instances holding references to a large number of ServerMessageImpl instances and their related data. I thought that CometD would use the

timeoutconfiguration parameter to remove old ServerSessionImpl instances, but it appears that this is not the case. The CometD documentation specifies amaxProcessingsetting at https://docs.cometd.org/current/reference/#_java_server_configuration which seems like it would resolve this apparent memory leak: "The maximum period of time, in milliseconds, that the server waits for the processing of a message before considering the session invalid and removing it."However, the actual implementation adds the value from maxProcessing setting to a time which is measured in nanoseconds:

cometd/cometd-java/cometd-java-server/cometd-java-server-common/src/main/java/org/cometd/server/ServerSessionImpl.java

Line 159 in d4536b9

cometd/cometd-java/cometd-java-server/cometd-java-server-common/src/main/java/org/cometd/server/BayeuxServerImpl.java

Line 1298 in d4536b9

As a result the maxProcessing setting would also need to be defined in nanoseconds, not milliseconds as specified in the documentation.

Although ServerSessionImpl uses a

longfor the_maxProcessingvariable, setting the maxProcessing config setting larger than 2^31 nanoseconds causes a NumberFormatException which causes CometD to not work at all.Tested with:

How to reproduce:

Set maxProcessing configuration setting to 2100000000 and verify that sessions are purged every 2.1 seconds:

Set maxProcessing configuration setting to 2200000000 and verify that clients are unable to connect at all:

The text was updated successfully, but these errors were encountered: