Is it possible to run coturn on Kubernetes? #738

|

I think it's possible, because I can run it in Docker using host networking, but I can't say for sure. I have a project I like that uses the woahbase/alpine-coturn image, and I find it a simple way to run coturn in Docker. If you'd like to try it, this is the repo: http://gitlab.com/jam-systems/jam |

|

@geovanipfranca thanks for the answer! I have now succeeded in running coturn on Kubernetes. |

|

Would you mind sharing what you've done and what difficulties you identified in the process? |

|

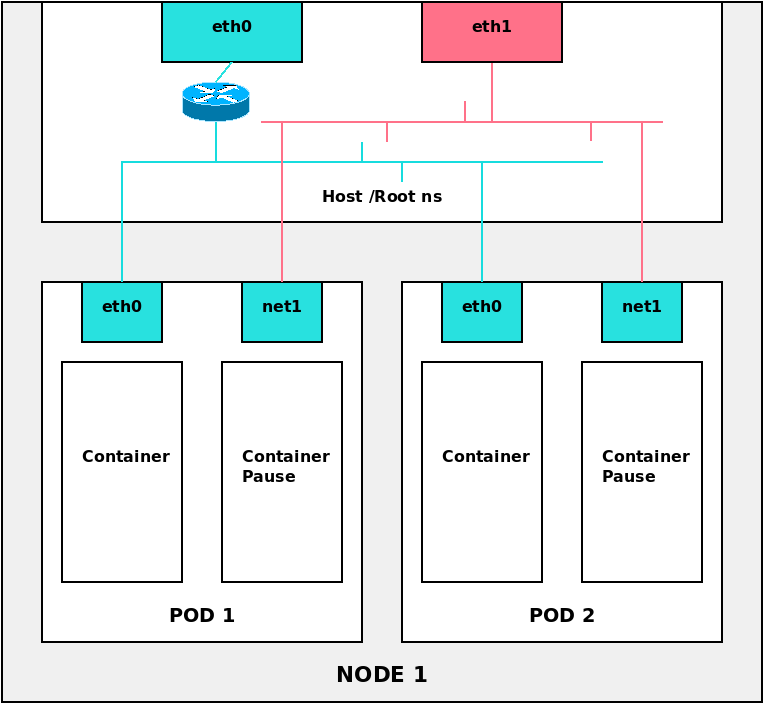

There are multiple ways. @fcecagno I think there is a more scalable solution, which I will describe here. To avoid one pod per node, you can also use k8s with Multus + the Whereabouts CNI + SBR (source-based routing). 192.168.121.0/24 is a global IPv4 address range. Config map: Pod config: It is a directly switched/routed interface for coturn. coturn should listen only on the Multus secondary interface (net1), and the management CLI should listen on the normal k8s interface (eth0). Take care with IP filtering on net1! |
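The config map and Pod config from this comment are not reproduced above. As a rough illustration of the approach only, and not the commenter's actual configuration, here is a minimal Python sketch that builds a Multus `NetworkAttachmentDefinition` chaining a `macvlan` interface (with Whereabouts IPAM) and the `sbr` plugin, plus the Pod annotation that requests it. The attachment name `coturn-public`, the host interface `eth1`, and the choice of `macvlan` are all assumptions made for the example.

```python
import json

# Hypothetical values: neither name comes from the thread.
ATTACHMENT_NAME = "coturn-public"
HOST_INTERFACE = "eth1"


def build_network_attachment(name, master, ip_range):
    """Build a NetworkAttachmentDefinition manifest as a plain dict."""
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "plugins": [
            {
                # Secondary interface attached to the host NIC (assumed macvlan).
                "type": "macvlan",
                "master": master,
                "mode": "bridge",
                # Whereabouts hands out addresses from the given range.
                "ipam": {"type": "whereabouts", "range": ip_range},
            },
            # Source-based routing for traffic from the secondary interface.
            {"type": "sbr"},
        ],
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_annotations(name):
    """Annotation that asks Multus to attach the secondary network to a Pod."""
    return {"k8s.v1.cni.cncf.io/networks": name}


if __name__ == "__main__":
    manifest = build_network_attachment(
        ATTACHMENT_NAME, HOST_INTERFACE, "192.168.121.0/24"
    )
    print(json.dumps(manifest, indent=2))
    print(json.dumps(pod_annotations(ATTACHMENT_NAME), indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML. coturn would then be pointed at the address assigned to `net1`.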

|

Hi all. Earlier, my company had a problem with a specific Azure public IP. In addition, k8s doesn't provide a single IP address for both TCP and UDP with a LoadBalancer-type Service. So I created a public UDP IP for 3478 and an internal IP for 3478 (UDP/TCP) and 30000-65535 (UDP). Thanks for helping me, guys. |

|

@nowjean Yes, it could work, but running the media through NAT is not very efficient: the middleboxes and the NAT add extra latency and jitter. Please also consider that in the setup you described, if I understand correctly, the media (RTP/RTCP) ports are behind a NAT, which can cause issues if the ICE agent's connection check frequency and the NAT's connection keep-alive timeout do not match. AFAIK the NAT and connection tracking keep the ports open only because of the ICE STUN connection checks, so problems may occur if the interval between keep-alive STUN checks is longer than the NAT connection tracking timeout. This depends only on the ICE agent's connection check frequency and the NAT box's timeout value. Hopefully they match, but we should keep this possibility in mind in such a setup. |
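The timing condition described in this comment can be written down as a small check. This is a minimal sketch: the function name and the example numbers are hypothetical, and the real values depend on the ICE agent and the NAT box in use.

```python
def binding_stays_open(keepalive_interval_s: float, nat_timeout_s: float) -> bool:
    """Return True if STUN keep-alives arrive before the NAT mapping expires.

    The NAT drops a UDP mapping after nat_timeout_s seconds without traffic,
    so each keep-alive has to be sent before that timer runs out.
    """
    if keepalive_interval_s <= 0 or nat_timeout_s <= 0:
        raise ValueError("intervals must be positive")
    return keepalive_interval_s < nat_timeout_s


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    print(binding_stays_open(keepalive_interval_s=15, nat_timeout_s=30))
    print(binding_stays_open(keepalive_interval_s=60, nat_timeout_s=30))
```

In the second call the keep-alive interval is longer than the NAT timeout. That is the failure case the comment warns about: the mapping can expire between checks and the media path breaks.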

|

@misi Anyway, my coturn server is not behind NAT; it runs on AKS (Azure Kubernetes Service), and my client is behind NAT (like a company network). coturn is deployed as a k8s Deployment with 3 replicas. I ran a long-running test of the coturn server with Licode for more than 4 hours. Everything works fine. Network traffic was load-balanced across the pods and the connection was perfect. The Trickle ICE test also passed (https://webrtc.github.io/samples/src/content/peerconnection/trickle-ice/). The advantage of coturn on Kubernetes is not only easy deployment but also fewer public IPs. AFAIK coturn needs one public IP per server. But on k8s only one public IP is needed, because each pod has an internal IP and a k8s Service can load-balance across the pods. I have not tested autoscaling of coturn pods yet. |

|

@nowjean I got coturn working for Chrome, but it fails on Firefox. Thank you! |

|

Hi @nowjean, I intend to deploy something similar on AKS too. Just curious: do you have a load balancer sitting in front of your AKS cluster for WebRTC traffic? |

|

@robincher No. I deployed the load balancer inside AKS. To be precise, it is not a standalone load balancer but a Kubernetes Service of type LoadBalancer. |
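For readers unfamiliar with the term, a Kubernetes Service of type LoadBalancer looks roughly like the manifest built below. This is a minimal sketch, not the manifest used in this thread: the Service name `coturn-udp` and the selector label `app: coturn` are assumptions, and only the TURN listening port 3478 over UDP is exposed.

```python
import json


def build_turn_service(name, selector, port=3478, protocol="UDP"):
    """Build a LoadBalancer-type Service manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Asks the cloud provider to provision an external load balancer.
            "type": "LoadBalancer",
            # Traffic is spread across all pods carrying these labels.
            "selector": selector,
            "ports": [
                {
                    "name": f"turn-{protocol.lower()}",
                    "protocol": protocol,
                    "port": port,
                    "targetPort": port,
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical name and label, for illustration only.
    service = build_turn_service("coturn-udp", {"app": "coturn"})
    print(json.dumps(service, indent=2))
```

The printed JSON can be applied with `kubectl apply -f`. The Service then load-balances across every pod of the Deployment that matches the selector.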

|

@nowjean all right, got it :) Basically it's the managed load balancer that is created along with AKS. I'll keep it simple for now. |

|

https://github.com/dharmendrakariya/coturn-on-kubernetes Please have a look, this might help. |

Hi, |

|

@russovincenzo Hi, I have a Helm chart for coturn, but it is too long to post in a comment. I think @dharmendrakariya's YAML files are almost the same as mine (https://github.com/dharmendrakariya/coturn-on-kubernetes). The differences are the Deployment and the Service type, which depend on your environment. [Service Type] [Deployment] Thanks. |

Hi.

I am trying to deploy coturn on AKS (Azure Kubernetes Service), using Licode as the media server. (K8s Deployment, 3 replica pods)

It seems to work well. But clients behind a NAT (like a company network) can't connect to the coturn server correctly.

I have already opened the firewall for 3478 UDP/TCP and UDP ports 30000-65532.

If I move coturn from Kubernetes to an Azure VM, it works well from the company network.

Actually, in Kubernetes there is no way to get a single public IP that supports TCP and UDP ports simultaneously.

So I have two public IPs, and I set the UDP IP as the external IP in turnserver.conf.

Everything works well (load balancing to the coturn Deployment, streaming, and so on) except in the NAT environment.

If I use only the UDP IP, is it possible to run coturn (with the no-tcp option)?

Or is there a setting for two IPs in the coturn config?
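To make the two options in the question concrete, here is a minimal Python sketch that renders the relevant `turnserver.conf` lines. It relies on `listening-port`, `min-port`, `max-port`, `external-ip` (which can be repeated in `public-ip/private-ip` form) and `no-tcp` being existing coturn options. The IP addresses are hypothetical documentation addresses, and the sketch does not claim that either variant resolves the NAT problem described above.

```python
def render_turnserver_conf(ip_mappings, listening_port=3478,
                           min_port=30000, max_port=65532, no_tcp=False):
    """Render a turnserver.conf fragment.

    ip_mappings is a list of (public_ip, private_ip) pairs; each pair becomes
    one external-ip line in public/private form.
    """
    if min_port > max_port:
        raise ValueError("min_port must not exceed max_port")
    lines = [
        f"listening-port={listening_port}",
        f"min-port={min_port}",
        f"max-port={max_port}",
    ]
    for public_ip, private_ip in ip_mappings:
        lines.append(f"external-ip={public_ip}/{private_ip}")
    if no_tcp:
        # Disables the TCP client listener, leaving UDP only.
        lines.append("no-tcp")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    udp_only = render_turnserver_conf([("203.0.113.10", "10.0.0.4")], no_tcp=True)
    two_ips = render_turnserver_conf(
        [("203.0.113.10", "10.0.0.4"), ("203.0.113.11", "10.0.0.5")]
    )
    print(udp_only)
    print(two_ips)
```

The first call corresponds to the UDP-only idea with `no-tcp`. The second shows how two public addresses could be listed, one `external-ip` line per public/private pair.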

Sorry for the long explanation and the silly question.

Thanks.
