Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

1 parent 1ec73a0, commit 1f0ae54

Showing 19 changed files with 562 additions and 2 deletions.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,2 +1,25 @@ | ||

| # Self-Driving-Car- | ||

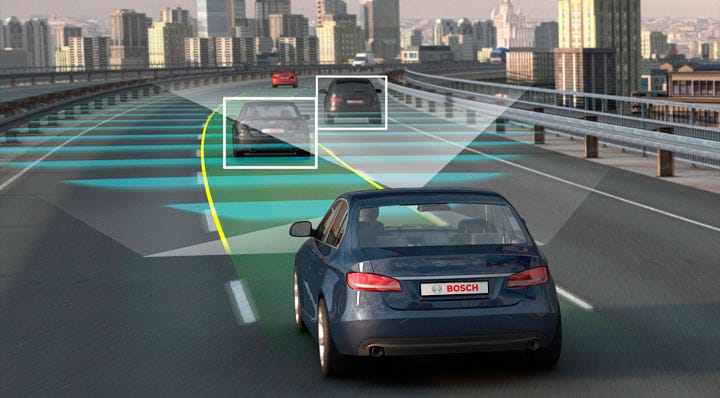

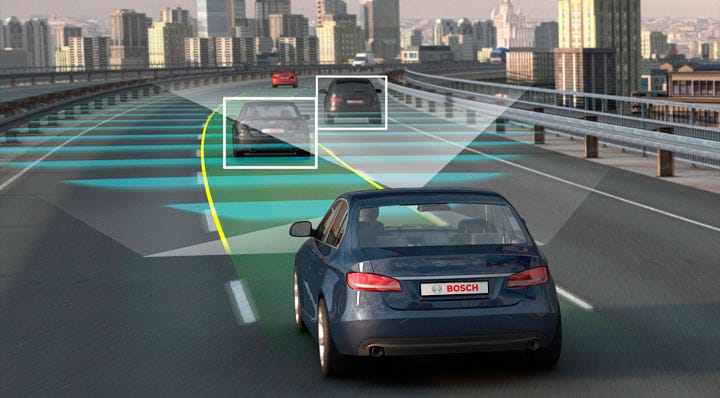

| A End to End Model which predicts the steering wheel angle based on the video/image | ||

| # Self Driving Car (End to End CNN/Dave-2) | ||

|  | ||

| Please refer to the [Self Driving Car Notebook](./Self_Driving_Car_Notebook.ipynb) for complete information and visualizations. | ||

|

|

||

| A TensorFlow/Keras implementation of this [Nvidia paper](https://arxiv.org/pdf/1604.07316.pdf) with some changes. | ||

|

|

||

| ### How to Use | ||

| Download the dataset by Sully Chen: [https://drive.google.com/file/d/0B-KJCaaF7elleG1RbzVPZWV4Tlk/view] | ||

| Size: 25 min x 60 s/min x 30 fps = 45,000 images, about 2.3 GB | ||

|

|

||

| Note: If you are short on compute resources, you can skip training and run the pretrained model. | ||

|

|

||

| Use `python3 train.py` to train the model | ||

|

|

||

| Use `python3 run.py` to run the model on a live webcam feed | ||

|

|

||

| Use `python3 run_dataset.py` to run the model on the dataset | ||

|

|

||

| To visualize training with TensorBoard, run `tensorboard --logdir=./logs`, then open http://0.0.0.0:6006/ in your web browser. | ||

|

|

||

| ### Credits & Inspired By | ||

| (1) https://github.com/SullyChen/Autopilot-TensorFlow<br> | ||

| (2) Research paper: End to End Learning for Self-Driving Cars by Nvidia. [https://arxiv.org/pdf/1604.07316.pdf]<br> | ||

| (3) Nvidia blog: https://devblogs.nvidia.com/deep-learning-self-driving-cars/ <br> | ||

| (4) https://devblogs.nvidia.com/explaining-deep-learning-self-driving-car/ |
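The dataset size quoted in the README can be checked with a few lines of arithmetic. The following is only a sketch built from the README's own figures (25 minutes, 30 fps, about 2.3 GB); the constant names and the decimal conversion of 1 GB = 10^6 KB are assumptions made for illustration, and the real image count in `data.txt` may differ slightly.

```python
# Figures taken from the README; the variable names are illustrative only.
MINUTES = 25
SECONDS_PER_MINUTE = 60
FRAMES_PER_SECOND = 30
DATASET_SIZE_GB = 2.3  # approximate size stated in the README

# One image is stored per video frame.
num_images = MINUTES * SECONDS_PER_MINUTE * FRAMES_PER_SECOND

# Average size per image, assuming decimal units (1 GB = 10**6 KB).
avg_image_kb = DATASET_SIZE_GB * 10**6 / num_images

print("images:", num_images)
print("average image size (KB): %.1f" % avg_image_kb)
```

An average of roughly 50 KB per frame suggests that the images are stored compressed, which is one reason the loader reads them from disk batch by batch instead of holding everything in memory.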

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,240 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "colab": { | ||

| "autoexec": { | ||

| "startup": false, | ||

| "wait_interval": 0 | ||

| } | ||

| }, | ||

| "colab_type": "code", | ||

| "collapsed": true, | ||

| "id": "XHFnthirwlfn" | ||

| }, | ||

| "source": [ | ||

| "# Self Driving Car using (End to End CNN/Dave-2)\n", | ||

| "\n", | ||

| "* Used convolutional neural networks (CNNs) to map the raw pixels from a front-facing camera to the steering commands for a self-driving car. This powerful end-to-end approach means that with minimum training data from humans, the system learns to steer, with or without lane markings, on both local roads and highways. The system can also operate in areas with unclear visual guidance such as parking lots or unpaved roads.\n", | ||

| "* The system is trained to automatically learn the internal representations of necessary processing steps, such as detecting useful road features, with only the human steering angle as the training signal. We do not need to explicitly train it to detect, for example, the outline of roads.\n", | ||

| "* End-to-end learning leads to better performance and smaller systems. Better performance results because the internal components self-optimize to maximize overall system performance, instead of optimizing human-selected intermediate criteria, e.g., lane detection. Such criteria understandably are selected for ease of human interpretation, which doesn’t automatically guarantee maximum system performance. Smaller networks are possible because the system learns to solve the problem with the minimal number of processing steps.\n", | ||

| "* Nvidia calls this system DAVE-2.\n", | ||

| "\n", | ||

| "Watch a real car running autonomously using this algorithm:\n", | ||

| "https://www.youtube.com/watch?v=NJU9ULQUwng" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Credits & Inspired By\n", | ||

| "(1) https://github.com/SullyChen/Autopilot-TensorFlow<br>\n", | ||

| "(2) Research paper: End to End Learning for Self-Driving Cars by Nvidia. [https://arxiv.org/pdf/1604.07316.pdf]<br>\n", | ||

| "(3) Nvidia blog: https://devblogs.nvidia.com/deep-learning-self-driving-cars/ <br>\n", | ||

| "(4) https://devblogs.nvidia.com/explaining-deep-learning-self-driving-car/" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Conclusions from the paper\n", | ||

| "* This demonstrated that CNNs are able to learn the entire task of lane and road following without manual decomposition into road or lane marking detection, semantic abstraction, path planning, and control. The system learns, for example, to detect the outline of a road without the need for explicit labels during training. \n", | ||

| "* A small amount of training data from less than a hundred hours of driving was sufficient to train the car to operate in diverse conditions, on highways, local and residential roads in sunny, cloudy, and rainy conditions. \n", | ||

| "* The CNN is able to learn meaningful road features from a very sparse training signal (steering alone).\n", | ||

| "* More work is needed to improve the robustness of the network, to find methods to verify the robustness, and to improve visualization of the network-internal processing steps." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Other Larger Datasets you can train on\n", | ||

| "(1) Udacity: https://medium.com/udacity/open-sourcing-223gb-of-mountain-view-driving-data-f6b5593fbfa5<br>\n", | ||

| "70 minutes of data ~ 223GB<br>\n", | ||

| "Format: Image, latitude, longitude, gear, brake, throttle, steering angles and speed<br>\n", | ||

| "(2) Udacity Dataset: https://github.com/udacity/self-driving-car/tree/master/datasets [Datasets ranging from 40 to 183 GB in different conditions]<br>\n", | ||

| "(3) Comma.ai Dataset [80 GB Uncompressed] https://github.com/commaai/research<br>\n", | ||

| "(4) Apollo Dataset with different environment data of road: http://data.apollo.auto/?locale=en-us&lang=en<br>\n", | ||

| "### Some other State of the Art Implementations\n", | ||

| "Implementations: https://github.com/udacity/self-driving-car<br>\n", | ||

| "Blog: https://medium.com/udacity/teaching-a-machine-to-steer-a-car-d73217f2492c<br><br>" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 3, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# Imports\n", | ||

| "from __future__ import division\n", | ||

| "import numpy as np\n", | ||

| "import matplotlib.pyplot as plt\n", | ||

| "import os\n", | ||

| "import random\n", | ||

| "\n", | ||

| "from scipy import pi\n", | ||

| "from itertools import islice\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 5, | ||

| "metadata": { | ||

| "colab": { | ||

| "autoexec": { | ||

| "startup": false, | ||

| "wait_interval": 0 | ||

| } | ||

| }, | ||

| "colab_type": "code", | ||

| "id": "vgcc6iQobKHi" | ||

| }, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "Completed processing data.txt\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "# read images and steering angles from driving_dataset folder\n", | ||

| "\n", | ||

| "DATA_FOLDER = './driving_dataset/' # change this to your folder\n", | ||

| "TRAIN_FILE = os.path.join(DATA_FOLDER, 'data.txt')\n", | ||

| "\n", | ||

| "\n", | ||

| "split = 0.8  # Train = 80%\n", | ||

| "X = []\n", | ||

| "y = []\n", | ||

| "with open(TRAIN_FILE) as fp:\n", | ||

| "    for line in fp:\n", | ||

| "        path, angle = line.strip().split()\n", | ||

| "        full_path = os.path.join(DATA_FOLDER, path)\n", | ||

| "        X.append(full_path)\n", | ||

| "\n", | ||

| "        # convert the angle from degrees to radians\n", | ||

| "        y.append(float(angle) * pi / 180)\n", | ||

| "\n", | ||

| "\n", | ||

| "y = np.array(y)\n", | ||

| "print(\"Completed processing data.txt\")\n", | ||

| "\n", | ||

| "split_index = int(len(y) * split)\n", | ||

| "\n", | ||

| "train_y = y[:split_index]\n", | ||

| "test_y = y[split_index:]\n", | ||

| "\n", | ||

| " " | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### PDF of steering angle in radians in train and test " | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 9, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "data": { | ||

| "image/png": "(base64-encoded PNG omitted: step histograms of train_y in green and test_y in red, 50 bins each)", | ||

| "text/plain": [ | ||

| "<Figure size 432x288 with 1 Axes>" | ||

| ] | ||

| }, | ||

| "metadata": { | ||

| "needs_background": "light" | ||

| }, | ||

| "output_type": "display_data" | ||

| } | ||

| ], | ||

| "source": [ | ||

| " \n", | ||

| "plt.hist(train_y, bins=50, density=True, color='green', histtype ='step');\n", | ||

| "plt.hist(test_y, bins=50, density=True, color='red', histtype ='step');\n", | ||

| "plt.show()" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Model 0: Baseline Model [Predicted Value = Mean Value]" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 10, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "Test_MSE(MEAN):0.191127\n", | ||

| "Test_MSE(ZERO):0.190891\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "#y_test_pred = mean(y_train_i) \n", | ||

| "train_mean_y = np.mean(train_y)\n", | ||

| "\n", | ||

| "# Predicted value as mean value\n", | ||

| "print('Test_MSE(MEAN):%f' % np.mean(np.square(test_y-train_mean_y)) )\n", | ||

| "\n", | ||

| "#Predicted value as zero\n", | ||

| "print('Test_MSE(ZERO):%f' % np.mean(np.square(test_y-0.0)) )" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "collapsed": true | ||

| }, | ||

| "source": [ | ||

| "##### Even a trivial model that always predicts the training mean or 0 gets a fairly low test Mean Squared Error (MSE) of about 0.19, so this is the baseline a trained model has to beat." | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "accelerator": "GPU", | ||

| "colab": { | ||

| "collapsed_sections": [], | ||

| "default_view": {}, | ||

| "name": "Self_driving_car.ipynb", | ||

| "provenance": [], | ||

| "version": "0.3.2", | ||

| "views": {} | ||

| }, | ||

| "kernelspec": { | ||

| "display_name": "Python 3", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.6.6" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 1 | ||

| } |
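The baseline cell in the notebook above can be reproduced without the dataset. The sketch below uses only the standard library and a handful of made-up steering angles (the list `angles_deg` and the helper names are hypothetical, not part of the commit); it follows the same steps as the notebook: convert degrees to radians, split 80/20 in file order, then score two constant predictors on the held-out part.

```python
import math


def split_in_order(values, train_fraction=0.8):
    """Split a sequence into a leading train part and a trailing test part."""
    split_index = int(len(values) * train_fraction)
    return values[:split_index], values[split_index:]


def mse_of_constant(targets, constant):
    """Mean squared error of a model that always predicts `constant`."""
    return sum((t - constant) ** 2 for t in targets) / len(targets)


# Hypothetical steering angles in degrees; the real ones come from data.txt.
angles_deg = [0.0, 1.5, -2.0, 10.0, -12.5, 3.0, 0.5, -1.0, 20.0, -18.0]
angles_rad = [math.radians(a) for a in angles_deg]

train_y, test_y = split_in_order(angles_rad)
train_mean = sum(train_y) / len(train_y)

mse_mean = mse_of_constant(test_y, train_mean)
mse_zero = mse_of_constant(test_y, 0.0)

print("Test_MSE(MEAN): %f" % mse_mean)
print("Test_MSE(ZERO): %f" % mse_zero)
```

The training mean minimizes squared error on the training angles only, so nothing guarantees it beats a constant 0 on the test split. That is consistent with the notebook output, where the zero predictor (0.190891) scores slightly better than the mean predictor (0.191127).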

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,60 @@ | ||

| import scipy.misc | ||

| import random | ||

|

|

||

| xs = [] | ||

| ys = [] | ||

|

|

||

| #points to the end of the last batch | ||

| train_batch_pointer = 0 | ||

| val_batch_pointer = 0 | ||

|

|

||

| #read data.txt | ||

| with open("driving_dataset/data.txt") as f: | ||

|     for line in f: | ||

|         # Save the location of each image in xs | ||

|         xs.append("driving_dataset/" + line.split()[0]) | ||

|         # the paper by Nvidia uses the inverse of the turning radius, | ||

|         # but steering wheel angle is proportional to the inverse of turning radius | ||

|         # so the steering wheel angle in radians is used as the output | ||

|

|

||

|         # Convert the steering angle we need to predict from degrees | ||

|         # to radians | ||

|         ys.append(float(line.split()[1]) * scipy.pi / 180) | ||

|

|

||

| #get number of images | ||

| num_images = len(xs) | ||

|

|

||

| # To change the train and test ratio do it here | ||

| # Here Train -> 80% and Test -> 20% | ||

| train_xs = xs[:int(len(xs) * 0.8)] | ||

| train_ys = ys[:int(len(xs) * 0.8)] | ||

|

|

||

| val_xs = xs[-int(len(xs) * 0.2):] | ||

| val_ys = ys[-int(len(xs) * 0.2):] | ||

|

|

||

| num_train_images = len(train_xs) | ||

| num_val_images = len(val_xs) | ||

|

|

||

| def LoadTrainBatch(batch_size): | ||

|     # The train batch pointer lets each call continue with the next images in order, | ||

|     # rather than sampling random images from the training data, because the model | ||

|     # is trained on batches of data rather than on the whole dataset at once | ||

|     global train_batch_pointer | ||

|     x_out = [] | ||

|     y_out = [] | ||

|     for i in range(0, batch_size): | ||

|         # Crop to the bottom 150 rows, resize to 66x200 and scale pixels to [0, 1] | ||

|         x_out.append(scipy.misc.imresize(scipy.misc.imread(train_xs[(train_batch_pointer + i) % num_train_images])[-150:], [66, 200]) / 255.0) | ||

|         y_out.append([train_ys[(train_batch_pointer + i) % num_train_images]]) | ||

|     train_batch_pointer += batch_size | ||

|     return x_out, y_out | ||

|

|

||

| def LoadValBatch(batch_size): | ||

|     global val_batch_pointer | ||

|     x_out = [] | ||

|     y_out = [] | ||

|     for i in range(0, batch_size): | ||

|         x_out.append(scipy.misc.imresize(scipy.misc.imread(val_xs[(val_batch_pointer + i) % num_val_images])[-150:], [66, 200]) / 255.0) | ||

|         y_out.append([val_ys[(val_batch_pointer + i) % num_val_images]]) | ||

|     val_batch_pointer += batch_size | ||

|     return x_out, y_out |
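The two loaders above share one idea: a pointer that advances by `batch_size` on every call and wraps around with a modulo, so batches walk through the data in order and restart at the beginning. The sketch below isolates that idea. The names `make_batch_loader` and `load_image` are hypothetical and not part of the commit; image decoding is left to a caller-supplied function because `scipy.misc.imread` and `scipy.misc.imresize` were removed from later SciPy releases, so the original calls only work with an old SciPy.

```python
def make_batch_loader(paths, labels, load_image):
    """Return a function that yields consecutive batches, wrapping at the end.

    `load_image` is any callable that turns a path into an image array;
    it stands in for the imread/imresize pipeline of the original file.
    """
    if len(paths) != len(labels):
        raise ValueError("paths and labels must have the same length")
    num_items = len(paths)
    pointer = 0  # index of the first item of the next batch

    def load_batch(batch_size):
        nonlocal pointer
        x_out, y_out = [], []
        for i in range(batch_size):
            index = (pointer + i) % num_items  # wrap around at the end
            x_out.append(load_image(paths[index]))
            y_out.append([labels[index]])  # one-element list per target, as in the original
        pointer += batch_size
        return x_out, y_out

    return load_batch


# Hypothetical data: the identity "loader" returns the path itself.
paths = ["0.jpg", "1.jpg", "2.jpg", "3.jpg", "4.jpg"]
labels = [0.0, 0.1, 0.2, 0.3, 0.4]
load_train_batch = make_batch_loader(paths, labels, load_image=lambda p: p)

first_x, first_y = load_train_batch(3)
second_x, second_y = load_train_batch(3)
print(first_x, second_x)
```

Using a closure instead of module-level globals means the train and validation loaders can be created from the same function without sharing state.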
