-

Notifications

You must be signed in to change notification settings - Fork 91

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Bibliographic data #284

Comments

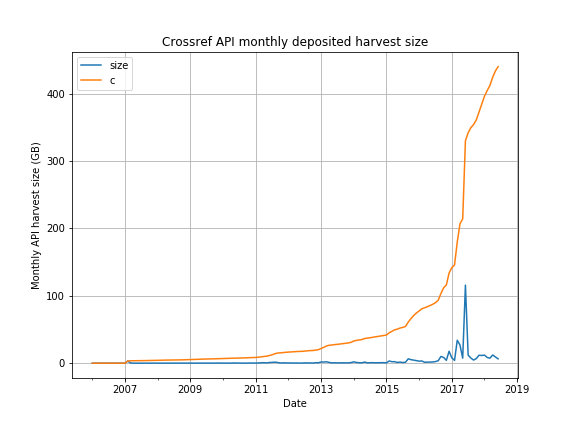

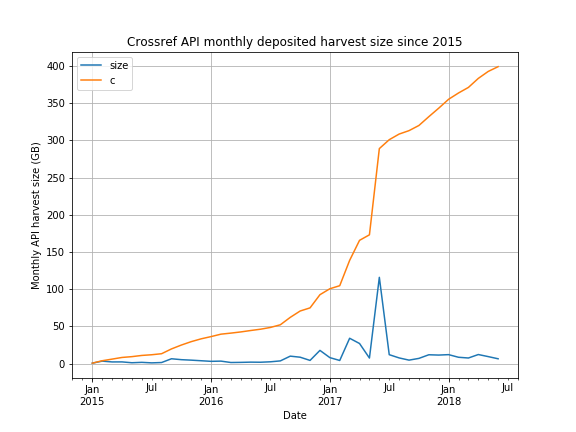

We have been working with the crossref API (for a search infrastructure project) since early 2015 and we found it to be excellent to work with concerning usability and documentation. We were interested in complete dumps as well, so we implemented a monthly harvest as part of our metadata gathering and processing infrastructure, which is based on luigi. The basic operation is as follows: Use a filter (we used deposit, but should switch to You end up with some directory full of JSON files. This is how the monthly harvest sizes developed over the years: Zoomed in a bit (from 2015): Now, seeing all these files as a single data set, there are quite some duplicates (redeposited files, through publisher updates or API changes). It would not be too complicated to run some additional data store, which could hold only the most recent record (by overwriting records via DOI). We opted for another approach, which allows us to not have to maintain an external data store and still have only the latest version of each DOI at hand: We just iterate over all records and keep the last. This currently means iterating over 400G of JSON, but that's actually not that bad. After a few sketches, the functionality went into a custom command line tool, span-crossref-snapshot. That tools is a bit ugly (implementation-wise), as it tries hard to be fast (parallel compression, advanced sort flags, custom fast filter tool with awk fallback, and so on). It is quite fast and despite the awkward bits quite reliable. In the end, we do have a file |

… from old datahub - refs datahubio/datahub-v2-pm#214 Also refs #29

|

I've created |

|

@miku this is really awesome info - thank-you. Are you hosting the data anywhere - can you share it? |

No, at the moment, we only use the crossref data for building an index for a couple of institutions. |

|

FWIW: In addition to sources already listed in the spreadsheet, a few more sources may be found here at https://archive.org/details/ia_biblio_metadata. |

|

@miku very useful - thank-you! |

|

There's an upload of this dataset at: https://archive.org/details/crossref-2022-11-02 (139M docs). |

Awesome page in progress at: https://datahub.io/collections/bibliographic-data

What we Cover

Datasets we want to cover:

Tasks

State of play

I compiled a detailed spreadsheet (as of 2016) of the state of play here:

https://docs.google.com/spreadsheets/d/1Sx-MKTkAhpaB3VHiouYDgMQcH81mKwQtaK2-BXYbKkE/edit#gid=0

Context

These are the kind of questions / user stories I had

User stories:

Aim of research

The text was updated successfully, but these errors were encountered: