QST: Question regarding documentation #988

Comments

Hi @borisRa, you can see an example of using the three suites in our use-case example: https://docs.deepchecks.com/en/stable/examples/use-cases/phishing_urls.html

When to use each built-in suite:

Thanks!

So, from the explanation above, model_evaluation() should use the model, and train_test_validation() just validates the split distributions, right? Thanks!

You are right: the checks in the train_test_validation() suite do not require a model. However, if a model is passed, it will be used to calculate feature importance, which prioritizes the features shown in the check displays.
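A minimal sketch of the two call styles described in the reply, assuming deepchecks exposes the `train_test_validation` factory in `deepchecks.tabular.suites` and that `Suite.run` accepts the `train_dataset`, `test_dataset` and `model` keywords (as in 0.5-era releases). The helper `run_split_checks` and its `suite_factory` parameter are hypothetical; the parameter exists only so the function can be exercised without deepchecks installed.

```python
def run_split_checks(train_ds, test_ds, model=None, suite_factory=None):
    """Run the train-test validation suite on two deepchecks Datasets.

    Hypothetical helper: `suite_factory` defaults to deepchecks'
    train_test_validation and is a parameter only for testing.
    """
    if suite_factory is None:
        # Assumption: this import path matches your installed deepchecks version.
        from deepchecks.tabular.suites import train_test_validation
        suite_factory = train_test_validation

    run_kwargs = {"train_dataset": train_ds, "test_dataset": test_ds}
    if model is not None:
        # Optional: per the maintainer's reply, a passed model is used to compute
        # feature importance, which orders the features in the check displays.
        run_kwargs["model"] = model
    return suite_factory().run(**run_kwargs)
```

Calling `run_split_checks(train_ds, test_ds)` compares the two splits on their own, while `run_split_checks(train_ds, test_ds, model=clf)` (for some fitted model `clf`) also lets the displays be ordered by feature importance.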

Thanks! My code:

and the error is:

I used this page as an example: https://docs.deepchecks.com/en/stable/examples/guides/save_suite_result_as_html.html

How can I fix this? Thanks!
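For reference, a minimal sketch of the run-then-save pattern from the linked guide, assuming a deepchecks version whose suite result provides `save_as_html(file)` and whose `Suite.run` takes `train_dataset`, `test_dataset` and `model`. The helper `run_and_save` and its `html_path` parameter are hypothetical names, not part of deepchecks.

```python
def run_and_save(suite, train_ds, test_ds=None, model=None, html_path="suite_report.html"):
    """Run a deepchecks suite and write the result to an HTML file.

    `suite` is a suite instance, e.g. train_test_validation(); the datasets are
    deepchecks Dataset objects. Hypothetical helper, not part of deepchecks.
    """
    # Unused inputs stay None; a single-dataset suite only needs train_ds.
    result = suite.run(train_dataset=train_ds, test_dataset=test_ds, model=model)
    # Assumption: save_as_html accepts a file name, as shown in the guide.
    result.save_as_html(html_path)
    return result
```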

@borisRa You are probably on an older version of …

I'm on version 0.5.0 (the latest one on PyPI).

@borisRa Sorry, I hadn't looked at your code properly!

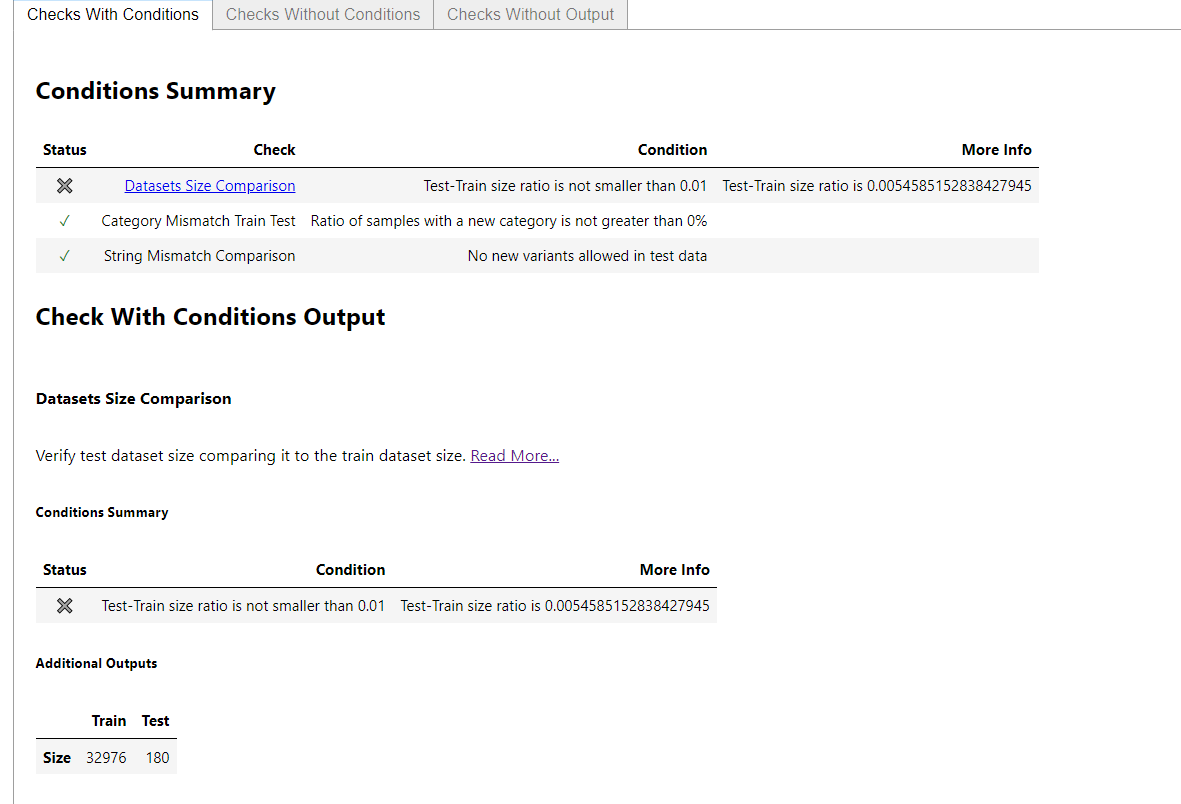

Thanks, this worked! Now I'm getting an HTML report without any plots comparing the distributions. Why?

@borisRa Do you see that there are tabs? Please have a look at all 3 of them.

Thanks!

Research

I have searched the [deepchecks] tag on StackOverflow for similar questions.

I have asked my usage related question on StackOverflow.

Link to question on StackOverflow

https://docs.deepchecks.com/en/stable/user-guide/when_should_you_use.html#when-should-you-use-new-data

Question about deepchecks

Hi,

Based on your documentation page (https://docs.deepchecks.com/en/stable/user-guide/when_should_you_use.html#when-should-you-use-new-data), there are 4 phases:

1) New Data: Single Dataset Validation -> use single_dataset_integrity()
2) After Splitting the Data: Train-Test Validation -> use train_test_validation()
3) After Training a Model: Analysis & Validation -> use model_evaluation()
4) General Overview: Full Suite -> use full_suite()
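A sketch of how the four phases might map onto code, assuming the suite factories live in `deepchecks.tabular.suites` under the names quoted above and that each returns a suite whose `run` takes `train_dataset`, `test_dataset` and `model`. The phase keys, `PHASES`, `REQUIRED`, `missing_inputs` and `run_phase` are all hypothetical, and the per-phase input requirements are this sketch's own policy rather than deepchecks rules.

```python
from importlib import import_module

# Hypothetical phase keys; suite names are the ones quoted in the question.
# Later deepchecks releases may rename some suites, so check
# deepchecks.tabular.suites in your installed version.
PHASES = {
    "new_data": "single_dataset_integrity",
    "after_split": "train_test_validation",
    "after_training": "model_evaluation",
    "overview": "full_suite",
}

# Inputs this sketch insists on per phase (its own policy, not a deepchecks rule).
REQUIRED = {
    "new_data": (),
    "after_split": ("test_ds",),
    "after_training": ("test_ds", "model"),
    "overview": ("test_ds", "model"),
}


def missing_inputs(phase, test_ds=None, model=None):
    """Return the names of inputs the chosen phase needs but did not get."""
    if phase not in PHASES:
        raise ValueError(f"unknown phase {phase!r}; expected one of {sorted(PHASES)}")
    given = {"test_ds": test_ds, "model": model}
    return [name for name in REQUIRED[phase] if given[name] is None]


def run_phase(phase, train_ds, test_ds=None, model=None):
    """Run the built-in suite for `phase` on deepchecks Dataset objects."""
    missing = missing_inputs(phase, test_ds, model)
    if missing:
        raise ValueError(f"phase {phase!r} also needs: {', '.join(missing)}")
    # Imported lazily so the mapping above can be used without deepchecks.
    suites = import_module("deepchecks.tabular.suites")
    suite = getattr(suites, PHASES[phase])()
    return suite.run(train_dataset=train_ds, test_dataset=test_ds, model=model)
```

For example, `run_phase("new_data", train_ds)` would validate a single Dataset, while `run_phase("after_training", train_ds, test_ds, model=clf)` would evaluate some fitted model `clf` on both splits.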

My question: where can I see examples of using these 4 options?

Thanks,

Boris
