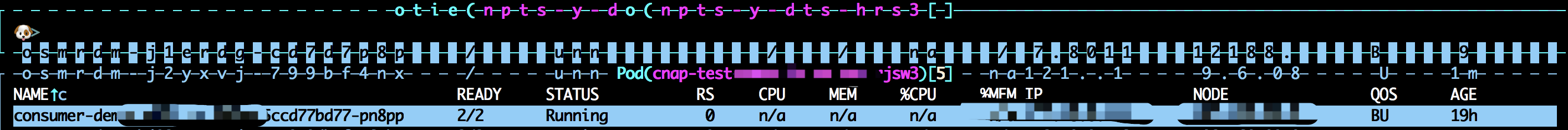

UI display messed up #448

Comments


@HawickMason Thank you for the report! Looks like something is indeed toast with the display, but it is hard to tell from the pic. This is a pretty common scenario, so I am guessing it is an issue specific to your pod or cluster. Could you see if there is anything in the K9s logs? Also, does this happen on any pod or only on specific ones? Thank you for the details!


Thanks for your time~ Updates:

Does that ring a bell? Looking forward to your reply~ Thanks again for what you've done~


@HawickMason Thank you for posting back and for this great report! I am not seeing anything in this log dump. It looks like the metrics server is not running on your cluster, but that should not trigger this behavior. Could you try running k9s with the log level set to debug?


Updates: Related issue: And when I set LC_CTYPE to anything other than zh_CN.UTF-8, the UI looks clean and neat and everything works fine~ So I guess I can close this issue now, and I hope anyone else who runs into the same problem can fix it this way.
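For anyone who wants to try the workaround without changing their shell profile, here is a minimal sketch that starts k9s with `LC_CTYPE` overridden for that one process. The helper names `env_with_ctype` and `launch_k9s` are made up for illustration, and `en_US.UTF-8` is only an assumed replacement value; the report above says only that anything other than zh_CN.UTF-8 worked.

```python
import os
import subprocess


def env_with_ctype(base_env, ctype="en_US.UTF-8"):
    """Return a copy of base_env with LC_CTYPE replaced.

    'en_US.UTF-8' is an assumed default, not a value taken from the thread.
    Note: if LC_ALL is set, it takes precedence over LC_CTYPE, so this
    override will have no effect until LC_ALL is unset.
    """
    env = dict(base_env)  # copy, so the caller's mapping is left untouched
    env["LC_CTYPE"] = ctype
    return env


def launch_k9s(ctype="en_US.UTF-8"):
    """Run k9s (assumed to be on PATH) with the overridden locale."""
    env = env_with_ctype(os.environ, ctype)
    return subprocess.run(["k9s"], env=env).returncode
```

Calling `launch_k9s()` should behave like running `LC_CTYPE=en_US.UTF-8 k9s` in a shell. If the display is still garbled, it may be worth checking whether `LC_ALL` is set in the environment.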


@HawickMason Thank you for researching this and posting the details here! Good to know...

Describe the bug

The k9s UI is all messed up, with a stray 'space' on the border of the 'Resource View', as shown in the picture above.

To Reproduce

Steps to reproduce the behavior:

Just start k9s, go into a pod to view its logs and return to the main page, or press ':' to open command mode. Either one results in the picture above.

Expected behavior

A neat & clear UI display as shown in the doc and the demo videos

Screenshots

See picture above

Versions (please complete the following information):

Additional context

Same thing happened in the previous release of k9s on my computer.

FYI: My terminal emulator is iTerm2.
