
Run GNNExplainer #24


Hi, thank you for your issue. First, the Tap package can be installed by the command:

Second, if you want to explain graph-level predictions, you can simply set the explain_graph attribute to True.
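To illustrate what switching to graph-level explanations changes, here is a minimal, framework-free sketch. The helper explained_score and the mean-pooling readout are hypothetical assumptions for illustration, not this repository's code. With explain_graph set to True, the quantity being explained is a single pooled score for the whole graph, so the masks are optimized against that score rather than against one node's output.

```python
from typing import Optional, Sequence


def explained_score(node_scores: Sequence[float],
                    explain_graph: bool,
                    node_idx: Optional[int] = None) -> float:
    """Return the model output an explainer would try to preserve.

    Hypothetical helper: assumes a mean-pooling readout for graph-level tasks.
    """
    if explain_graph:
        # Graph-level task: pool all node scores into one graph score.
        return sum(node_scores) / len(node_scores)
    if node_idx is None:
        raise ValueError("node_idx is required for node-level explanations")
    # Node-level task: only the target node's output is explained.
    return node_scores[node_idx]


scores = [0.2, 0.8, 0.5]
print(explained_score(scores, explain_graph=True))               # pooled graph score
print(explained_score(scores, explain_graph=False, node_idx=1))  # → 0.8
```

Real GNNs usually pool learned node embeddings rather than scalar scores, and the readout may be sum or max instead of mean; the sketch only shows which output the explanation targets.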


One more thing: by default, a sigmoid function is applied to the edge_masks values in the propagation method in the
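A minimal sketch of what a default sigmoid on the edge mask during propagation typically means: each edge's raw mask value is passed through a sigmoid, and the result scales that edge's message before aggregation. This is a plain-Python illustration with a hypothetical propagate function, scalar node features, and sum aggregation. It is not the repository's propagation code. One practical consequence: if the stored mask holds raw (pre-sigmoid) values, apply the sigmoid once when reading edge importances, and do not apply it again to values that were already squashed.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def propagate(x: Sequence[float],
              edge_index: Sequence[Tuple[int, int]],
              edge_mask: Sequence[float]) -> List[float]:
    """Sum-aggregate scalar node features along masked edges (hypothetical)."""
    out = [0.0] * len(x)
    for (src, dst), raw in zip(edge_index, edge_mask):
        weight = sigmoid(raw)        # squash the raw mask value into (0, 1)
        out[dst] += weight * x[src]  # message from src, scaled by its edge weight
    return out


x = [1.0, 2.0, 3.0]
edges = [(0, 2), (1, 2)]
# A raw value of 0.0 gives weight 0.5; a large negative value nearly drops the edge.
print(propagate(x, edges, [0.0, -20.0]))
```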


Many thanks for your help! I have everything installed now! Nevertheless, I am encountering a few issues when running the code:

Any hints on how to get these working? I'd like to explore the examples before trying my own data, so I may follow up on the explanation part and the edge masks once I manage to get the code running 😃. Thanks again!


Hi, I have the following suggestions for the issues.


Many thanks again! Following that issue, the second point works well. Now, when I try to run:

I tried reinstalling everything from scratch to check whether that was the cause, but the problem persisted. Do you have any hints about what may be happening?


Small update: using

I think I will close the issue. I will be exploring the code over the next couple of days and might reopen it with more questions. 😃 Thanks!


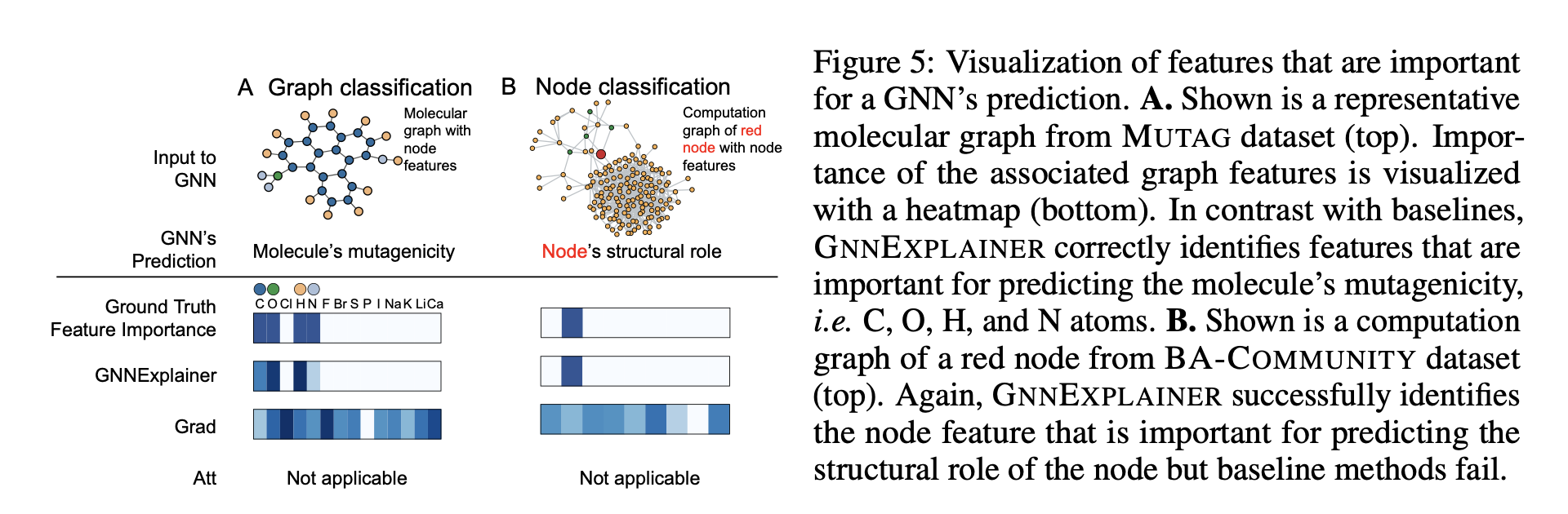

I am exploring the code on the TOX21 dataset and would like to understand the outputs (both the edge masks and the feature masks) a bit better. For the edge masks, is the masks variable in sample_explain the one I should be looking at? For the feature masks, I don't know where to find them; would you mind pointing me to them? Is there a single feature mask for the whole cohort, or one per graph? Ultimately, I am looking to get something like Figure 5 in the GNNExplainer paper: https://arxiv.org/pdf/1903.03894.pdf
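On the "one mask per cohort or per graph" question: in the GNNExplainer paper's formulation, the feature mask is a single vector F of length d (the node feature dimension) applied elementwise to every node's features in the explained graph (x_j ⊙ F), and it is learned for each explained prediction. In that formulation, a graph-classification setup therefore gets one feature mask per explained graph, shared by all of its nodes. Whether this repository stores it the same way is an assumption. The sketch below (a hypothetical apply_feature_mask helper on plain Python lists instead of tensors) only shows the masking step.

```python
from typing import List, Sequence


def apply_feature_mask(x: Sequence[Sequence[float]],
                       feature_mask: Sequence[float]) -> List[List[float]]:
    """Multiply every node's feature vector elementwise by one shared mask."""
    d = len(feature_mask)
    if any(len(row) != d for row in x):
        raise ValueError("feature_mask length must match the node feature dimension")
    return [[v * m for v, m in zip(row, feature_mask)] for row in x]


# Three nodes with d = 4 features; a single mask of length 4 is shared by all nodes.
x = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0],
     [2.0, 2.0, 0.0, 1.0]]
mask = [1.0, 0.0, 0.5, 1.0]
masked = apply_feature_mask(x, mask)
print(masked)
```

Reading the learned mask itself (after a sigmoid, if it is stored as raw values) gives one importance score per feature dimension for that explanation.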


For the GNNExplainer class, you can extract the node mask and edge mask from the class attribute:

For the figure, GNNExplainer provides the visualization shown in README.md. It's not the same as the figure in the GNNExplainer paper, but I think it can help you with the figure to some extent.
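Once an edge mask has been extracted, one common way to turn it into an "important subgraph" for a figure is to keep the k edges with the highest mask values, together with the nodes they touch. The sketch below assumes the mask is a flat list of per-edge scores aligned with a list of (source, target) pairs; the helper name top_k_subgraph and the choice of k are illustrative, not part of this repository. If the mask is a PyTorch tensor, .tolist() converts it to a list first. For undirected graphs stored as two directed edges, both directions may appear among the kept edges.

```python
from typing import List, Sequence, Set, Tuple


def top_k_subgraph(edge_index: Sequence[Tuple[int, int]],
                   edge_mask: Sequence[float],
                   k: int) -> Tuple[List[Tuple[int, int]], Set[int]]:
    """Keep the k edges with the highest mask values and the nodes they touch."""
    if len(edge_index) != len(edge_mask):
        raise ValueError("edge_index and edge_mask must have the same length")
    # Rank edge positions by importance, highest first.
    ranked = sorted(range(len(edge_mask)), key=lambda i: edge_mask[i], reverse=True)
    kept = [edge_index[i] for i in ranked[:k]]
    nodes = {n for edge in kept for n in edge}
    return kept, nodes


edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
mask = [0.9, 0.8, 0.1, 0.3]
sub_edges, sub_nodes = top_k_subgraph(edges, mask, k=2)
print(sub_edges, sub_nodes)
```

A fixed threshold on the mask values is an equally reasonable alternative to top-k; top-k just guarantees a subgraph of predictable size for plotting.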

I am trying to run the GNNExplainer code. Although I used install.batch to create the conda environment, I am having issues with the packages, namely with tap (in the line "from tap import Tap"). I tried installing it myself, and I can import tap, but it doesn't seem to contain anything called Tap. Could you please guide me here?

Also, while going through the example, I was wondering which settings I should change to run it on graph-level predictions. Is there an example for that? And is it possible to get the feature importances and the important subgraphs? I didn't see any outputs from the explain functions.

Thanks in advance!
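Because the symptom is that import tap succeeds while Tap is missing, a quick first check is to ask Python which tap module it is actually loading. The Tap class imported by "from tap import Tap" is, to my knowledge, the one provided by the typed-argument-parser project, while at least one unrelated distribution also installs a top-level module named tap, so a name clash is a plausible cause. The sketch below uses only the standard library; the helper name check_tap is hypothetical.

```python
import importlib
import importlib.util


def check_tap() -> str:
    """Report whether the installed `tap` module provides the `Tap` class."""
    if importlib.util.find_spec("tap") is None:
        return "no module named 'tap' is installed"
    module = importlib.import_module("tap")
    location = getattr(module, "__file__", None)
    if hasattr(module, "Tap"):
        return f"ok: Tap found in {location}"
    # A module named `tap` exists, but it is not the one that defines `Tap`.
    return f"'tap' found at {location}, but it has no attribute 'Tap'"


print(check_tap())
```

If the reported path points at a different package, uninstalling it before installing the intended one avoids the two packages shadowing each other in the same environment.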
