New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Duplicati does not close FTP connection correctly to backup backend #3230

Comments

|

From a quick look at the source code, it could well be that duplicati does not close connections properly. Is there anyway you can monitor this on your ftp server, i.e., log files, to see if duplicati issues any disconnect commands? Do your ftp servers have a different max number of concurrent logins (apparently one has a limit of 10 concurrent logins)? You could test this by simply logging in multiple times simultaneously. |

|

@verhoek , thx for checking and suggesting the tests. 421 10 users (the maximum) are already logged in, sorry For me the only conclusion could be, that Duplicati does not always close correctly the FTP session P.S. Duplicati running on one single PC is the only appliaction accessing that NAS via |

|

I spent some time on this issue and as far as I can see, there are some issues come from the .net ftp library that is used. I noticed similar issues you mentioned when passive mode was turned off: the ftp library cannot perform the list operation properly, an exception is thrown, and the connection is never closed. I don't see a way at the moment to close the connection, since the library wraps it and I don't have direct access to it. Duplicati is retrying, the same thing happens until max. number of users is hit. Do you use passive mode, is there any firewall between your duplicati client and your NAS? |

|

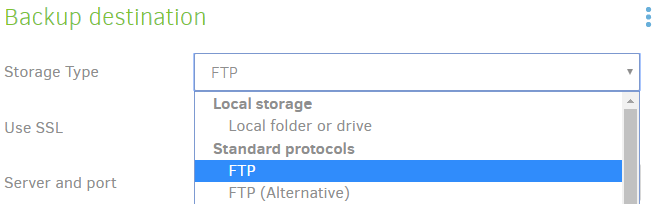

Hi, sorry for the delay. I'm using "FTP (Alternative)" not sure if this is the passive one. There is no firewall involved. |

|

After ignoring that problem for a while, I did have a need to dive deeper into it, as it made my backups failing all the time. I found that the max users error on ftp happens mainly during compress actions of duplicati on local 1gbit networks. In that situation duplicate is downloading many old backup sets in a short time and with a high frequency. As the issue is not happening (that often) from a system doing backup to the same NAS from remote via a 8-10mbit line, where downloads during a compress take significantly longer, I see a relation to the number of up/downloads taken within a certain time. As a conclusion ( which I could not prove as the NAS does not offer access to its backend) I’m strongly sure that during long(er) running downloads of single files, the NAS will timeout open FTP sessions, which were not closed before and by that freeing up sessions, which would allow next operation without failing due to unavailable sessions. To validate that, I did throttle the up/download rate in duplicati for my local (normal 1gbit) backup to an around 8mbit connecting (800kb/sec). After re-running the backup with that setting, the backup and compress did work without any issue. Clearly it was taking much longer, but it does work now. So, the network speed, the number of duplicati ftp actions per time, the timeout of ftp sessions on the NAS, the number of max. open sessions on the NAS and finally the size of the duplicati backup set and the implied time to up/download them in all their combination cause the error to happen or not. I see the throttling as a workaround, while the two root causes are in duplicate and the NAS. Duplicati should close ftp sessions correctly and the NAS limit of 10 session contributes the rest to the issue. Best way to resolve to problem is securely close all sessions from duplicate site. All other aspects (max. number of sessions on the NAS, if 10 is enough or not, different story, timeout duration of ftp sessions) are valid and any change, would only shift the problem. Any chance this could be addressed in duplicati? |

"FTP" looks better on this account, compared to "FTP (Alternative)". I've tested with FileZilla Server set up for only 2 simultaneous connections, and it works fine with a small test backup (including occasional compacts). One concern I have (skip down to talk of developer expertise need) is that 1 doesn't work because Duplicati seems to do a second upload request before the first one is done, even before parallel uploads were added.

is probably the reason you see the odd behavior of more connections to the server than you might expect. This is even more fun to watch at the packet level, which clearly shows what's on what connection. Looking at the FileZilla Server logs is an easier way to see the action. I wondered about all the connecting initially... What does EnableThreadSafeDataConnections do? explains why this opened additional connections. There doesn't seem to be much direction on the proper way to close them, and Duplicati development would also need to see whether Duplicati's use of FluentFTP falls within the current guidelines to set that value to false. Development of FluentFTP has changed hands, and the current developer has done fixes then changed the default of that from true to false, so possibly it's safe for Duplicati unless somehow different threads access the same connection when multiple clients are used. Someone who knows the area should look, but there's quite a backlog of issues, so I don't know when that will happen. This issue is rarely seen (I'm not sure why). Multiple file uploads keep re-opening connection #40 seems to be the FluentFTP issue and it links to the fix. Short-term solution might be to see if regular FTP works better than Alternative. Another quick DIY if you're willing to change code is to change that line to |

|

Thx for that detailed reply. Actually I'm not an expert to experiment myself with all these switches. For me, my workaround (throttling the connections) does serve the purpose, but I hope my observations and explainations do help the developers some day to dive deeper into the topic and resolve it in general. |

I'm not certain any switches are needed, though they might be. You could just try changing to FTP:

makes me a little nervous until the below gets fixed. Please update that issue if your backup gets it: Upload throttle corrupts backup, especially OneDrive. Analyzed, with code proposed. #3787 |

|

went into 2.0.4.32 Canary as part of a general FTP (Alternative) fix, which means 2.0.5.1 Beta and 2.0.6.x Beta should be OK now. reduced to 1 let me backup with a connection limit of 2. 1 was failing because it seems to keep the pre-backup I haven't tried forcing the sort of @harald14 If you haven't already gone back to a more normal setup without a throttling workaround, can you try newer release? |

Forced this by increasing configured remote volume size, which makes the existing volumes look too empty, so does a compact. |

|

No response. Closing as presumed fixed. |

Environment info

Description

It is not clear to me right now if this will be a duplicati bug, a WD MyCloud NAS bug or a duplicati feature request.

Here is my issue:

My Backup source is about 250GB, ~70000 files, Retention 1 year, backups 4 times a week, around 160 version kept currently, backup size around the same as the source, no significant changes of the source over time, delta per day some MB or few 100MB new files

I have two WD MyCloud NAS systems (slightly different firmware, but I cannot bring them to the same version), which I use to store backups of that same computer. I connect to both of them with FTP, same source, same settings, everything the same, just two targets as additional security.

One of them works perfectly fine with duplicati.

The second did the same until I've got recently issues with the duplicati backup database of that target. I did try DB repair, which did fail, finally I tried to delete the DB and DB recreate. The recreation does move on well for a while (I see CPU load, disk activity and networkload),

After the a while the CPU load, disk and network goes down to 0% activity and after some further time the DB recreation does fail with the following error or multiple of them.

I see the following possible reasons for that:

Steps to reproduce

Run a DB rebuild (FTP) of a large, backup with a lot of files and many versions

Feature Request

Add a duplicati parameter, which allows to control the max. number of concurrently opened FTP sessions.

The text was updated successfully, but these errors were encountered: