nodejs-ClusterClientNoResponseError-client-no-response.md #466

Comments

|

Did the app worker crash? Check the error at the very top of common-error. |

|

It looks like the app worker has not sent a heartbeat for a long time. First check whether common.log contains any other errors, or just attach common.log. |

|

I have the same problem. |

|

// common-error.log // egg-web.log // egg-agent.log |

|

@popomore @gxcsoccer Could this be a problem with the cluster watcher? |

|

It looks like it. |

|

@atian25 In my local development environment on Ubuntu 16.10 I get the same ClusterClientNoResponseError as above, and it sometimes makes Node.js respond slowly. |

|

@itsky365 Please upload the logs, ideally together with the code. |

|

@itsky365 Try deleting your local node_modules and reinstalling, then see whether the problem persists. |

|

// index.js // app/controller I deleted the node_modules directory. After npm install I will run it and check the logs again. Unless the captcha generated with ccap is causing a conflict? |

|

If the problem persists, run ps aux | grep node and paste the output. |

|

The output of $ ps aux | grep node is below. Two Node projects are running: work, developed with eggjs, and code, developed with vue2 and webpack. |

|

@itsky365 Are you still seeing the problem? |

|

@gxcsoccer I just redeployed on alpha with a fresh node_modules. I need to watch common-error.log for a day before I know the result. |

|

@itsky365 I think I roughly know the cause. I will publish an updated version today. |

Results from running on alpha: |

|

@itsky365 The new version will be released tomorrow at the latest. |

|

@itsky365 Please reinstall and redeploy (delete |

|

@itsky365 How is it going? |

The error is still reported, just less often than with egg@rc-2 and cluster-client@1.5.0. Runtime environment: egg@rc-3, cluster-client@1.5.1 |

|

@atian25 I took a look at |

|

This part needs @gxcsoccer |

|

@gxcsoccer @atian25 After upgrading to cluster-client@1.5.2 I still get the ClusterClientNoResponseError. Can this be fixed? |

|

@itsky365 Could you post the latest logs? |

|

@gxcsoccer Let me look into it. |

|

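Is the agent running a time-consuming job? My heartbeat is sent every 20s, and the check is whether the connection has had no traffic for 60s (if so, it reports an error and closes the connection). Normal idle time cannot exceed 80s, yet the log shows 3562922ms (nearly an hour):

2018-02-10 08:41:34,018 ERROR 52798 nodejs.ClusterClientNoResponseError: client no response in 3562922ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.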

|

|

My laptop did go to sleep 😓 |

|

With sleep that is inevitable... your network adapter went down but the program kept running. |

|

@gxcsoccer For this kind of abnormal case, just detect the local environment and skip printing the error log. |

|

Our production servers have this problem too, and they cannot work at all. |

|

+1 It clutters log searches, but it does not seem to affect Egg at runtime. |

```
2018-04-26 17:58:04,449 ERROR 55124 nodejs.ClusterClientNoResponseError: client no response in 634937ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side.
    at Timeout.Leader._heartbeatTimer.setInterval [as _onTimeout] (work/node_modules/_cluster-client@2.1.0@cluster-client/lib/leader.js:74:23)
    at ontimeout (timers.js:475:11)
    at tryOnTimeout (timers.js:310:5)
    at Timer.listOnTimeout (timers.js:270:5)
name: 'ClusterClientNoResponseError'
pid: 55124
```

Actually, this is because the egg-logrotator source has a liveness check:

```js
this._heartbeatTimer = setInterval(() => {
  const now = Date.now();
  for (const conn of this._connections.values()) {
    const dur = now - conn.lastActiveTime;
    if (dur > maxIdleTime) {
      const err = new Error(`client no response in ${dur}ms exceeding maxIdleTime ${maxIdleTime}ms, maybe the connection is close on other side.`);
      err.name = 'ClusterClientNoResponseError';
      conn.close(err);
    }
  }
}, heartbeatInterval);
```

It checks how long each connection has been idle.

To test log rotation, I can hardly be expected to wait a whole hour, can I? That would be silly. |
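To make the idle check quoted above easier to reason about, here is a minimal sketch that pulls the same comparison into a standalone function. The stack trace above locates the original check in cluster-client's lib/leader.js. `findIdleConnections`, the `id` field and the sample timestamps are made up for illustration; only the `now - conn.lastActiveTime > maxIdleTime` comparison comes from the quoted snippet. Passing `now` in as a parameter shows why a jump in wall-clock time (a sleeping laptop, or a system clock changed during testing) can plausibly produce durations far above 60000ms even though heartbeats are normally sent every 20s.

```javascript
// Hypothetical helper that mirrors the idle check in the snippet quoted above.
// It is not part of cluster-client; `now` is a parameter so the check can be
// exercised without waiting for real time to pass.
function findIdleConnections(connections, now, maxIdleTime) {
  const idle = [];
  for (const conn of connections.values()) {
    const dur = now - conn.lastActiveTime;
    if (dur > maxIdleTime) {
      idle.push({ id: conn.id, dur });
    }
  }
  return idle;
}

const maxIdleTime = 60000; // value shown in the error messages in this thread
const t0 = 1000000; // arbitrary starting timestamp in ms
const connections = new Map([
  [ 'worker-1', { id: 'worker-1', lastActiveTime: t0 } ],
]);

// One heartbeat interval (20s) later the connection still counts as alive.
console.log(findIdleConnections(connections, t0 + 20000, maxIdleTime));

// If the clock moves ahead with no traffic recorded in between,
// the next check sees a very large idle duration.
console.log(findIdleConnections(connections, t0 + 3562922, maxIdleTime));
```

The second call reports `worker-1` with `dur: 3562922`, the same figure as in the 2018-02-10 log line earlier in the thread.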

|

I still have this problem. After reading all these replies I still do not know how to fix it! @gxcsoccer @atian25 @popomore |

|

@gxcsoccer I can reproduce it every time. "egg": "^2.7.1" |

|

@itsky365 Hey, did this ever get resolved in the end? |

|

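Before reporting the error, you could call the service once more to check whether it is alive. Report the error only if it is still not alive and has timed out, rather than judging by the timeout alone. |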
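The suggestion above could be sketched roughly as follows. This is only an illustration of the idea, not how cluster-client works: `closeIfReallyDead`, `probe` and `fakeConn` are hypothetical names, and `probe` is assumed to be an async function that pings the client and resolves to `true` when it answers.

```javascript
// Hypothetical sketch: probe an idle connection once before closing it.
// `probe` is an assumed hook; cluster-client is not known to expose one.
async function closeIfReallyDead(conn, { now, maxIdleTime, probe }) {
  const dur = now - conn.lastActiveTime;
  if (dur <= maxIdleTime) return 'active';

  let alive = false;
  try {
    alive = await probe(conn);
  } catch (_) {
    alive = false; // a failing probe counts as "no response"
  }

  if (alive) {
    conn.lastActiveTime = now; // the client answered, so reset the idle clock
    return 'revived';
  }

  const err = new Error(`client no response in ${dur}ms exceeding maxIdleTime ${maxIdleTime}ms`);
  err.name = 'ClusterClientNoResponseError';
  conn.close(err);
  return 'closed';
}

// Fake connection object used only for this demonstration.
function fakeConn(lastActiveTime) {
  return {
    lastActiveTime,
    closedWith: null,
    close(err) { this.closedWith = err; },
  };
}

const options = { now: 100000, maxIdleTime: 60000 };
closeIfReallyDead(fakeConn(0), { ...options, probe: async () => true })
  .then(state => console.log(state)); // revived
closeIfReallyDead(fakeConn(0), { ...options, probe: async () => false })
  .then(state => console.log(state)); // closed
```

A real probe would also need its own timeout, otherwise a hung client would stall the check itself.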

|

Is this still not fixed? |

|

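Check your code. Some piece of logic is probably exhausting the CPU. Look for CPU-heavy operations, or blocking calls such as the synchronous (sync) APIs. |

A while back I spent ages tracking this down: a colleague had accidentally written an infinite loop in the template engine. Thanks for the answer. |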
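One plausible reading of the advice above is that timers and socket I/O share the process's single event loop, so anything that hogs the thread also holds up whatever timer or I/O callback would have kept the connection active. The sketch below only demonstrates that general Node.js behaviour. `measureTimerDelay` is a made-up helper, and the busy-wait stands in for a sync call or an accidental `while(true)`-style loop.

```javascript
// Measures how late a timer fires when synchronous work hogs the event loop.
function measureTimerDelay(timerMs, blockMs) {
  return new Promise(resolve => {
    const start = Date.now();
    setTimeout(() => resolve(Date.now() - start), timerMs);
    // Busy-wait: nothing else, including the timer above, can run meanwhile.
    while (Date.now() - start < blockMs) { /* spin */ }
  });
}

measureTimerDelay(10, 200).then(elapsed => {
  // The timer asked for 10ms but cannot fire until the loop releases the thread.
  console.log(`timer fired after ${elapsed}ms`);
});
```

With a loop that never ends, the timer would never fire at all, which fits the symptom of a peer that appears to have gone silent.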

|

@fhj857676433 Could you describe what kind of infinite loop it was? |

|

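I have 4 egg applications deployed on my server, and after running for a while they all report the error together: |

It was front-end code: logic similar to while(true), with the loop condition written incorrectly. |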

|

You can check your own code for anything that runs for a long time. |

|

@fhj857676433 My scenario is rather odd: the same code is deployed on three servers, and only one of them reports the error while the other two are fine. |

|

The code has a scheduled task that polls a Java API. If something goes wrong there, could it throw this error?

```js
const Subscription = require('egg').Subscription;
const apollo = require('../../lib/apollo');
const path = require('path');

class Update extends Subscription {
  static get schedule() {
    return {
      interval: process.env.APOLLO_PERIOD ?
        process.env.APOLLO_PERIOD + 's' : '30s',
      type: 'worker',
    };
  }

  // subscribe is the function that actually runs when the scheduled task fires
  async subscribe() {
    const apolloConfig = require(path.join(this.app.config.baseDir, 'config/apollo.js'));
    const result = await apollo.remoteConfigServiceSikpCache(apolloConfig);
    if (result && result.status === 304) {
      this.app.coreLogger.info('apollo has no updates');
    } else {
      apollo.createEnvFile(result);
      apollo.setEnv();
      this.app.coreLogger.info(`apollo update finished ${new Date()}`);
    }
  }
}

module.exports = Update;
```

|

That is possible. Add some timeout handling. |
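A minimal sketch of what such timeout handling might look like, using `Promise.race`. `withTimeout`, the `TimeoutError` name and the durations are illustrative assumptions, not part of egg or of the `apollo` helper shown above. Note that this only stops the caller from waiting; it does not cancel the underlying request.

```javascript
// Hypothetical helper: rejects if `promise` has not settled within `ms`.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => {
      const err = new Error(`${label} timed out after ${ms}ms`);
      err.name = 'TimeoutError';
      reject(err);
    }, ms);
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([ promise, timeout ]).finally(() => clearTimeout(timer));
}

// A promise that never settles stands in for a hung HTTP call.
const hung = new Promise(() => {});
withTimeout(hung, 50, 'apollo poll')
  .catch(err => console.log(err.name, err.message));

// Inside subscribe() this could look like (10000 is an arbitrary choice):
//   const result = await withTimeout(
//     apollo.remoteConfigServiceSikpCache(apolloConfig), 10000, 'apollo poll');
```

Wrapping the call in try/catch inside `subscribe()` would then let the task log the timeout and wait for the next interval instead of hanging.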

|

@fhj857676433 Thanks, I will check again. |

|

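Mine disconnects right on schedule 10 minutes after startup, and I have not been able to find the cause. Does this happen to you too? nodejs.ClusterClientNoResponseError: client no response in 77978ms exceeding maxIdleTime 60000ms, maybe the connection is close on other side. node version v11.0.0 |

What if something does need to run for a long time? Is there another way to keep a long-running job going?

Just run it asynchronously, and do not block the main thread. |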
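One way to read "run it asynchronously" for long CPU-bound work is to split it into chunks and yield to the event loop between them, so timers and I/O can still run. The sketch below assumes the work can be divided this way; `sumInChunks` and the chunk size are made up for illustration. For work that cannot be split, Node's `worker_threads` module or a separate process would be alternatives.

```javascript
// Hypothetical example: sum 0..total-1 in slices, yielding between slices.
function sumInChunks(total, chunkSize) {
  return new Promise(resolve => {
    let i = 0;
    let sum = 0;
    function runChunk() {
      const end = Math.min(i + chunkSize, total);
      for (; i < end; i++) sum += i;
      if (i < total) {
        // Give pending timers and I/O callbacks a chance to run first.
        setImmediate(runChunk);
      } else {
        resolve(sum);
      }
    }
    runChunk();
  });
}

sumInChunks(1000, 100).then(sum => console.log(sum)); // 499500
```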

|

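I just created a new project and wrote a simple POST handler. It works fine in dev mode, but in start mode it reports this error. I spent a day on it and still could not figure out why.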

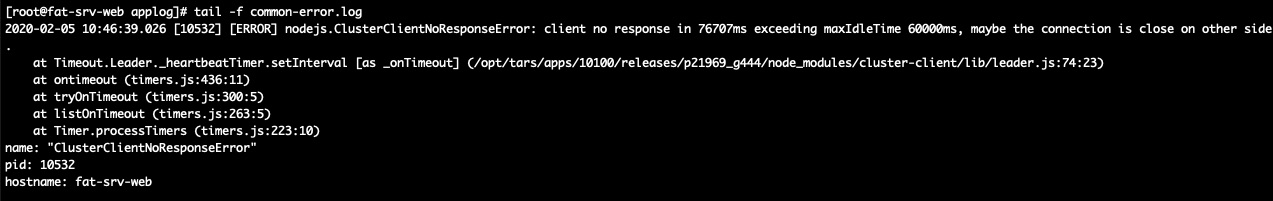

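egg.js is deployed in the alpha environment, and it frequently hangs. The first hang lasts roughly 5 to 20 seconds. The logs show the following:

egg-agent.log

common-error.log

This log file has the most entries.

web.log

This log file is empty.

The start command is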
