A combination of various neural code models for tackling programming-related tasks (like LogVar, function name prediction, and VarMisUse) using deep neural networks. The principal implementation is the Hierarchic model, that is designed especially for tasks involving data-flow-alike information-propagation requirements. We also provide a full implementation for code2seq by Alon et al., and a partial implementation for Learning to Represent Programs with Graphs By Allamanis et al.

This project consists the full implementation of the Hierarchic Code Encoder in PyTorch. The idea of the hierarchic model is to (i) shorten distances between related procedure's elements for effective information-propagation; (ii) utilize the effective paths-based information-propagation approach; (iii) scalability - efficiently support lengthy procedure; and (iv) provide a fine-grained exploration framework to identify the relevant elements/relations in the code's underlying structure that most benefit the model for the overall task.

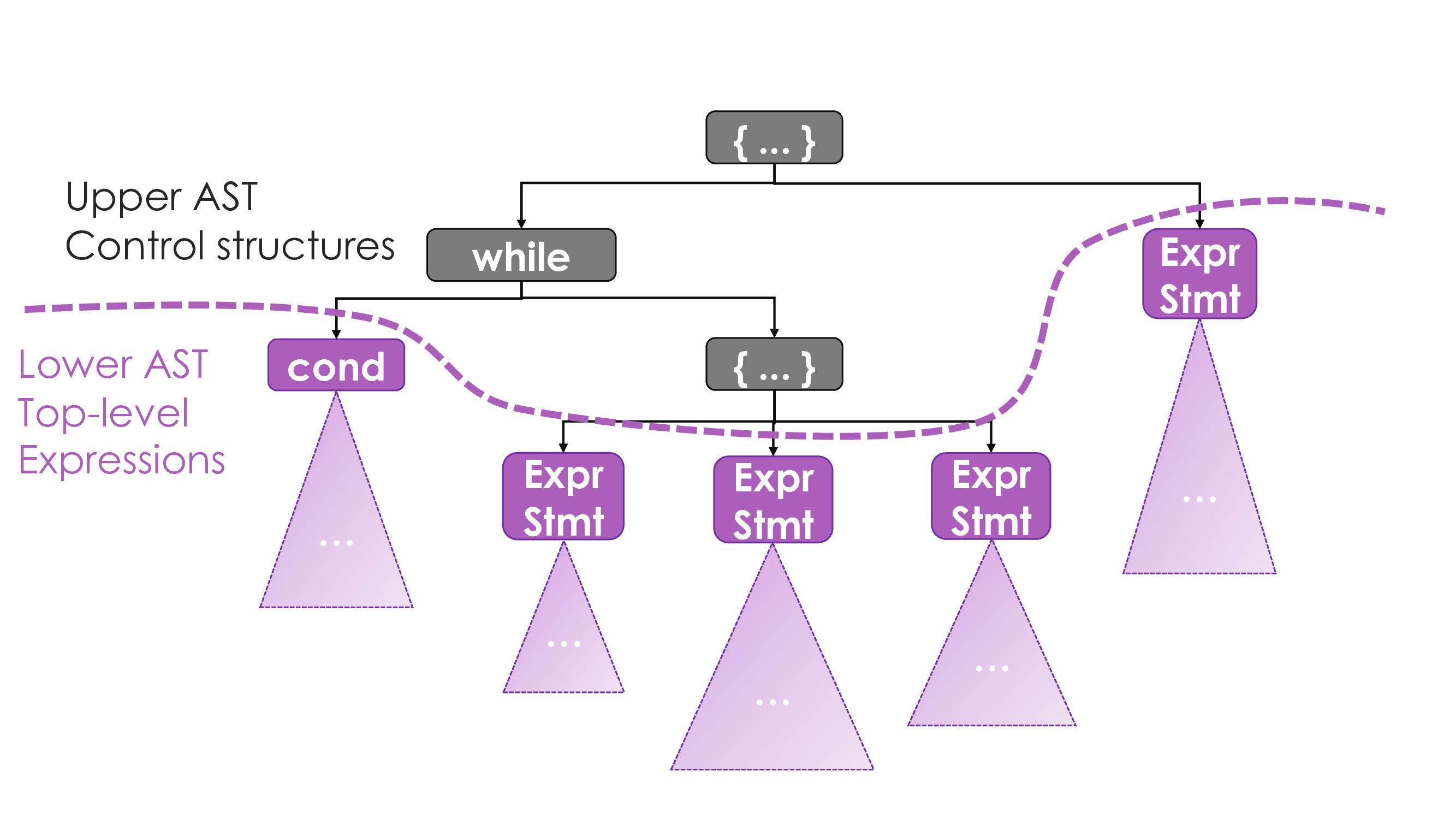

The procedure's code is being broken at the statement level to form the two-level hierarchic structure. The upper level consists control structures (loops, if-stmts, block-stmts), while the lower level consists of expression-statements / conditions. In fact, each statement in the lower-level is associated to a node in the procedure's statement-level CFG (control-flow graph).

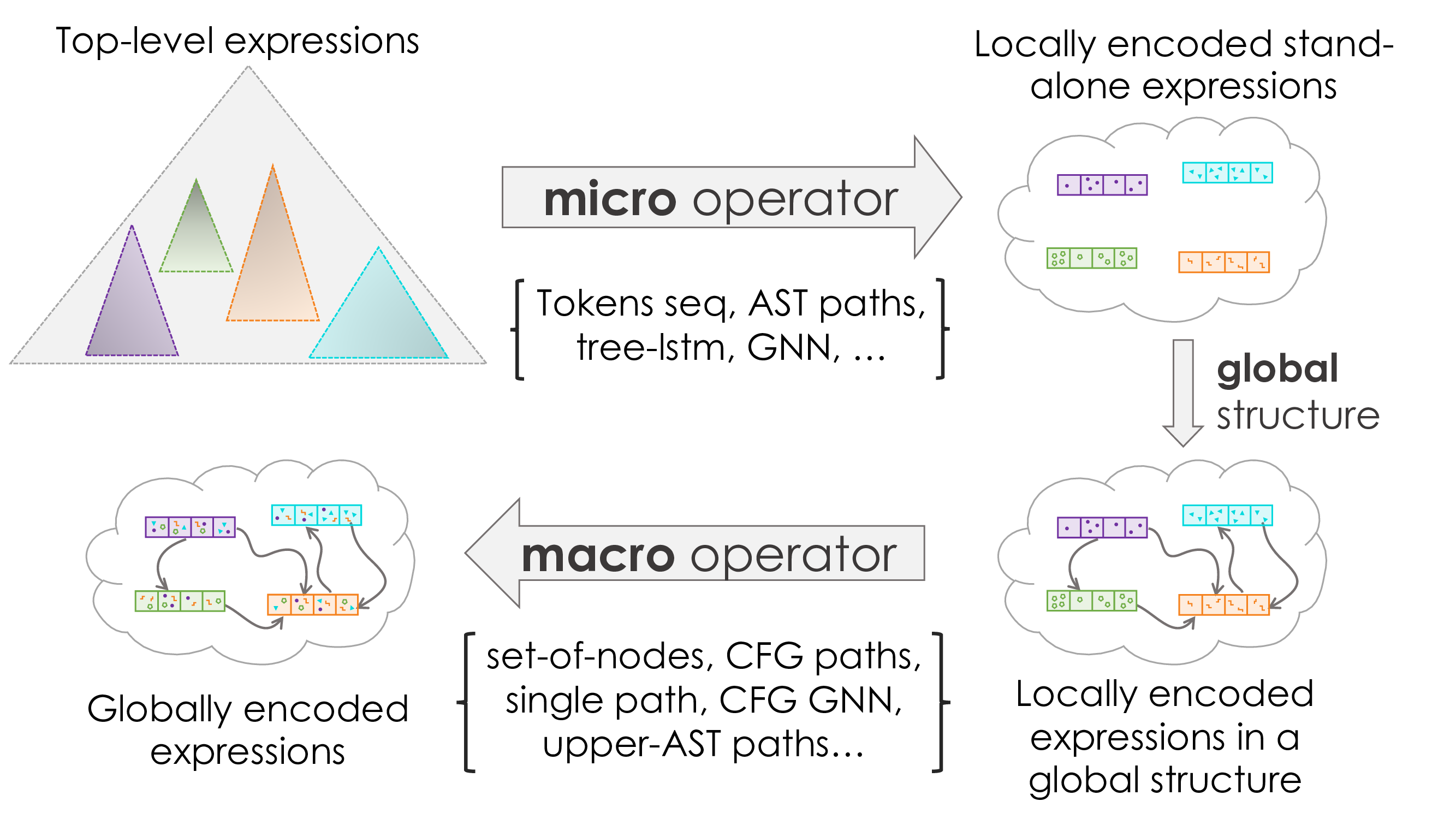

The hierarchic encoder first applies a micro operator to obtain a local encoding for each individual statement (CFG node), then it employs a macro operator to propagate information globally (between CFG nodes), then it updates the local encodings by mixing it with the globally-aware encodings of the related CFG nodes, and finally it employs the micro operator once again. The micro operator is the local independent encoder of the statements (statements are being encoded stand-alone in the first stage). We supply multiple options for the micro operator, including the followings: paths-based AST (w/wo collapse stage), TreeLSTM AST, GNN AST, AST leaves, flat tokens sequence. The macro operator is responsible for the global information propagation between CFG nodes. We supply the following macro operators: paths-based CFG, GNN over CFG, upper control AST (paths-based, GNN, TreeLSTM, leaves only), single sequence of CFG nodes (ordered by textual appearance in code), set of CFG nodes.

The entire set of parameters for the execution is formed as a nested classes structure rooted at the class ExecutionParameters. The ExecutionParameters includes the ExperimentSetting, which includes the CodeTaskProperties, the NDFAModelHyperParams, the NDFAModelTrainingHyperParams, and the DatasetProperties.

Because this project practically covers many different models, the class NDFAModelHyperParams and its sub-classes have many optional and choice fields to choose the concrete model to be used. The relevant modules are loaded dynamically upon execution according to these parameters. For example, (TODO)...

Generally, each module has its own parameters class that is used wherever this model is needed. For example, the module CFGPathsMacroEncoder uses the dedicated parameters class CFGPathsMacroEncoderParams, while the latter has a field of class SequenceEncoderParams because CFGPathsMacroEncoder uses the modeule SequenceEncoder accordingly. That is, two parallel trees are constructed - one is the tree of parameters (which is constructed independently to the second tree), and the other is the practical modules tree (that is constructed w.r.t the params tree).

The main executable script is ndfa.py. The parameters can be specified as arguments to the script or as a yaml file. If a trained model is being loaded, its hyper-parameters NDFAModelHyperParams are being loaded as well and the given ones (through arguments / yaml file) would be ignored.

As this projects covers implementation of a variety of different models, each model requires different preprocessed data. However, in our implementation, the single class MethodCodeInputTensors and its sub-classes cover all the necessary preprocessed tensors to support all possible models. Practically, if the entire preprocessed data (union of all fields needed for all possible models) were to be used, the data loading would become a bottleneck for the training/evaluating process. Thus, we allow most of the fields to be optional and we supply the functionality to emit the dedicated preprocessed dataset that matches a chosen model. Unless --keep_entire_preprocessed_dataset is stated, only the tensor fields that are relevant to the given model parameters are stored in the the output preprocessed data. For training a model, unless --no-use_compatible_pp_data_if_exists is set, the data loader seeks for a compatible preprocessed data. It uses the lighter preprocessed dataset out of all datasets that comprise of all the required fields for the chosen model.

The preprocessed dataset is stored as a persistant key-value data-structure, where the key is a simple index in the range [0..|dataset|-1]. This allows random-access to examples by their index, as necessary for shuffling the data while training. We use facebook's RocksDB embedded database as our default (and prefered) key-value store backend, while we also support other options like dbm and archives (zip, tar) possibly with compressions. RocksDB shown the best random-access read performance for our workload.

A preprocessed example is stored as a nested data classes structure, where each field is a tensor, a python's premitive (numeric/str), or an instance of another such data class. All the classes in this hierarchy inherit from TensorsDataClass to support advanced batching functionalities for flatened data.

Our input data is formed of several types of elements; that is, each pre-processed example contains multiple kinds of entities: CFG nodes, AST nodes, tokens, symbols, identifiers, and paths. Some of the hierarchic method's calculations involve elements of multiple kinds, and an element can participate in multiple calculations. We typically use the index of an element to address it in the computation. For example, constructing the local encodings of a CFG node requires combining all AST paths of the top-level expression sub-AST associated with it. To do so, we store dedicated mappings from the CFG node indices to their associated sub-AST node indices. Another example is a mapping between the identifiers' indices and the AST leaves (terminals) they appear in. Moreover, the AST paths themselves are stored as sequences of AST nodes indices. The same goes for the CFG paths as well.

Usually, training and evaluating neural networks is performed over batches of examples, following the SIMD (single instruction multiple data) computational scheme to maximize the utility of the accelerated processing units and make the training feasible under the available resources. However, the preprocessed example is stored on its own, while it should reoccur in various batches during training. Therefore, the batching takes place during data loading. Whenever a collection of examples are being collated into a batch, continuous tensors are being created containing all the elements in the batch. As a result, the indices of these elements are updated. Thus, the references to them have to be fixed accordingly to retain the indexing consistency.

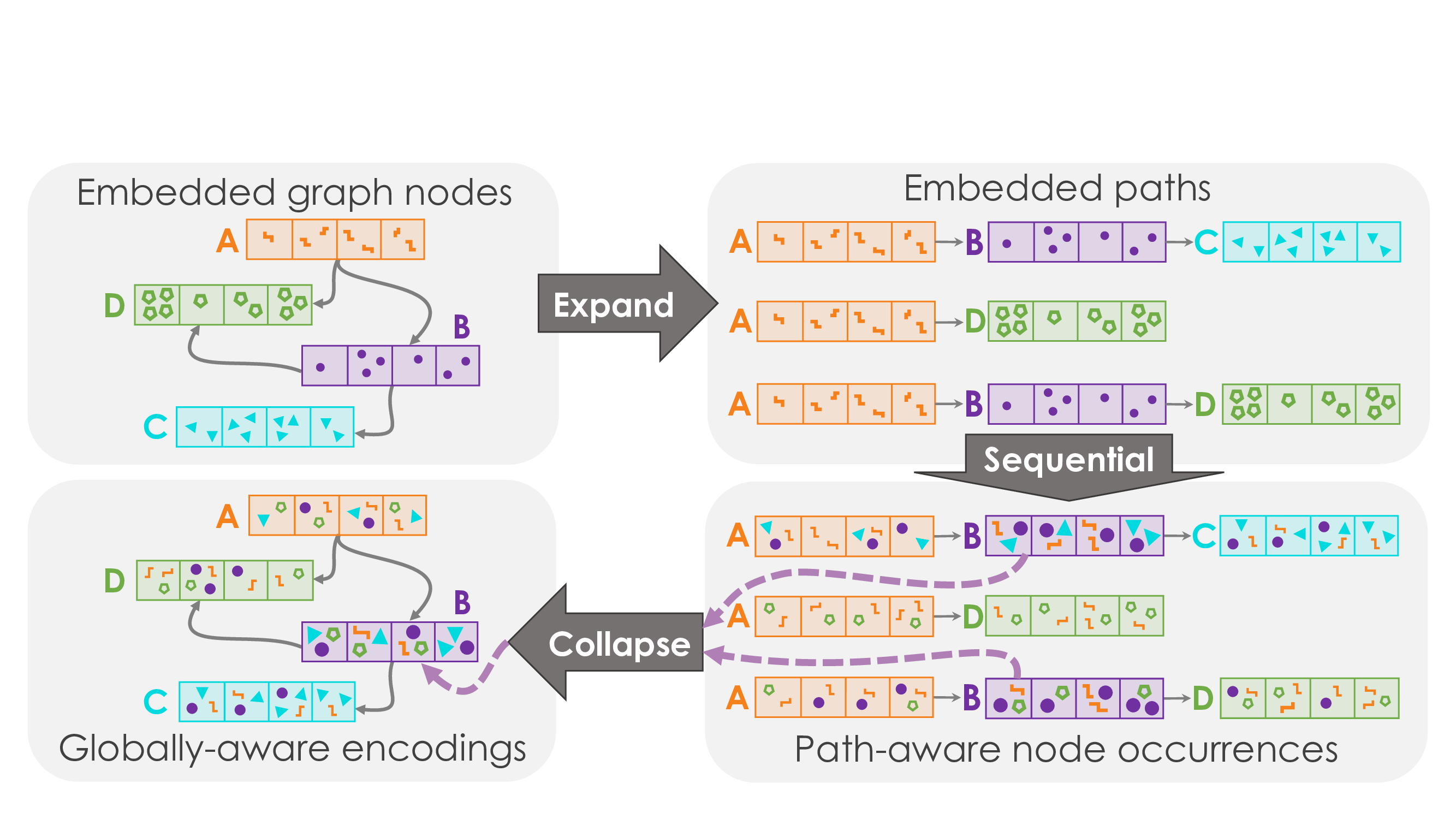

We extended the paths-based graph encoder (originally suggested by Alon et al. in code2seq). Our Expand-Collapse graph encoding framework expands the input graph into paths, uses sequential encoder to process it (propagate information along the paths individually), and then collapses the graph back into nodes representation (encodings of node occurrences scattered along paths are folded back into single node representation).

We integrate this approach in the hierarchic model both as a micro operator (applied over the top-level expressions sub-ASTs) and as a macro operator (applied over the CFG).