Depth Value Discrepancies #200

Comments

Issue thread regarding this question.

Hey @JJLimmm, I am currently working on a comparable project and am also struggling to get valid depth values for my 2D RGB keypoints. My first attempt was to take the transformed_depth_image and simply look up the depth value at the [x,y] coordinate of the RGB pose keypoint. At first this looked good, but further investigation revealed a major issue: the depth value returned by the transformed_depth_image is not the true depth (Euclidean distance) from the object to the sensor; it is already the Z-coordinate in one of the two 3D coordinate systems. Some tests confirmed this, even though one of the Azure Kinect developers described it differently: (Github Issue). When I now convert [x,y] (2D image plane) and Z (3D coordinate system) to a set of 3D coordinates, either by hand or with the calibration.convert_2d_to_3d() function, the resulting coordinates do not match reality because the given depth value is misinterpreted. In my case, the hip of the 3D person is tilted forward towards the camera because it lies approximately on the Z axis. Have you encountered similar anomalies, and how did you solve them? :)
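To make the distinction between a Z-coordinate and a Euclidean distance concrete, here is a minimal sketch under an ideal pinhole model. The intrinsics (`FX`, `FY`, `CX`, `CY`) are made-up values, lens distortion is ignored, and the function names are illustrative only; none of them come from the Azure Kinect SDK or a Python wrapper.

```python
import math

# Hypothetical pinhole intrinsics in pixels (NOT a real device calibration).
FX, FY = 600.0, 600.0
CX, CY = 640.0, 360.0


def unproject(u, v, z_mm):
    """Back-project pixel (u, v) with Z-depth z_mm to a 3D point in mm.

    Assumes an ideal pinhole camera without lens distortion.
    """
    x = (u - CX) / FX * z_mm
    y = (v - CY) / FY * z_mm
    return (x, y, z_mm)


def euclidean_range(point):
    """Straight-line distance from the camera origin to a 3D point."""
    return math.sqrt(sum(c * c for c in point))


def range_to_z(u, v, range_mm):
    """Convert a Euclidean range measured along the ray of pixel (u, v) to Z-depth."""
    rx = (u - CX) / FX
    ry = (v - CY) / FY
    return range_mm / math.sqrt(rx * rx + ry * ry + 1.0)


# A pixel 300 px to the right of the principal point, with a Z-depth of 1000 mm.
point = unproject(940.0, 360.0, 1000.0)
distance = euclidean_range(point)          # larger than the Z-depth
z_again = range_to_z(940.0, 360.0, distance)  # recovers the Z-depth
```

At the principal point the two values coincide; the further a pixel lies from it, the more the Euclidean distance exceeds Z. Feeding one into a function that expects the other therefore shifts off-center points the most.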

Hey @nliedmey, yes, I noticed this discrepancy as well between the calibration from the Azure SDK and our own conversion. Currently I cannot find a way to create a custom calibration that gives me the depth at the 2D keypoint coordinate I want; it would take a lot of time, and I don't have that luxury right now. For my project, I'm just taking the depth value obtained in the 2D RGB space at face value, and if it shows an invalid depth, I skip that frame and use the next one.
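A minimal sketch of that skip-invalid-frames approach might look like the following. It assumes that the depth image is a 2D array of millimetre values already aligned to the RGB image and that an invalid pixel is reported as 0; both helper names are hypothetical and not part of any SDK.

```python
def depth_at_keypoint(depth_image, x, y):
    """Return the depth in mm at pixel (x, y), or None if it is unusable.

    depth_image is any 2D row-major container (nested lists or a NumPy array).
    A value of 0 is treated as invalid (assumption, see the text above).
    """
    height = len(depth_image)
    width = len(depth_image[0]) if height else 0
    col, row = int(round(x)), int(round(y))
    if not (0 <= row < height and 0 <= col < width):
        return None  # keypoint falls outside the image
    value = int(depth_image[row][col])
    return value if value > 0 else None


def first_valid_depth(frames, x, y):
    """Walk through frames and return (frame_index, depth) for the first valid one."""
    for index, frame in enumerate(frames):
        depth = depth_at_keypoint(frame, x, y)
        if depth is not None:
            return index, depth
    return None  # no frame had a valid depth at this keypoint


# Two tiny 2x2 example frames: the first has no valid depth at (1, 1).
frames = [
    [[0, 0], [0, 0]],
    [[0, 0], [0, 624]],
]
result = first_valid_depth(frames, 1, 1)
```

In a real pipeline the keypoint would be re-detected on every frame instead of reusing the same (x, y); the fixed coordinate here only keeps the example short.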

Hi all,

Just a question regarding depth information obtained via the Python wrapper and the Body Tracking SDK.

For more context, see also issue #92, which was discussed in another Python wrapper repo.

Background Info:

I am currently testing how I can use a 2D pose estimation model (like OpenPose or any other) together with the depth sensor data to obtain accurate 3D coordinates for the detected keypoints, instead of using the official Azure Kinect Body Tracking SDK.

However, to make sure that I was getting the correct depth value corresponding to the SDK's body tracking keypoints, I had to verify that the method for converting the model's 2D keypoints into the 3D depth coordinate system gives me the same results as the Body Tracking SDK. For the comparison, I used the neck keypoint from the Body Tracking SDK as the reference, converted it to a 2D keypoint (x, y), and then transformed those coordinates back into the 3D depth coordinate system (the same coordinate system used for the Body Tracking keypoint results).
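As a rough illustration of this 3D to 2D to 3D round trip, the sketch below uses an ideal pinhole model instead of the SDK calibration functions. The intrinsics and the x/y values of the joint are hypothetical; only the two z values (715.698 and 624) are taken from this post. It shows that the round trip reproduces the original point only when the same z is used for the back-projection.

```python
# Hypothetical pinhole intrinsics in pixels (NOT the real device calibration).
FX, FY, CX, CY = 500.0, 500.0, 320.0, 288.0


def project_to_pixel(point):
    """Project a 3D point (x, y, z) in mm onto the image plane, ignoring distortion."""
    x, y, z = point
    return (FX * x / z + CX, FY * y / z + CY)


def back_project(pixel, z):
    """Lift a pixel (u, v) back to 3D using the given Z-depth in mm."""
    u, v = pixel
    return ((u - CX) / FX * z, (v - CY) / FY * z, z)


# x and y are made up; z is the Body Tracking SDK value quoted in this issue.
joint = (100.0, -200.0, 715.698)
pixel = project_to_pixel(joint)

same_z = back_project(pixel, joint[2])  # z of the original joint
other_z = back_project(pixel, 624.0)    # z read from the depth image in this issue
```

With the original z, `same_z` matches `joint` up to floating-point error. With the smaller z, `other_z` is the same point scaled by 624 / 715.698, so x and y shrink along with z. This does not explain where the different z comes from; it only shows how such a difference propagates into the converted coordinates.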

I perform the following steps before comparing the xyz values obtained:

After performing these steps, I noticed a difference between the z value (depth) from the manual conversion and the one from the Body Tracking SDK.

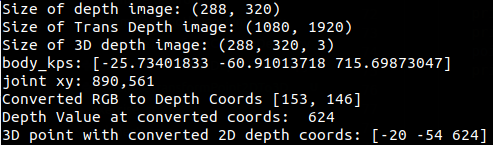

(As shown in the picture above, body_kps is the xyz coordinate obtained from the Body Tracking SDK, where the z value (depth) is 715.698... The converted xyz coordinate is the "3D point with converted 2D ......." entry, where the z value is 624.)

Does anybody know if I am doing anything wrong, or has anyone faced similar issues?

Am I supposed to use both the 2D depth and IR images to find the actual depth? (I saw that the Body Tracking SDK documentation uses depth and IR from the Capture object.)

If so, how do I combine these two images (depth and IR) to get the proper depth value for the coordinates converted from the 2D RGB space?
