-

Notifications

You must be signed in to change notification settings - Fork 815

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

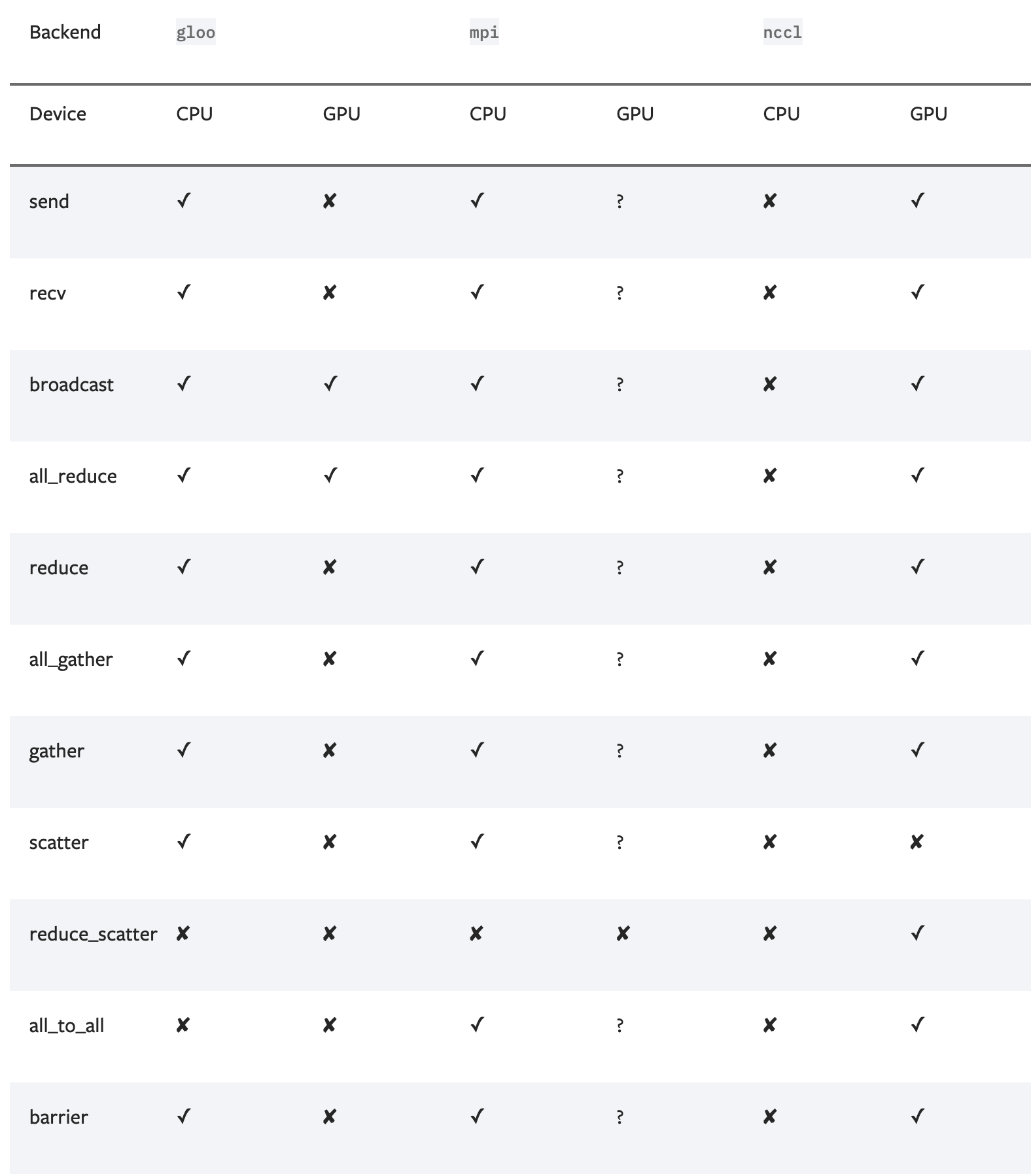

ProcessGroupNCCL does not support scatter #144


Can you paste the complete trace?


The trace is as follows:

I believe this is because NCCL does not support scatter. If you update PyTorch to the latest version, the code should use the all-to-all (all2all) primitive directly. For scatter, I guess you have to use MPI. We will also update the documentation to make this easier to debug.
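To see why an all-to-all exchange can stand in for a scatter, here is a toy single-process simulation in plain Python. It does not use PyTorch, and `all_to_all` and `scatter_via_all_to_all` are hypothetical helpers written only for illustration, not DLRM or `torch.distributed` APIs. The root puts one chunk per destination rank into its send list, every other rank sends placeholders, and each rank keeps only what arrived from the root.

```python
from typing import Any, List


def all_to_all(send: List[List[Any]]) -> List[List[Any]]:
    """Simulate an all-to-all exchange across len(send) ranks.

    send[src][dst] is the chunk rank `src` sends to rank `dst`;
    the result recv[dst][src] is what rank `dst` received from rank `src`.
    """
    world_size = len(send)
    return [[send[src][dst] for src in range(world_size)] for dst in range(world_size)]


def scatter_via_all_to_all(chunks: List[Any], root: int, world_size: int) -> List[Any]:
    """Emulate scatter(chunks, root) using only the all-to-all exchange above.

    Returns the chunk each rank ends up holding, indexed by rank.
    """
    if len(chunks) != world_size:
        raise ValueError("scatter needs exactly one chunk per rank")
    # Only the root contributes real data; other ranks send None placeholders.
    send = [list(chunks) if rank == root else [None] * world_size
            for rank in range(world_size)]
    recv = all_to_all(send)
    # Each rank keeps only the chunk that came from the root.
    return [recv[rank][root] for rank in range(world_size)]


print(scatter_via_all_to_all([10, 20, 30, 40], root=0, world_size=4))  # [10, 20, 30, 40]
```

The placeholders are wasted traffic in this toy version. In a real collective the non-root ranks would send empty buffers instead. For example, `torch.distributed.all_to_all_single` accepts per-rank `input_split_sizes`/`output_split_sizes`, which could be set to zero, but that mapping is an assumption and not necessarily what DLRM does.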


It looks like this is resolved.

When running DLRM in distributed mode using mpirun with NCCL as the backend, there is a runtime error: "ProcessGroupNCCL does not support scatter". Is there any way to solve this?

Thanks!
