
Strange Laplacian smoothing effect #432

Comments


Laplacian smoothing (with the

Where L is the Laplacian matrix (or a version of it; there are many versions in the literature), as follows: pytorch3d/pytorch3d/structures/meshes.py Lines 1060 to 1071 in 83fef0a

In terms of math, the loss tries to bring each vertex close to the weighted average of its neighboring vertices (weighted by the degree of the vertex). Other forms of the Laplacian take into account the length of the edges (e.g. Taubin smoothing). So the important question is whether the math behind our mesh Laplacian smoothing loss is what you actually want.
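To make the description above concrete, here is a minimal pure-Python sketch of the uniform Laplacian term: each vertex is compared with the plain average of its one-ring neighbours (each neighbour weighted by 1/degree), and the loss is the mean of the resulting per-vertex norms. It is intended to mirror the "uniform" method of mesh_laplacian_smoothing for a single mesh, but the function names are hypothetical and treating isolated vertices as already smooth is an assumption of this sketch.

```python
import math
from typing import List, Sequence, Set, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]


def uniform_laplacian(verts: Sequence[Vec3], faces: Sequence[Face]) -> List[Vec3]:
    """Return delta_i = (mean of the neighbours of v_i) - v_i for every vertex."""
    neighbours: List[Set[int]] = [set() for _ in verts]
    for a, b, c in faces:
        # Each triangle contributes its three (undirected) edges.
        for i, j in ((a, b), (b, c), (c, a)):
            neighbours[i].add(j)
            neighbours[j].add(i)

    deltas: List[Vec3] = []
    for i, v in enumerate(verts):
        nbrs = neighbours[i]
        if not nbrs:
            # Isolated vertex: treated as already smooth in this sketch (assumption).
            deltas.append((0.0, 0.0, 0.0))
            continue
        # Uniform weights: every neighbour counts 1/degree.
        mean = [sum(verts[j][k] for j in nbrs) / len(nbrs) for k in range(3)]
        deltas.append((mean[0] - v[0], mean[1] - v[1], mean[2] - v[2]))
    return deltas


def laplacian_smoothing_loss(verts: Sequence[Vec3], faces: Sequence[Face]) -> float:
    """Mean over vertices of the Euclidean norm of delta_i (single mesh)."""
    deltas = uniform_laplacian(verts, faces)
    total = sum(math.sqrt(dx * dx + dy * dy + dz * dz) for dx, dy, dz in deltas)
    return total / len(verts)


# A flat "plus" fan: the centre vertex sits exactly at the mean of its neighbours.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3), (0, 3, 4), (0, 4, 1)]
print(uniform_laplacian(verts, faces)[0])  # (0.0, 0.0, 0.0)
print(laplacian_smoothing_loss(verts, faces))  # 0.8
```

The centre vertex contributes nothing, while each boundary vertex is pulled toward the centre with a norm of 1, which is why the loss is 4/5. Note that this term only measures the distance to the neighbour average; it says nothing about keeping the original shape.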

I thought this was the case already; that's why I don't understand why it creates those spikes. Shouldn't those vertices and their neighbours be smoothed? I intend to use the loss to keep the vertex offsets from overfitting a given target by smoothing the resulting mesh. I'm also considering the mesh edge loss, but the Laplacian looked like a good option, especially since it is used in the mesh fitting tutorial.


It's all in the math, and the spikes are explained by the math. You could try playing with the weight on the loss; perhaps you need to set it to a lower value so that it doesn't do something aggressive. Or you could add further regularizers, such as edge length and normal consistency. All of these are available in PyTorch3D.


I can confirm this behavior. Perhaps it really is a consequence of the math, but I'm not sure in which way; I would appreciate it if someone could explain it.


So far, I have fixed the spikes by replacing the sum with the L2 norm in:

to: return torch.linalg.norm(loss) / N

As far as I understand,
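A small pure-Python illustration of why swapping the sum for the L2 norm can change the result, assuming loss at that point is the 1-D tensor of (weighted) per-vertex Laplacian norms. The two functions below are hypothetical stand-ins for loss.sum() and torch.linalg.norm(loss) applied to that vector; this is one plausible reading of the fix, not a confirmed explanation.

```python
import math
from typing import Sequence


def sum_of_norms(norms: Sequence[float]) -> float:
    """Plain sum of per-vertex Laplacian norms (the loss.sum() form)."""
    return sum(norms)


def l2_of_norms(norms: Sequence[float]) -> float:
    """Euclidean norm of the same vector (the torch.linalg.norm(loss) form)."""
    return math.sqrt(sum(n * n for n in norms))


# Same total deviation, either concentrated in one vertex or spread over four.
concentrated = [1.0, 0.0, 0.0, 0.0]
spread = [0.25, 0.25, 0.25, 0.25]

print(sum_of_norms(concentrated), sum_of_norms(spread))  # 1.0 1.0
print(l2_of_norms(concentrated), l2_of_norms(spread))  # 1.0 0.5
```

The plain sum is indifferent between putting the whole deviation on one vertex (a spike) and spreading it evenly, while the L2 form is half as large for the spread-out case. In gradient terms, each vertex under the sum gets a pull of unit magnitude in the direction delta_i / ||delta_i|| regardless of how small delta_i is, whereas under the L2 form vertex i is weighted by n_i / ||n||, so larger deviations are pulled harder.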


Did you also try using a different optimizer or smaller learning rates? Try converting it into an L2 norm or a hinge loss.
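The hinge suggestion is not spelled out in the thread; one possible reading, sketched below in plain Python, is to penalize only the part of each per-vertex Laplacian norm that exceeds a tolerance, so that small, legitimate curvature is left alone. The function name and the margin parameter are hypothetical.

```python
from typing import Sequence


def hinge_laplacian_loss(norms: Sequence[float], margin: float) -> float:
    """Mean of max(0, n - margin): deviations up to `margin` cost nothing."""
    return sum(max(0.0, n - margin) for n in norms) / len(norms)


norms = [0.05, 0.1, 0.3]  # hypothetical per-vertex Laplacian norms
print(hinge_laplacian_loss(norms, margin=0.1))  # only the 0.3 vertex contributes
```

In a PyTorch loss, the same idea could be written as torch.relu(norms - margin).mean(), where norms is the per-vertex norm tensor; the margin would have to be tuned to the mesh scale.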

Hi.

Sorry if this is not directly related to PyTorch3D or if it is caused by a mistake I've made.

I'm having trouble understanding how the Laplacian smoothing loss works. Reading the paper linked in the documentation, I would expect the smoothed mesh to stay more or less close to the original shape. I want to use this regularizer inside a bigger optimization problem, but I want to be sure I'm using it correctly and that I know what I am doing.

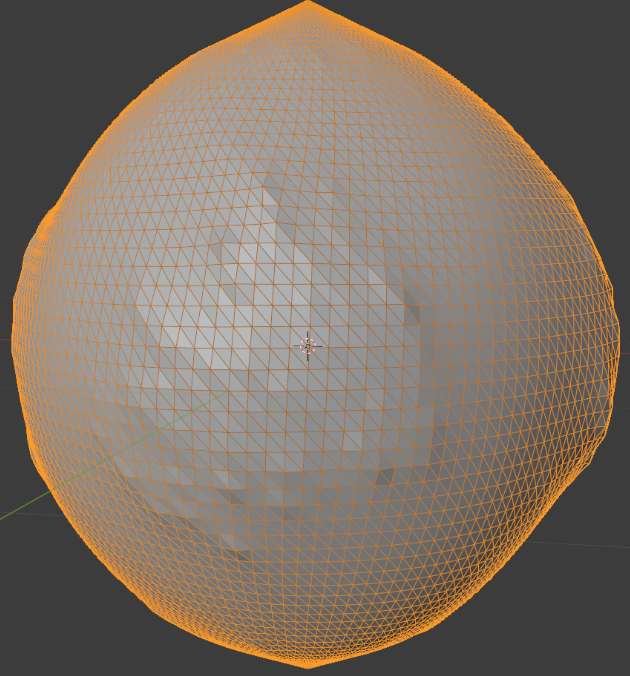

I've tried optimizing some meshes using only the Laplacian loss, but even with regular meshes it somehow breaks the shape, usually by creating spikes on the mesh. See the examples below.

Am I using it wrong? Is this expected?

Thanks in advance

Examples

Initial mesh:

Resulting mesh after 3k iterations:

If I use an icosphere instead of a UV sphere, the result is better, but some vertices seem to collapse:

Initial mesh:

Resulting mesh after 300 iterations:

On more irregular meshes, spikes clearly emerge from the vertices where adjacent faces have different normals:

Initial mesh:

Result after 1k iterations:

Instructions To Reproduce the Issue:

Code
