New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

How to set random seeds fixed #618

Comments

|

Hi, |

|

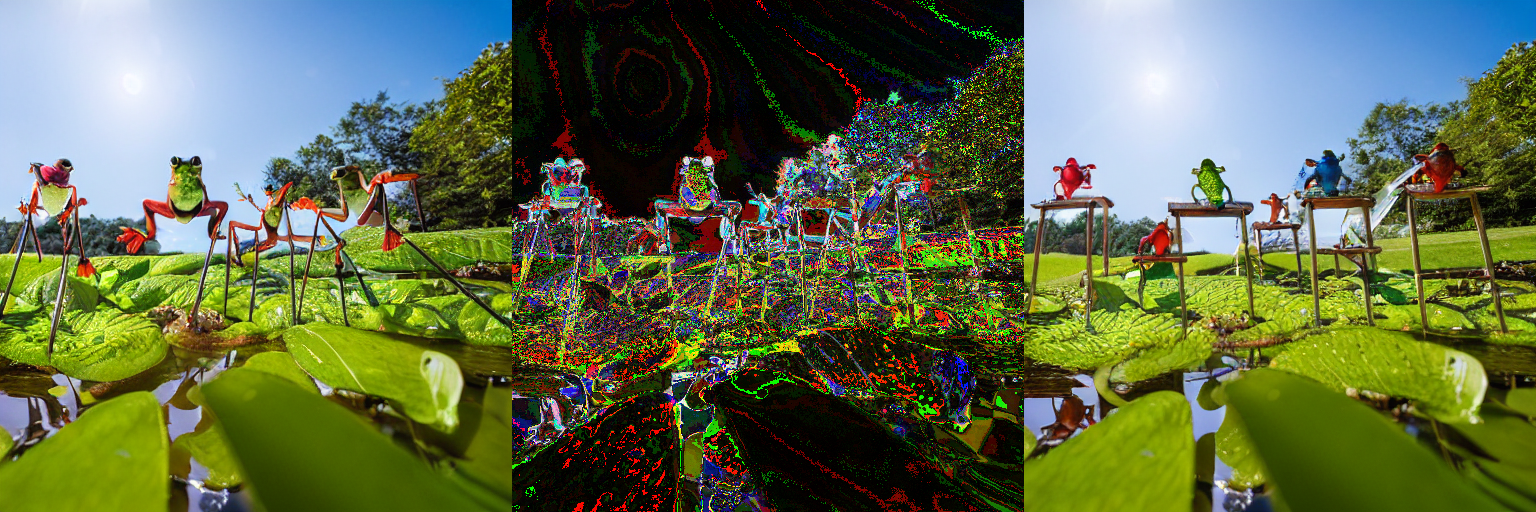

Got the same issue, enabling xformers in my project causes results to be slightly random even with the seed and other settings kept constant. Observed on Stable Diffusion 1.5 and 2.1 using huggingface/diffusers Stable Diffusion pipeline with the KDPM2 Ancestral Discrete scheduler. The differences are very subtle, see example: Note that the two xformers runs were performed sequentially here. On some other prompts the differences may be more drastic, I'm not exactly sure why, but the general layout of the image will remain the same. |

|

Hello, thanks for the report. |

|

Also might be a duplicate of #624 which contains a minimal repro |

I agree. There is a documentation on reproducibility for Pytorch. Though, an application like Stable Diffusion may be special for general machine learning applications. Since test_mem_eff_attention.py guarantees that the difference from the reference implementation (deterministic behavior as far as I have tried) is reasonably small (4e-3 in fp16), the accuracy required to reproduce the picture generated by Stable Diffusion seems more stringent. |

|

The use-case of stable diffusion is quite extreme because we're applying mem-eff multiple times per model forward, and repeating that dozen times, which can amplify even very small variations. However I totally agree with you that we should: I added the "Good first issue" tag, so we welcome contributions for that - if you want to add a pull request to address either of those, that would be great :) |

|

@danthe3rd I have not made a pull request yet, but I wrote it anyway. main...takuma104:xformers:non-determistic-warn Please let me know if there is an appropriate wording for the document, as I am not a native speaker. I have a few questions.

|

|

The code looks great! I didn't know about this Regarding Flash, I don't see a way to call "alertNotDeterministic" from python, but maybe @tridao can add it to Flash-Attention later EDIT: Not sure if Flash is deterministic or not actually, would be worth testing |

|

@danthe3rd Is this about the forward pass or the backward pass? |

|

Oh that's great news thanks! |

|

@danthe3rd I'm a little concerned about this alertNotDeterministic(). A warning like this will appear. This warning seems to be caused by Pytorch. Hmmm... @tridao |

|

I have tried the patch to Diffusers to force use FlashAttention , it seems to work without stopping Unet inference, but I got the following error in VAE decode.

The shape of the q,k,v inputs are all (1, 1, 9216, 512). I tried specifying this shape in the minimal code, and sure enough, I get the same error. I followed up with the debugger and it seems that the K in q and v exceeded SUPPORTED_MAX_K and this caused a Value Error. Hmmm.. :( |

|

Flash attention does not support K>128 unfortunately. But I believe you are doing something wrong there. Is your sequence of size 1?! |

|

Sorry, I have to make a correction. Here is the code I used: The following line was reported just before the Value Error. I have the batch size set at 1. I interpreted this result as (B,M,H,K)=(1,1,9216,512), but this is incorrect, since the following memory_efficient_attention() specifications:

so it had to be interpreted as (B,M,H,K)=(1,9216,1,512). So the sequence length is 9216. |

|

Usually it uses Flash Attention, and if it is not available, it fallbacks to Cutlass, which gave me almost the result I wanted. Thanks for the information on Flash Attention. |

|

So now it is normal that generations with exact same seed will have tiny differences with xformers? I check my old generations that I've made in december and a bit earlier and every time I generated images with exact same settings it gave me exact same 100% results. Now I will get changes and I can't prevent that? Cuz sometimes even small change can cauze a bad generation, and eye, a finger, etc. And when I make 100+ 512x512 images to regenerate them with highres fix, I can't cuz I won't get the same image. |

❓ Questions and Help

Different results occur when I run the same code twice. And the set_seed func run before all.

The text was updated successfully, but these errors were encountered: