New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Limit throughput of function deployments #1372

Comments

|

I have encountered the same issue and considered the same fix. |

|

Hah, we have an internal bug for specifically this! Would love your help in implementing :) thanks for offering! Internal issue reference: 126680733 |

|

I'm still going to implement it, I started with migrating some of the files I'll need to touch from JavaScript to TypeScript. Today I received some more information from Google Cloud Support which I might end up incorporating in the PR for this issue:

Request limit for one of my projects was actually reduced to 50 (the default is 80):

My initial intention was to fire 80 requests and then fire another batch after 100 seconds and so on, with a capped exponential backoff for contention errors. Given the above, I'd go for firing 1 request every 1.25 seconds so it complies with the default limit and the suggestion quoted above. In the future we might want to think of exposing this value (time between deployments) as a CLI option for occasions like this, but I'll make a separate issue for it once the PR with 1.25 lands in master. Let me know what you think, if this approach would be ok with you. Maybe you have some other ideas? |

|

As discussed some time ago, I don't have capacity at the moment to work on this feature, so I changed the title to reflect it. |

|

I was just about to log the same feature request to this repo. Looks like #1858 is still a WIP? Just wanted to update this issue for future readers. |

|

I think this issue impacts a lot of users. Would love to see a fix! If someone can outline the high level steps on what needs to change in the repo, I'd give it a shot. I have no experience contributing to this tool so some direction would probably be helpful. |

|

Folks who are still experiencing this issue, can you please make sure you're on the latest version of the CLI and provide details (how many functions you're deploying, |

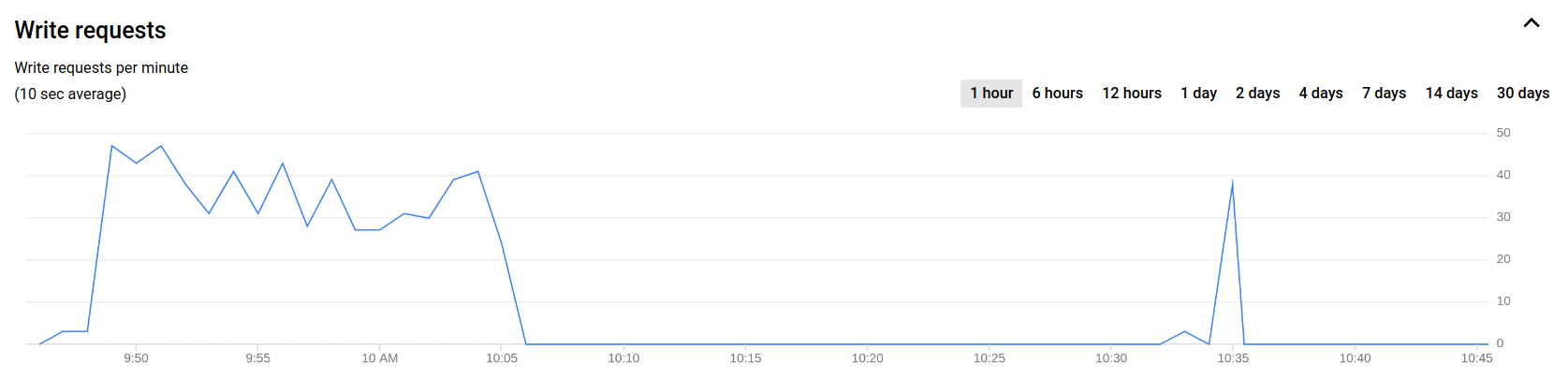

@mbleigh I'm still experiencing that issue. I have 598 Cloud Functions in one of the projects, attempting to deploy it consistently exceeds the "Cloud Functions: Write requests per minute" quota, which is understandable. I didn't look into the recent changes in depth, but my impression was that it reduced the build time (one container build no matter how many functions we're deploying), not how the orchestrator to switches Cloud Functions versions, which historically was the reason why this quota existed and cannot be increased. I currently have a script in place which does change detection and deploys a minimal set of Cloud Functions affected, while making sure to throttle the deployments. Here's how my deployments looks like: As you can see, ~10 AM a change was merged which affected a lot of functions, so they were deployed at a rate of 50/minute (all custom tooling, it's not built into To summarize, as far as I'm aware, there were no changes since I reported the issue which would help alleviate this issue. |

I would love to know more about this script :) |

|

@jsakas Copy-pasting here so that you have a starting point, but that's probably not going to work out of the box for your project. You'll need to install some dependencies, fix imports etc. It uses a TypeScript compiler for traversing files, but even if your project is plain JS, you can still make it work - TypeScript compiler can understand JS files. // getChangedFunctions.ts

import { basename, dirname, join, resolve } from 'path';

import { createProgram, SourceFile } from 'typescript';

const FUNCTIONS_REGEXP = /.*src\/(?<type>functions)\/(?<resourceName>[A-Za-z]*)\/(?<filename>.*)/u;

interface SourceNode extends SourceFile {

readonly imports?: ReadonlyArray<{ text: string }>;

}

const program = createProgram(

[join(__dirname, '../../../../src/index.ts')],

{},

);

const sources = program.getSourceFiles() as SourceNode[];

const dependantsMap = new Map<string, string[]>();

sources.forEach((source: SourceNode): void => {

const sourcePath = source.fileName;

const sourceDirectory = dirname(sourcePath);

const resolvedSourcePath = require.resolve(sourcePath);

if (sourcePath.includes('node_modules') === false) {

(source.imports ?? []).forEach(

({ text: importPath }: { text: string }): void => {

if (importPath.startsWith('.')) {

const resolvedDependencyPath = require.resolve(

resolve(sourceDirectory, importPath),

);

// Get or create a reference to dependants for a module.

const maybeDependants: string[] | undefined = dependantsMap.get(

resolvedDependencyPath,

);

const dependants = maybeDependants ?? [];

if (maybeDependants === undefined) {

dependantsMap.set(resolvedDependencyPath, dependants);

}

// Add a current source file as a dependant of an imported file.

dependants.push(resolvedSourcePath);

}

},

);

}

});

const findChangedDeep = (

root: string,

traversed: Set<string> = new Set(),

): string[] => {

// Get dependants of a file which were not already traversed.

const dependants = dependantsMap.get(root);

const traversableDependants =

dependants?.filter(

(dependant: string): boolean => traversed.has(dependant) === false,

) ?? [];

// Add all dependants to the list of traversed.

dependants?.forEach((dependant: string): void => {

traversed.add(dependant);

});

return [

root,

...traversableDependants.flatMap((dependant: string): string[] =>

findChangedDeep(dependant, traversed),

),

];

};

export const getChangedFunctions = async (

deployableFunctions: string[],

affectedFiles: string[],

): Promise<string[]> => {

const processedFiles = affectedFiles.flatMap(

(affectedFile: string): string[] =>

affectedFile.includes('.spec.ts') ? [] : findChangedDeep(affectedFile),

);

return processedFiles

.reduce((accumulator: string[], path: string): string[] => {

const matches = FUNCTIONS_REGEXP.exec(path);

if (matches?.groups !== undefined) {

const { resourceName: functionName } = matches.groups;

return deployableFunctions

.filter((deployableFunction): boolean =>

deployableFunction.startsWith(functionName),

)

.reduce((functionAccumulator, matchingFunction): string[] => {

if (

deployableFunctions.includes(matchingFunction) &&

functionAccumulator.includes(matchingFunction) === false

) {

return [matchingFunction, ...functionAccumulator];

}

return functionAccumulator;

}, accumulator);

}

return accumulator;

}, [])

.sort((first: string, second: string): number =>

first < second ? -1 : second < first ? 1 : 0,

);

};// index.ts

import * as depcheck from 'depcheck';

import { getLastCommit } from 'git-last-commit';

import { getChangedFilesForRoots } from 'jest-changed-files';

import { join } from 'path';

import { splitEvery } from 'ramda';

import { promisify } from 'util';

import { runFirebaseDeploy } from '../utils/firebaseDeploymentHelper';

import { getChangedFunctions } from './getChangedFunctions';

/* eslint-disable @typescript-eslint/no-require-imports */

/* eslint-disable @typescript-eslint/no-var-requires */

// Only built program can be deployed.

const functions = require('../../../../lib');

/* eslint-enable @typescript-eslint/no-require-imports */

/* eslint-enable @typescript-eslint/no-var-requires */

const DEPLOYMENT_BATCH_SIZE = 25;

const PRODUCTION_PROJECT_ID = '!!!change-me!!!';

const DEPENDENCY_CHECK_OPTIONS: depcheck.Options = {

detectors: [

depcheck.detector.typescriptImportEqualsDeclaration,

depcheck.detector.typescriptImportType,

depcheck.detector.requireCallExpression,

depcheck.detector.importDeclaration,

],

ignoreBinPackage: false,

ignoreDirs: ['scripts', 'test'],

parsers: {

'*.ts': depcheck.parser.typescript,

},

skipMissing: false,

};

/**

* Commit message that should trigger update of all functions.

*/

const deployAllFunctions = (commitSubject: string): boolean =>

commitSubject.startsWith('chore(deps-dev): bump firebase-tools') ||

commitSubject.startsWith('chore(deps-dev): bump json-perf-loader') ||

commitSubject.startsWith('chore(deps-dev): bump typescript') ||

commitSubject.startsWith('chore(deps-dev): bump webpack') ||

commitSubject.startsWith('chore(deps): bump source-map-support');

/**

* Exhaustive search of which functions should be deployed.

* - If the commit message starts with 'chore(deps)' it builds a dependency

* tree and updates the functions using the updated dependency.

* - Otherwise check the files that were changed in the previous commit and

* determine which functions are affected.

*/

const getFunctionsToDeploy = async (): Promise<string[]> => {

const deployableFunctions = Object.keys(functions);

const lastCommit = await promisify(getLastCommit)();

if (deployAllFunctions(lastCommit.subject)) {

console.info('Deploying all functions.');

return deployableFunctions;

}

if (lastCommit.subject.startsWith('chore(deps') === true) {

console.info('Dependency update, checking dependant functions.');

const dependencies = await depcheck(

join(__dirname, '../../../../'),

DEPENDENCY_CHECK_OPTIONS,

);

/**

* Dependency commit messages are structures as follow:

* - chore(deps): bump firebase-functions from 3.6.0 to 3.6.1

* - chore(deps): bump @google-cloud/firestore from 3.7.4 to 3.7.5

*/

const [, , updatedDependency] = lastCommit.subject.split(' ');

const filesUsingDependency = dependencies.using[updatedDependency];

return getChangedFunctions(deployableFunctions, filesUsingDependency);

}

console.info('Running file change check.');

const { changedFiles } = await getChangedFilesForRoots(

[join(__dirname, '../../../../src')],

{ lastCommit: true, withAncestor: true },

);

const changedFunctions = await getChangedFunctions(

deployableFunctions,

Array.from(changedFiles),

);

if (changedFunctions.length > 0) {

console.info('Changed functions detected.');

return changedFunctions;

}

/**

* Unable to determine minimum functions to deploy, deploy everything.

*/

console.info('Unable to detect changed functions, deploying everything.');

return deployableFunctions;

};

export const deploy = async (projectId: string): Promise<boolean> => {

const functionNames = await getFunctionsToDeploy();

const sortedFunctionNames = functionNames.sort(

(first: string, second: string): number =>

first < second ? -1 : second < first ? 1 : 0,

);

if (sortedFunctionNames.length > 0) {

console.info('Deploying the following functions: ');

console.table(sortedFunctionNames);

}

const functionNamesGroups = splitEvery(

DEPLOYMENT_BATCH_SIZE,

sortedFunctionNames,

);

const runFirebaseDeployPromises: Array<Promise<boolean>> = [];

for (const functionNamesGroup of functionNamesGroups) {

const functionDeploymentFilteringSentinel = functionNamesGroup

.map((functionName: string): string => `functions:${functionName}`)

.join(',');

runFirebaseDeployPromises.push(

runFirebaseDeploy(`${functionDeploymentFilteringSentinel}`, projectId),

);

/*

* Cloud Functions allow a maximum of 30 deployments per minute, but that is

* independent of the time it takes to deploy a function.

*/

/* eslint-disable-next-line no-await-in-loop */

await new Promise((resolve: (_: unknown) => void): void => {

setTimeout(resolve, 60_000);

});

}

return (await Promise.all(runFirebaseDeployPromises)).every(

(succeeded: boolean): boolean => succeeded,

);

};// utils/firebaseDeploymentHelper.ts

import { spawn } from 'child_process';

import { join } from 'path';

import { env as environment } from 'process';

const ROOT_PATH = join(__dirname, '..', '..', '..', '..');

export const runFirebaseDeploy = async (

component: string,

projectId: string,

): Promise<boolean> => {

/* eslint-disable unicorn/prevent-abbreviations */

const deploymentProcess = spawn(

'firebase',

['deploy', '--force', `--only=${component}`, `--project=${projectId}`],

{ cwd: ROOT_PATH, env: environment },

);

/* eslint-enable unicorn/prevent-abbreviations */

deploymentProcess.stdout.on('data', (data: Buffer): void => {

console.log(data.toString());

});

deploymentProcess.stderr.on('data', (data: Buffer): void => {

console.error(data.toString());

});

return new Promise<boolean>((resolve: (result: boolean) => void): void => {

deploymentProcess.on('close', (code: number): void => {

console.log(`Exit code: ${code.toString()}.`);

resolve(code === 0);

});

});

}; |

Wow, thank you so much! I will definitely update for my project and see how it goes. |

@mbleigh I am noticing the issue does appear to be somewhat resolved after upgrading from 9.5.0 to 9.6.1. However, it still fails over some number of functions deployed simultaneously. I'm able to get about 60-70 deployed with one call to |

|

Hey all, thanks for bringing this up again. I made some changes in a recent refactor of this code path that I hoped would help situations like this, but I missed these quota issues because of some wonkiness in quota enforcement. I'm working on a PR to retry on quota errors, which should help prevent these issues you're seeing. |

|

@joehan Do you think it would be possible to re-evaluate the need for this quota on the back end side? It's been a few years now, maybe something was optimized in the meantime and it no longer needs to be that strict? I also find it surprising that this quota is global, not per region. In my case, I'm deploying to four regions, so my deployment needs to be 4x slower than if I'd be deploying to one region only. Once I start adding more regions, the issue will only get worse. |

|

@merlinnot Sorry for the slow reply on this, it slipped off my radar after the initial fix. I just asked someone from Google Cloud Functions, and unfortunately they're not open to increasing the default or the limitsfor this quota. However, you should definitely request a higher quota if you need it: https://cloud.google.com/compute/quotas#requesting_additional_quota |

|

I did request it, but after evaluation it was actually lowered from the default for my project (I was constantly utilizing the quota right up to that limit and it was causing instability for other users). Do you think we could reopen this feature request, as it still seems valid? |

|

That feature would indeed be very helpful. |

Google Cloud functions has a quota on API calls (WRITE), which makes deployments of large number of functions impossible. This can be solved by limiting the number of requests

firebase-toolsmakes per 100 seconds by queueing and performing operations within this limitation.I'd be willing to work on adding support for this scenario, please let me know if such a PR would be accepted.Update: don't have capacity for this at the moment.The text was updated successfully, but these errors were encountered: