
We have a pretty sizable deployment of the kustomize-controller (v0.32.0) with increased quota, running on a node with a 64-core CPU and 256GB of memory, so capacity is not an issue.

We also have concurrency set to 96. Everything else is pretty much out of the box. Things run well for a good 3-4 hours on our EKS cluster, but eventually the kustomize-controller starts piling up failed reconciliations, all with the following error: `failed to update status, error: timed out waiting for the condition`

After that point, every subsequent Kustomization reconciliation produces the same error, and CPU and memory usage hover near 0 even though we have up to 20K Kustomization CRs at any given time. The errors don't go away until we restart the kustomize-controller.

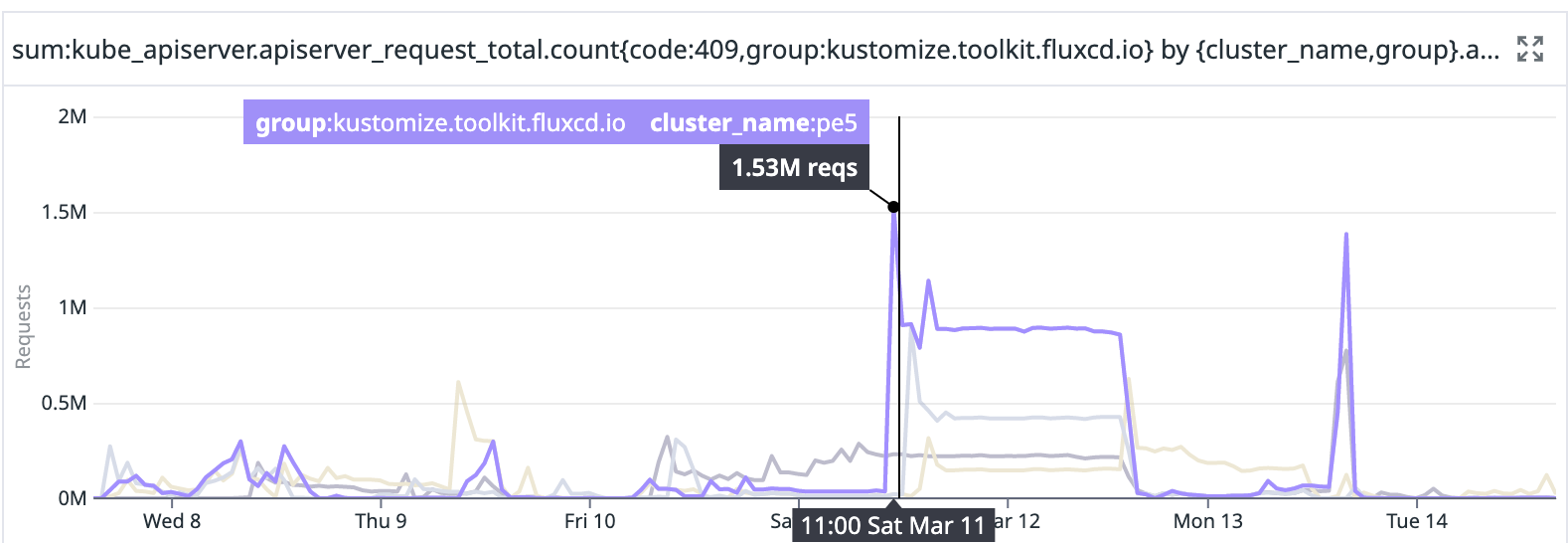

Inspecting our k8s api server requests shows that there are a bunch of HTTP 409s:

Further digging into the API server logs shows a typical failing request, which appears to be a patch that updates the Kustomization CR status:

{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"ff85d972-d379-4e2c-b765-f732c728b7b4","stage":"ResponseComplete","requestURI":"/apis/kustomize.toolkit.fluxcd.io/v1beta2/namespaces/nmspc/kustomizations/wrkld/status?fieldManager=gotk-kustomize-controller","verb":"patch","user":{"username":"system:serviceaccount:flux-system:kustomize-controller","uid":"bd13c9b0-b084-4c2a-a27e-1f5c3c35de81","groups":["system:serviceaccounts","system:serviceaccounts:flux-system","system:authenticated"],"extra":{"authentication.kubernetes.io/pod-name":["kustomize-controller-55cf9d7bfc-txhjs"],"authentication.kubernetes.io/pod-uid":["aadbd80f-ded0-4e50-825e-de607ad3235c"]}},"sourceIPs":["10.8.30.16"],"userAgent":"kustomize-controller/v0.0.0 (linux/amd64) kubernetes/$Format","objectRef":{"resource":"kustomizations","namespace":"nmspc","name":"wrkld","apiGroup":"kustomize.toolkit.fluxcd.io","apiVersion":"v1beta2","subresource":"status"},"responseStatus":{"metadata":{},"status":"Failure","reason":"Conflict","code":409},"requestReceivedTimestamp":"2023-03-14T18:01:31.905898Z","stageTimestamp":"2023-03-14T18:01:31.916156Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"cluster-reconciler-flux-system\" of ClusterRole \"cluster-admin\" to ServiceAccount \"kustomize-controller/flux-system\""}}
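To see whether the conflicts are concentrated on a few objects or spread across all of them, audit events like the one above can be tallied per Kustomization. The following is a minimal sketch, assuming the audit log is available as one JSON event per line. The function name `count_status_conflicts` and the trimmed `sample` event are illustrative, and only fields visible in the event above are read.

```python
import json
from collections import Counter


def count_status_conflicts(lines):
    """Count HTTP 409 responses to status patches, keyed by namespace/name."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON audit events
        if not isinstance(event, dict):
            continue
        ref = event.get("objectRef") or {}
        response = event.get("responseStatus") or {}
        if (
            event.get("verb") == "patch"
            and ref.get("resource") == "kustomizations"
            and ref.get("subresource") == "status"
            and response.get("code") == 409
        ):
            counts[f"{ref.get('namespace')}/{ref.get('name')}"] += 1
    return counts


# Trimmed-down copy of the audit event shown above (illustrative only).
sample = json.dumps({
    "kind": "Event",
    "verb": "patch",
    "objectRef": {
        "resource": "kustomizations",
        "namespace": "nmspc",
        "name": "wrkld",
        "subresource": "status",
    },
    "responseStatus": {"status": "Failure", "reason": "Conflict", "code": 409},
})

if __name__ == "__main__":
    for name, count in count_status_conflicts([sample]).most_common(10):
        print(name, count)
```

Feeding the function an open file handle instead of a list works the same way, since it only iterates over lines.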

Looking at the managed fields for one of the Kustomization CRs shows two owners for the status subresource, which looks suspicious:

As you can see above, the status subresource is owned by both gotk-kustomize-controller and kustomize-controller. Is this intended?
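The same check can be scripted across many objects. This is a minimal sketch, assuming the object has been exported as JSON with its managed fields included (for example via `kubectl get ... -o json --show-managed-fields`). The helper name `status_managers` and the `example` object are illustrative; the example lists only the two manager names mentioned above and is not a copy of the real managed fields.

```python
def status_managers(obj):
    """Return the sorted names of field managers that own the status subresource."""
    entries = obj.get("metadata", {}).get("managedFields", [])
    return sorted(
        {
            entry.get("manager")
            for entry in entries
            if entry.get("subresource") == "status" and entry.get("manager")
        }
    )


# Illustrative object: only the keys this helper reads are filled in.
example = {
    "metadata": {
        "name": "wrkld",
        "namespace": "nmspc",
        "managedFields": [
            {"manager": "kustomize-controller"},  # entry for the main resource
            {"manager": "gotk-kustomize-controller", "subresource": "status"},
            {"manager": "kustomize-controller", "subresource": "status"},
        ],
    }
}

if __name__ == "__main__":
    managers = status_managers(example)
    if len(managers) > 1:
        print("status has multiple owners:", ", ".join(managers))
```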

I also noticed this commit that added more patches to update the status more regularly. Is there a possibility for it to conflict with patches/updates that happen prior?
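For reference, here is a toy model of how a conflicting status write could end in this error. It is not the controller's code, and whether the controller behaves this way is unconfirmed. It assumes two things: the API server rejects a write that carries a stale `resourceVersion` with HTTP 409, and the writer retries until a deadline. `ToyStore`, `Conflict`, and `patch_with_retry` are hypothetical names, and the error text is simply copied from the log message above.

```python
import time


class Conflict(Exception):
    """Stands in for an HTTP 409 Conflict response."""


class ToyStore:
    """In-memory stand-in for a single API object guarded by a resourceVersion."""

    def __init__(self):
        self.resource_version = 1
        self.status = {}

    def get(self):
        return self.resource_version, dict(self.status)

    def patch_status(self, expected_version, status):
        # Optimistic concurrency: reject writes based on an outdated version.
        if expected_version != self.resource_version:
            raise Conflict(f"stale resourceVersion {expected_version}")
        self.status = dict(status)
        self.resource_version += 1


def patch_with_retry(store, status, read=None, timeout=0.05, interval=0.005):
    """Retry on conflict until the deadline; `read` can inject a stale cache."""
    read = read or store.get
    deadline = time.monotonic() + timeout
    while True:
        version, _ = read()
        try:
            store.patch_status(version, status)
            return True
        except Conflict:
            if time.monotonic() >= deadline:
                raise TimeoutError("timed out waiting for the condition")
            time.sleep(interval)


if __name__ == "__main__":
    store = ToyStore()
    patch_with_retry(store, {"ready": True})  # fresh read, succeeds

    def stale_read():
        return 1, {}  # a cache that never catches up

    try:
        patch_with_retry(store, {"ready": False}, read=stale_read)
    except TimeoutError as err:
        print(err)
```

In this model a writer that re-reads the live object recovers after a conflict, while one that keeps reading a stale copy fails on every attempt until the timeout. That would match an error that persists until restart, but it is only one possible explanation.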

Fwiw, even if we turn down concurrency to 8, we eventually run into this cascading error, though it takes longer. Anyhow, if you have any idea what's going on or suggestions on things to try out, I'm all ears.

Steps to reproduce

Install Flux v0.38.3, which ships with kustomize-controller v0.32.0.

Create 15-20K Kustomization CRs with pruning enabled.

Have the Kustomization CRs (KCRs) track a Flux repo that receives commits roughly every 10-15 minutes. These commits add and/or remove 500+ KCRs, which triggers pruning.

Leave the controller running for 3-4 hours.

Eventually all reconciliations fail with `failed to update status, error: timed out waiting for the condition`, and the errors do not go away until the kustomize-controller is restarted.
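The bulk-creation step above can be sketched as a small manifest generator. This is only an illustration: the object names, paths, namespace, interval, and the `flux-repo` source name are placeholders, not values from our setup. It emits a JSON `List`, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def make_kustomization(index, namespace="flux-system", source="flux-repo"):
    """Build one Kustomization (v1beta2) with pruning enabled; names are placeholders."""
    return {
        "apiVersion": "kustomize.toolkit.fluxcd.io/v1beta2",
        "kind": "Kustomization",
        "metadata": {"name": f"load-test-{index:05d}", "namespace": namespace},
        "spec": {
            "interval": "10m",
            "path": f"./workloads/{index:05d}",
            "prune": True,
            "sourceRef": {"kind": "GitRepository", "name": source},
        },
    }


def make_list(count):
    """Wrap `count` Kustomizations in a v1 List so they can be applied together."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [make_kustomization(i) for i in range(count)],
    }


if __name__ == "__main__":
    # Small count for a dry run; raise it toward 15000-20000 to approach the reported scale.
    print(json.dumps(make_list(3), indent=2))
```

At the reported scale it is probably better to write the output in several smaller files than in one very large list.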

Expected behavior

The controller should recover from the failure and resume reconciling.

Hi, thanks for reporting this issue.

It'd be very helpful to get some more information about the observed state of the Kustomization objects when this happens, specifically the status of the object. Can you share with us what the status of Kustomization objects affected by this looks like? It'll help us in reasoning about the issue and maybe reproduce it more easily without waiting for it to happen.


Screenshots and recordings

No response

OS / Distro

Amazon linux (linux/amd64)

Flux version

v0.38.3

Flux check

► checking prerequisites

✗ flux 0.38.3 <0.41.1 (new version is available, please upgrade)

✔ Kubernetes 1.22.16-eks-ffeb93d >=1.20.6-0

► checking controllers

✔ helm-controller: deployment ready

► ghcr.io/fluxcd/helm-controller:v0.28.1

✔ kustomize-controller: deployment ready

► ghcr.io/fluxcd/kustomize-controller:v0.32.0

✔ notification-controller: deployment ready

► ghcr.io/fluxcd/notification-controller:v0.30.2

✔ source-controller: deployment ready

► ghcr.io/fluxcd/source-controller:v0.33.0

► checking crds

✔ alerts.notification.toolkit.fluxcd.io/v1beta2

✔ buckets.source.toolkit.fluxcd.io/v1beta2

✔ gitrepositories.source.toolkit.fluxcd.io/v1beta2

✔ helmcharts.source.toolkit.fluxcd.io/v1beta2

✔ helmreleases.helm.toolkit.fluxcd.io/v2beta1

✔ helmrepositories.source.toolkit.fluxcd.io/v1beta2

✔ kustomizations.kustomize.toolkit.fluxcd.io/v1beta2

✔ ocirepositories.source.toolkit.fluxcd.io/v1beta2

✔ providers.notification.toolkit.fluxcd.io/v1beta2

✔ receivers.notification.toolkit.fluxcd.io/v1beta2

✔ all checks passed

Git provider

No response

Container Registry provider

No response

Additional context

No response

Code of Conduct
