Classification of the MNIST dataset in PyTorch using three different approaches:

- Convolutional Neural Networks (CNN)

- Contrastive Learning (CL) framework SimCLR

- Multiple Instance Learning (MIL)

MNIST stands for the Modified National Institute of Standards and Technology dataset. It contains 70,000 grayscale images of handwritten single digits (0 to 9), each 28×28 pixels, split into a training set of 60,000 examples and a test set of 10,000 examples.

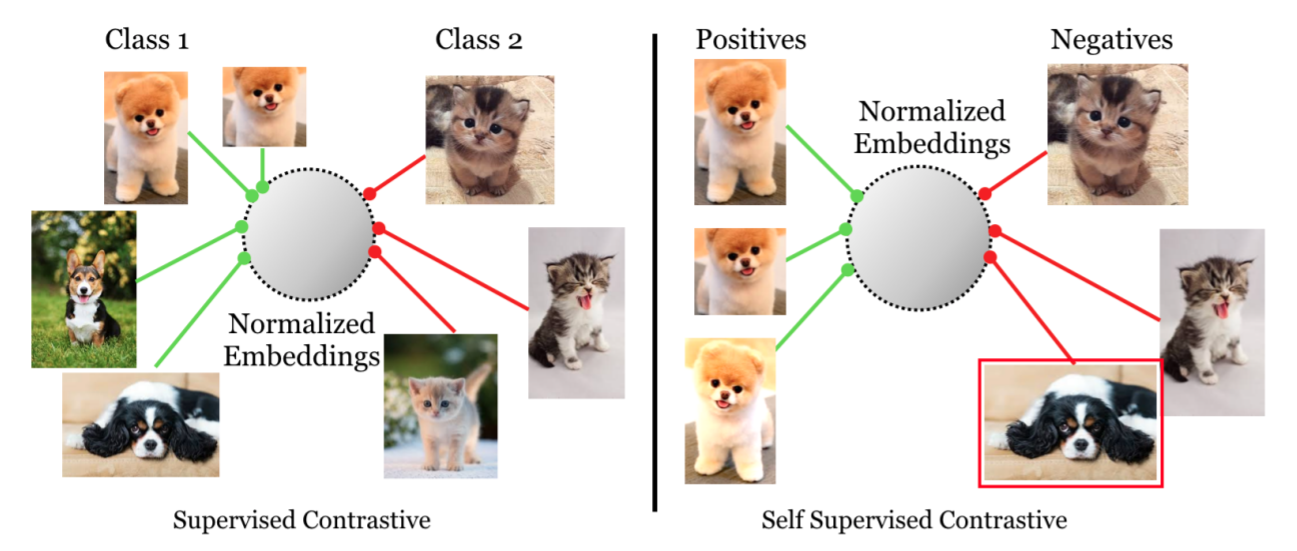

In recent years, a resurgence of work in CL has led to major advances in self-supervised representation learning. The common idea in these works is the following: pull together an anchor and a “positive” sample in embedding space, and push apart the anchor from many “negative” samples. If no labels are available (unsupervised contrastive learning), a positive pair often consists of two data augmentations of the same sample, and negative pairs are formed by the anchor and randomly chosen samples from the minibatch.

More information on:

- SupContrast - Supervised (see https://arxiv.org/abs/2004.11362)

- SimCLR - Unsupervised (see https://arxiv.org/abs/2002.05709)
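
The pull-together / push-apart idea can be sketched as an NT-Xent style loss, the kind of objective used in SimCLR. This is a minimal sketch, not the code of this repository: the function name `nt_xent_loss`, the argument names and the default temperature of 0.5 are assumptions chosen for illustration. `z1[i]` and `z2[i]` are assumed to be the embeddings of two augmentations of the same image.

```python
import torch
import torch.nn.functional as F


def nt_xent_loss(z1: torch.Tensor, z2: torch.Tensor, temperature: float = 0.5) -> torch.Tensor:
    """Contrastive loss for a batch of positive pairs (z1[i], z2[i]).

    Hypothetical helper for illustration; names and defaults are assumptions.
    """
    n = z1.size(0)
    # L2-normalise so that the dot product equals the cosine similarity.
    z = F.normalize(torch.cat([z1, z2], dim=0), dim=1)      # (2n, d)
    sim = z @ z.t() / temperature                           # (2n, 2n)
    # An embedding must not act as its own positive or negative.
    self_mask = torch.eye(2 * n, dtype=torch.bool, device=z.device)
    sim = sim.masked_fill(self_mask, float("-inf"))
    # The positive of row i is row i + n, and the positive of row i + n is row i.
    targets = torch.cat([torch.arange(n, 2 * n), torch.arange(0, n)]).to(z.device)
    # Cross-entropy over each row: the positive is the "correct class",
    # every other sample in the minibatch is a negative.
    return F.cross_entropy(sim, targets)
```

Treating each row of the similarity matrix as a classification problem keeps the sketch short: the anchor is pulled towards its positive and pushed away from all other samples in the minibatch in a single `cross_entropy` call.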

In the classical (binary) supervised learning problem, one aims at finding a model that predicts the value of a target variable, y ∈ {0, 1}, for a given instance, x. In the MIL problem, however, instead of a single instance there is a bag of instances, X = {x1, ..., xn}, that exhibit neither dependency nor ordering among each other. We assume that n can vary between bags. There is also a single binary label Y associated with the bag. Furthermore, we assume that individual labels exist for the instances within a bag, i.e., y1, ..., yn with yk ∈ {0, 1} for k = 1, ..., n; however, there is no access to those labels and they remain unknown during training.
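
The bag setup can be illustrated with a small sketch that builds one bag from MNIST-like tensors. It relies on the standard MIL assumption, which is not stated in the paragraph above, that a bag is positive when at least one of its instances is positive. The helper `make_bag` and its parameters `target_digit` and `bag_size` are hypothetical names introduced only for this example.

```python
import torch


def make_bag(images, digits, target_digit=9, bag_size=8, generator=None):
    """Sample one bag X = {x_1, ..., x_n} and its binary label Y.

    Hypothetical helper: an instance counts as positive (y_k = 1) when its
    digit equals `target_digit`, and Y = max_k y_k (assumed MIL rule).
    """
    idx = torch.randint(0, images.size(0), (bag_size,), generator=generator)
    # Instance labels y_1..y_n exist, but a MIL model never sees them in training.
    instance_labels = (digits[idx] == target_digit).long()
    # Bag label Y: 1 if any instance is positive, else 0.
    bag_label = instance_labels.max()
    return images[idx], bag_label, instance_labels
```

Only the bag and `bag_label` would be passed to the model during training; `instance_labels` is returned here so that the hidden per-instance labels can be inspected afterwards.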

More information on:

- Attention (see https://github.com/AMLab-Amsterdam/AttentionDeepMIL)

- Gated Attention (see https://github.com/AMLab-Amsterdam/AttentionDeepMIL)
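
A minimal sketch of attention-based MIL pooling, in the spirit of the two variants linked above. The class name `AttentionPooling`, the layer sizes and the `gated` flag are assumptions for illustration and are not taken from this repository. Each instance embedding receives a weight, the weights sum to one over the bag, and the bag embedding is the weighted sum of the instance embeddings.

```python
import torch
import torch.nn as nn


class AttentionPooling(nn.Module):
    """Pools a bag of n instance embeddings into one bag embedding (sketch)."""

    def __init__(self, in_dim: int, hidden_dim: int = 128, gated: bool = False):
        super().__init__()
        self.V = nn.Linear(in_dim, hidden_dim)
        # The gated variant adds a sigmoid branch that modulates the tanh branch.
        self.U = nn.Linear(in_dim, hidden_dim) if gated else None
        self.w = nn.Linear(hidden_dim, 1)

    def forward(self, h: torch.Tensor):
        # h: (n, in_dim), one row per instance; n may differ from bag to bag.
        a = torch.tanh(self.V(h))
        if self.U is not None:
            a = a * torch.sigmoid(self.U(h))
        # Softmax over the instances, so the weights of one bag sum to 1.
        weights = torch.softmax(self.w(a), dim=0)            # (n, 1)
        z = (weights * h).sum(dim=0)                         # (in_dim,)
        return z, weights.squeeze(1)
```

Because the softmax runs over the instance dimension, the same module handles bags of different sizes, and the returned weights indicate which instances contributed most to the bag-level prediction.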

An ensemble is a collection of models whose combined predictions are intended to outperform each individual member.
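
One common way to combine predictions is to average the softmax probabilities of all members. The sketch below assumes that rule; the combination strategy actually used in this repository may differ, and `ensemble_predict` is a hypothetical name.

```python
import torch
import torch.nn as nn


@torch.no_grad()
def ensemble_predict(models, x):
    """Average the class probabilities of several classifiers (sketch)."""
    # Each model maps a batch x to logits of shape (batch, classes).
    probs = torch.stack([torch.softmax(m.eval()(x), dim=1) for m in models])
    mean_probs = probs.mean(dim=0)                           # (batch, classes)
    return mean_probs.argmax(dim=1), mean_probs
```

Averaging probabilities rather than hard votes keeps each member's confidence in the result, which matters when several models disagree on a borderline digit.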

- torch

- torchvision

- matplotlib

- numpy

- seaborn

This is for demonstration purposes only. The results are not properly validated: no validation protocol (e.g. K-fold cross-validation) is applied. The parameters are not optimized but arbitrarily chosen. The network is chosen to demonstrate every possible CNN layer. Early stopping and a learning-rate scheduler are implemented for demonstration as well.
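
Early stopping and a learning-rate scheduler can be combined as in the sketch below. The `EarlyStopping` class, the patience values and the synthetic validation losses are assumptions for illustration and are not taken from this repository; `ReduceLROnPlateau` is the scheduler shipped with PyTorch.

```python
import torch


class EarlyStopping:
    """Signals a stop once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


model = torch.nn.Linear(4, 2)                     # stand-in for the CNN
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=1
)
stopper = EarlyStopping(patience=3)

# Synthetic validation losses; a real loop would compute them every epoch.
val_losses = [1.0, 0.8, 0.9, 0.85, 0.81, 0.9]
for epoch, val_loss in enumerate(val_losses):
    scheduler.step(val_loss)                      # lowers the LR when the loss plateaus
    if stopper.step(val_loss):                    # stops after 3 epochs without improvement
        break
```

Giving the scheduler a shorter patience than the early-stopping criterion lets the learning rate drop at least once before training is abandoned.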

Individual performance of 10 CNNs trained on the same training dataset

Results after the Ensemble for minimizing loss and maximizing accuracy

TBD

Reach out to me: