
BERT EXTRACTION: Unable to reproduce results on MNLI #52

Comments


Hi Ahmad, thanks for your interest! An accuracy of 31.9% is worse than random guessing (about 33% on the three-way MNLI task). A few questions to help debug this:


Thanks a lot for the prompt response.


Hi Ahmad,


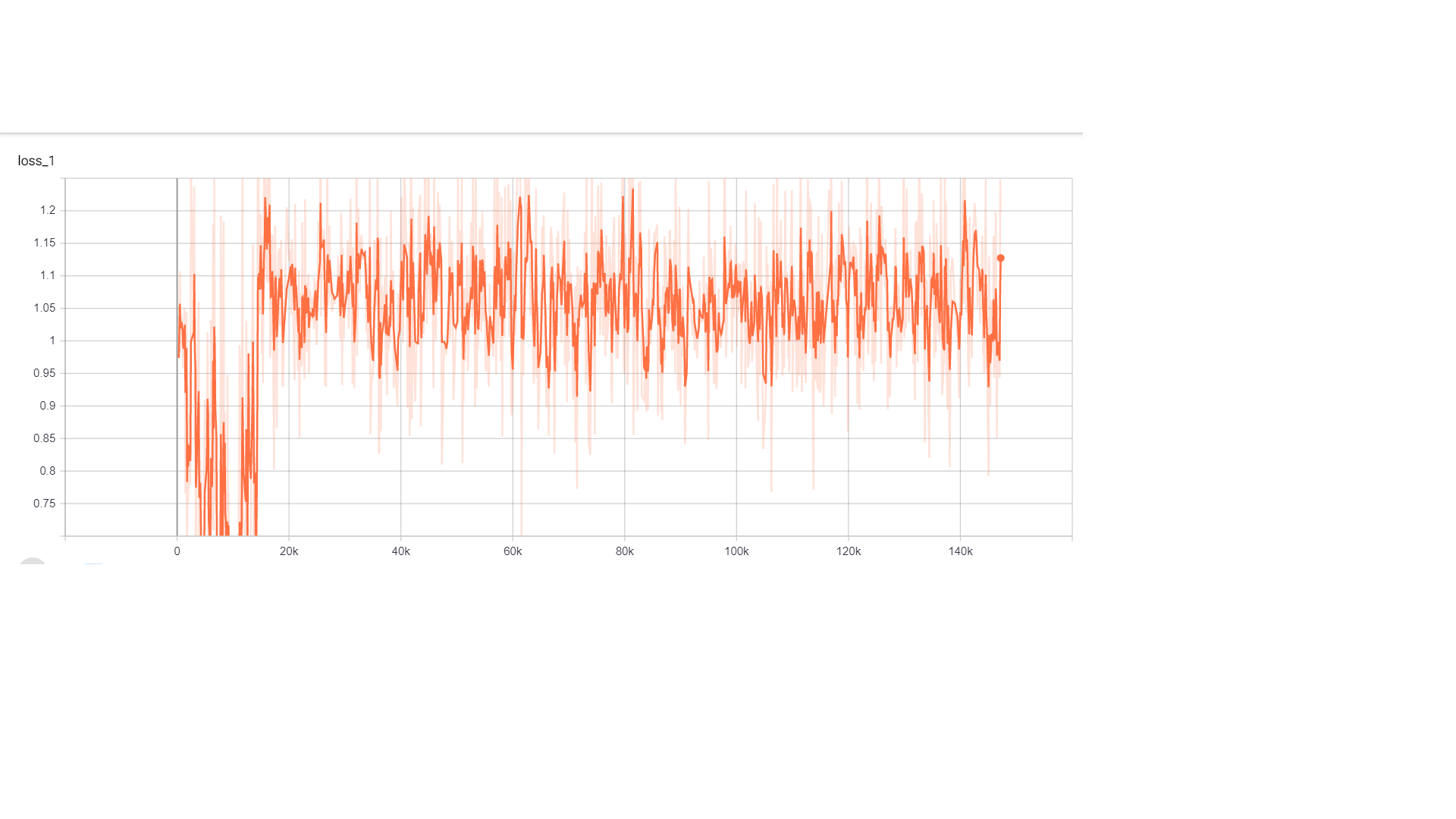

Regarding your curve, how many epochs are you training for, and what's your batch size? A loss of 1.1 indicates nothing is being learnt, but I do see a strong decrease after the first ~10k steps. Also, what are your learning rate, optimizer, and learning rate schedule? Finally, what hardware are you using?
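As a quick sanity check on the numbers above, the sketch below computes what uniform random guessing would give on a three-class task such as MNLI. It assumes roughly balanced classes, which is an assumption made here for illustration and not something stated in the thread.

```python
import math

NUM_CLASSES = 3  # MNLI labels: entailment, neutral, contradiction

# Accuracy expected from uniform random guessing (assumes balanced classes).
chance_accuracy = 1.0 / NUM_CLASSES

# Cross-entropy of a model that assigns probability 1/3 to every class.
chance_loss = -math.log(1.0 / NUM_CLASSES)

print(f"chance accuracy: {chance_accuracy:.3f}")  # 0.333
print(f"chance loss:     {chance_loss:.4f}")      # 1.0986
```

A training loss that sits near 1.1 and a dev accuracy near 0.32 are therefore both consistent with a model that has not learnt to separate the three classes.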


I am training it for 3 epochs with a batch size of 8 on an NVIDIA V100 GPU. The learning rate, optimizer, and schedule are the script defaults: `--learning_rate=3e-5`, with the same optimizer as the default for BERT.


I think the batch size might be the issue. Learning is less stable for RANDOM than for the original MNLI, and smaller batch sizes (hence noisier gradient estimates) could push the model off the optimization path. I'd recommend trying batch size 32. If it doesn't fit on the GPU, you could try BERT-base or gradient accumulation. Another thing you could try is learning rate decay. From your graph, it is clear that the training loss decreases during the warmup phase, but then the learning rate is too high and a bad gradient (from a small batch) can derail the optimization. You could also simply try a smaller learning rate, maybe 1e-5.
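To make two of the suggestions above concrete, here is a minimal, framework-free sketch. It is not the repository's training code: the toy model `y = w * x`, the helper names `grad_mse`, `accumulated_grad`, and `learning_rate`, and the `warmup_steps` and `total_steps` values are all hypothetical, chosen only for illustration. It shows that averaging the gradients of four micro-batches of 8 gives the same gradient as one batch of 32, and what a linear warmup followed by linear decay could look like.

```python
import random


def grad_mse(w, batch):
    """Gradient of mean squared error for the toy model y = w * x over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, micro_batch_size):
    """Average the gradients of equal-sized micro-batches (gradient accumulation)."""
    assert len(batch) % micro_batch_size == 0
    chunks = [batch[i:i + micro_batch_size]
              for i in range(0, len(batch), micro_batch_size)]
    return sum(grad_mse(w, chunk) for chunk in chunks) / len(chunks)


def learning_rate(step, total_steps, peak_lr=1e-5, warmup_steps=1000):
    """Linear warmup to peak_lr, then linear decay to zero (hypothetical values)."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / (total_steps - warmup_steps)


random.seed(0)
xs = [random.uniform(-1.0, 1.0) for _ in range(32)]
data = [(x, 3.0 * x + random.gauss(0.0, 0.1)) for x in xs]

full = grad_mse(0.5, data)              # one batch of 32
accum = accumulated_grad(0.5, data, 8)  # four micro-batches of 8
print(abs(full - accum) < 1e-9)         # True: the two gradients match
```

In a real training loop the accumulated gradient would be applied once per four micro-batches, so memory use stays at the batch-size-8 level while the update behaves like batch size 32.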


Thanks a lot for the suggestions. I will try these and report back.


Thanks Kalesh! I was able to get 78 on MNLI dev and 90 on SST-2 by reducing the learning rate to 1e-5. The loss curve is still not ideal, but it is much better than what we were seeing before.


Hi, I am a beginner in deep learning and have little experience implementing code. May I ask how you plotted the loss curve from TensorBoard? I would really appreciate your help!

Following the scripts, I trained a teacher model successfully, generated the extraction data, and ran knowledge distillation on the teacher logits. When evaluating on the dev set, I get an eval accuracy of 0.319.
