-

Notifications

You must be signed in to change notification settings - Fork 1.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

BigQuery: insert_rows does not seem to work #5539

Comments

|

|

|

If "go to my table" means checking the web UI, be aware that the UI doesn't refresh table state automatically. You should be able to issue a query against the table and expect the streamed records to be available. |

|

hi, there are no errors thrown, that's literally the only output produced. I came back to check today (via the UI), the table still has no rows. I run a manual query and it returned nothing. |

|

@epifab Note that the Trying to reproduce (Gist of $ python3.6 -m venv /tmp/gcp-5539

$ /tmp/gcp-5539/bin/pip install --upgrade setuptools pip wheel

...

Successfully installed pip-10.0.1 setuptools-39.2.0 wheel-0.31.1

$ /tmp/gcp-5539/bin/pip install google-cloud-bigquery

...

Successfully installed cachetools-2.1.0 certifi-2018.4.16 chardet-3.0.4 google-api-core-1.2.1 google-auth-1.5.0 google-cloud-bigquery-1.3.0 google-cloud-core-0.28.1 google-resumable-media-0.3.1 googleapis-common-protos-1.5.3 idna-2.7 protobuf-3.6.0 pyasn1-0.4.3 pyasn1-modules-0.2.1 pytz-2018.4 requests-2.19.1 rsa-3.4.2 six-1.11.0 urllib3-1.23

$ /tmp/gcp-5539/bin/python /tmp/gcp-5539/reproduce_gcp_5539.py

Schema:

------------------------------------------------------------------------------

[SchemaField('doi', 'STRING', 'REQUIRED', None, ()),

SchemaField('subjects', 'STRING', 'REPEATED', None, ())]

------------------------------------------------------------------------------

Errors:

------------------------------------------------------------------------------

[]

------------------------------------------------------------------------------

Fetched:

------------------------------------------------------------------------------

[Row(('test-157', ['something']), {'doi': 0, 'subjects': 1}),

Row(('test-325', ['something']), {'doi': 0, 'subjects': 1}),

Row(('test-73', ['something']), {'doi': 0, 'subjects': 1}),

Row(('test-524', ['something']), {'doi': 0, 'subjects': 1}),

...

Row(('test-805', ['something']), {'doi': 0, 'subjects': 1})] |

|

Please reopen if you can provide more information to help us reproduce the issue. |

|

I run the code again and printed the results from This time, all the records were correctly inserted in the table and basically instantly available. |

|

+1, currently also facing this issue exactly as epifab described |

|

+1, it works if i run it as a script, but not when I run it as part of a unittest class |

|

I had the same issue and managed to identify the problem. My service create a tmp table each time I call it and use a QueryJobConfiguration to copy data from this tmp table to the final destination table (BigQuery does not like when you Delete/Update while the streaming buffer is not empty that's why I am using this trick). this process flow did not work until I tried to use a US dataset instead of my initial EU dataset. to confirm the issue I deleted the US dataset and tried again on EU, same as before does not work. It is not the first time that I notice some discrepancies between data centers' region. |

|

|

|

Have got same issue here with insert_rows api. |

|

@zhudaxi, @heisen273 Please check the response returned from |

|

Hi @tseaver , the errors in my scripts is empty. |

|

Here is my very simple code to insert two rows. FYI. Here is the output of my script: |

|

In my script, the google-cloud-bigquery is in version 1.17.0. |

|

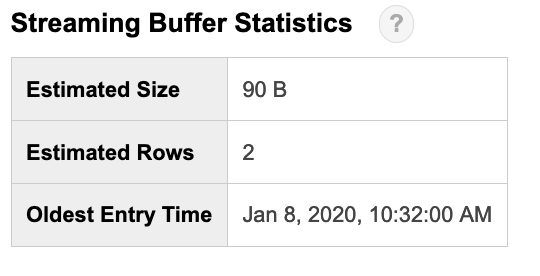

@zhudaxi You appear to be printing stale table stats. Try invoking client.get_table after performing some streaming inserts. |

|

@shollyman Thanks! |

|

Yes, if you want to consult the current metadata of the table you'll need to invoke get_table to fetch the most recent representation from the BigQuery backend service. As an aside, the streaming buffer statistics are computed lazily (as it's an estimation), so comparing it to verify row count is not an appropriate verification method. It may be the case that the buffer doesn't immediately refresh after the first insert, so that may be causing the issue you're observing. If you run a SELECT query that would include your inserted data and you find the data not present, that would indicate there's something more fundamental at issue. |

|

Thanks~ |

|

Any chance you're destroying and recreating a table with the same id?https://cloud.google.com/bigquery/troubleshooting-errors#metadata-errors-for-streaming-inserts |

|

@shollyman Thanks. |

|

I had the same problem. I got around it by using jobs to push data instead of Like this: Reference: https://cloud.google.com/bigquery/docs/loading-data-local |

|

The issue is eventual consistency with the backend. Replacing the table, while it has the same table_id, represents a new table in terms of it's internal UUID and thus backends may deliver to the "old" table for a short period (typically a few minutes). |

|

Hey folks, Has anyone found a reliable solution for this issue? I'm also facing the same issue, I insert like this: And I'm not receiving any error. However, if I go to my table in the GCP console, I don't see my inserted record, even half an hour later. |

|

Hi all! |

|

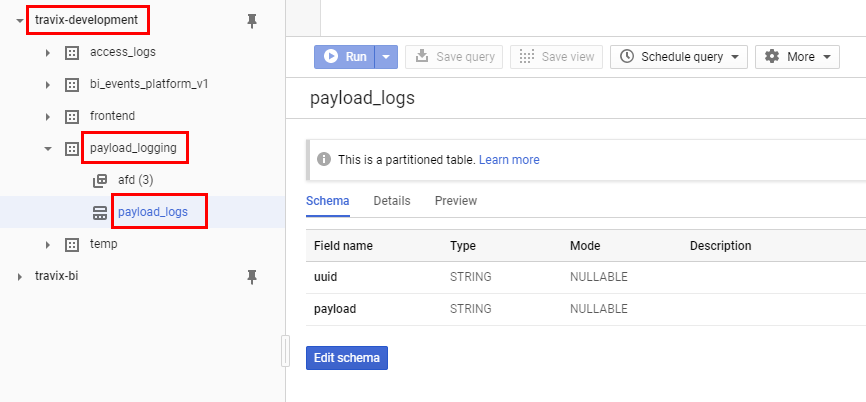

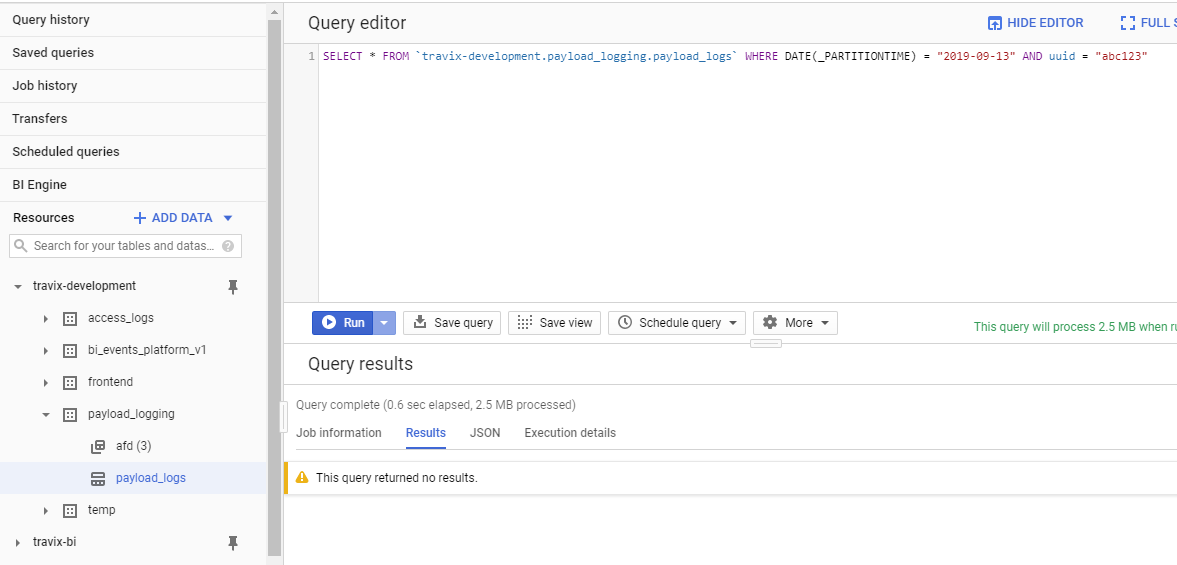

@heisen273 thanks for the reply! I was trying with a proper query, and not the Preview tab. (I also tried to query with the SDK from Python.) Just to show more concretely what I'm doing. I have this table in BQ: Then this is the actual code I'm running: Which prints success at the end. And then this query doesn't return any results, even half an hour later: Since this is such a basic scenario, I'm sure that I'm making some trivial mistake. Might this be related to partitioning? Do I maybe have to use |

|

Update: I tried some of the queries that weren't working yesterday, and now they are returning the results properly. So it seems that it takes even more time than half an hour for the results be available. This answer suggests that this is related to partitioning, and that it can take several hours for the result to be partitioned. Is it maybe possible to speed this up? |

|

Okay, I think I might have found a solution. In the "Streaming into ingestion-time partitioned tables" section on this page there is the suggestion that the partition can be explicitly specified with the syntax This is a bit surprising to me, because if I don't specify the partitiontime explicitly, then I'd expect BigQuery to simply take the current UTC date, which seems to be identical to what I'm doing when I'm specifying it in code. |

|

I just had a similar experience and can confirm that the solution that @markvincze suggest seems to work. First I was trying to run: In this case I got no errors. However, when looking in the UI I saw no data: I then used the solution mentioned above and added: after creating the table (and overwriting the |

|

I now tried the exact same code again, and the "Streaming Buffer Statistics" did not show up.. This seems highly irregular... |

|

Very late to the party but @adderollen I think you need to change the table_id. You have |

Hello,

I have this code snippet:

This produces the following output:

It seems like it worked, but when I go to my table it's empty. Any idea?

Python version: 3.6.0

Libraries version:

google-cloud-bigquery==1.1.0

google-cloud-core==0.28.1

The text was updated successfully, but these errors were encountered: