New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

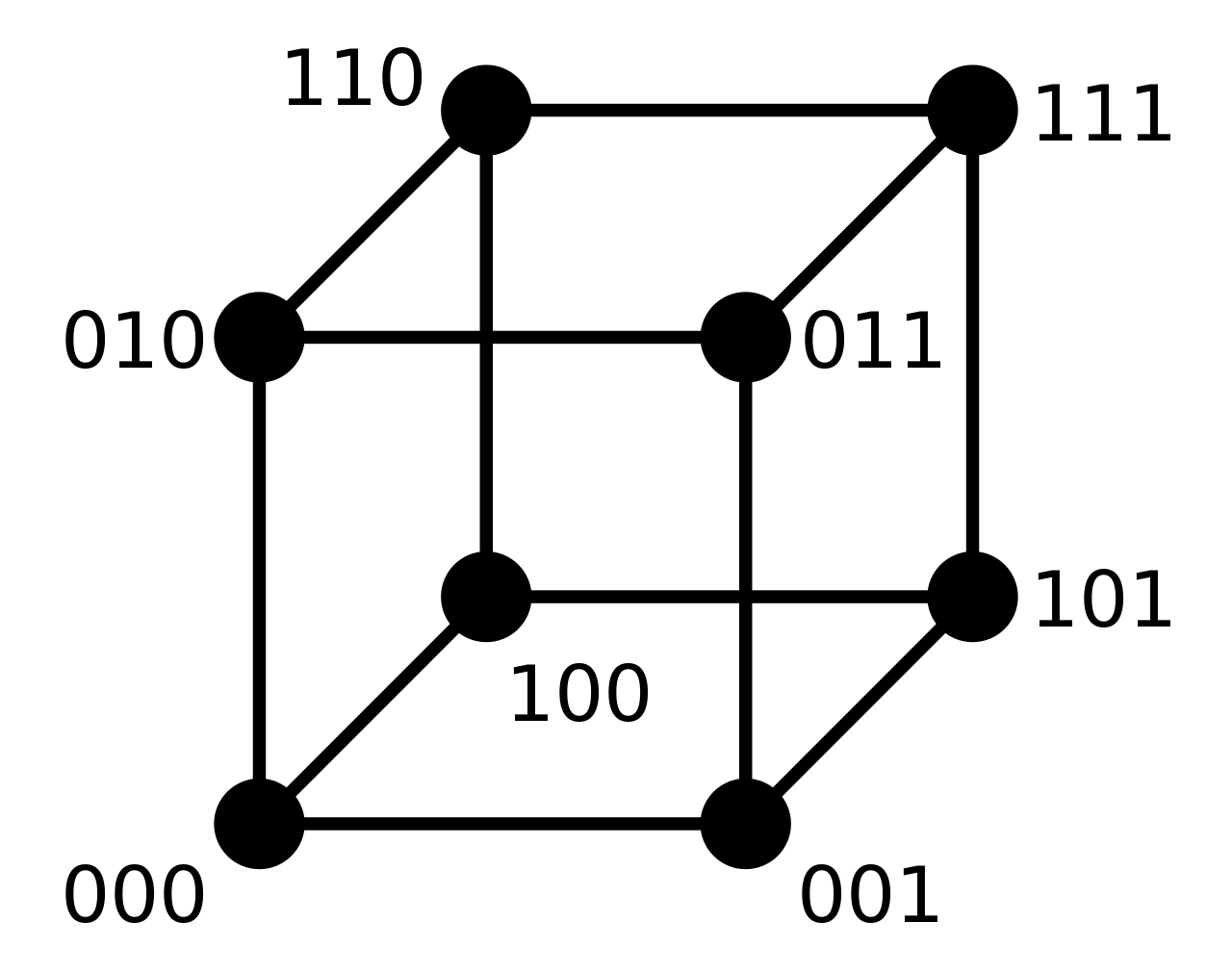

Pixelfly is a binary hypercube! #7

Comments

|

This is super cool! I love this interpretation :D It might relate to how this butterfly pattern of connection was used in telephone switching networks and computer networks Would love to chat more! |

|

Thanks for the reference. I did not know about telephone switching, but i've seen NVIDIA use the same pattern for adding vectors in parallel in scientific computing. Would love to chat as well. We can find each other on discord (i'm Random thoughts: 1. it is possible to halve the max distance in a hypercube graph by adding one more "edge" (in discussion with @denkorzh ) This may be useful if we scale pixelfly to very large models, e.g. GPT-3 has 12288 hidden units, this would translate to a 256x256 grid of 48x48 blocks. It would take 8 consecutive layers to propagate signal from block 00000000 to block 11111111. After folding, we get marginally more parameters, but it now takes only 4 layers (instead of 8) to connect any pair of blocks. 2.Relation to efficient convolutions and GNN / GCN ( proposed by @TimDettmers )

3. There are

[projected usefulness = low] 4. product spaces

[projected usefulness = none] 5. (low) there are two "brethren" to the binary hypercube that are more dense (in discussion with @denkorzh )

|

|

Update ( from @ostroumova-la )

source codeAgain, this may not reflect deep learning performance, but it just might work for transformers -- and it has less edges -> less compute. |

Okay, so imagine the full pixelfly matrix with 2^n blocks

Let's give each input block a number 0... 2^n-1

Then, the pixelfly matrix can be defined as such:

This is the same condition that defines a binary cube in n dimensions:

Ergo, pixelfly neurons actually form a cube and "connect" over the edges of said cube.

p.s. not my original thought, discovered in discussion with @ostroumova-la

p.p.s. if that is the case, are there other geometric shapes we could try?

So, for instance, fully connected matrix can be viewed as an n-dimensional simplex (triangle -> tetrahedron -> simples) because all blocks connect to all other blocks. Than goes the hypercube of pixelfly. Then what?

The text was updated successfully, but these errors were encountered: