Enable Transformer LT search space for Dynamic Neural Architecture Search Toolkit #197

This reverts commit 79a4758.

Signed-off-by: Maciej Szankin <maciej.szankin@intel.com>

Failing on timeout now. Locally the same test passes. Will re-run to see if anything has changed...

e08f567 to 858d7b0

0232314 to d1a11ce

Signed-off-by: Xinyu Ye <xinyu.ye@intel.com> Conflicts: test/nas/test_nas.py

d1a11ce to 758701c

/Azurepipeline run

@macsz We have root-caused the timeout issue and fixed it. We also rebased the branch. Now we are waiting for the test report; if it passes, we will merge today.

Azure Pipelines successfully started running 4 pipeline(s).

Thanks! Appreciate the help to rebase to speed things up! I will be monitoring the PR as well. |

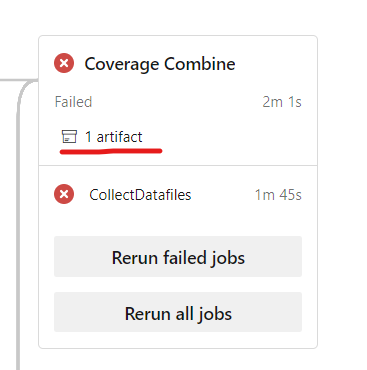

Merged master and removed the cleanup in the UTs that I added before you fixed the model cache problem. This triggered the CI to re-run the tests. So are we OK with this PR once it passes? To avoid additional delays, I won't touch anything until you say so. By the way, where in the CI can I see visual test coverage, like the one you posted, @chensuyue? I tried decoding the raw output, but it was a little too much...

Should we merge now for the code freeze, as @chensuyue suggested? I will work on adding unit tests as a follow-up to this PR.

…arch Toolkit (#197)

Signed-off-by: Maciej Szankin <maciej.szankin@intel.com>
Co-authored-by: Nittur Sridhar, Sharath <sharath.nittur.sridhar@intel.com>
Co-authored-by: Xinyu Ye <xinyu.ye@intel.com>
Signed-off-by: zehao-intel <zehao.huang@intel.com>

* add primitive_cache & weight_sharing & dispatcher_tuning ut
* fix pybandit
* add weight sharing with dispatcher ut
* change model load method
* change model load method
* add the ut of dispatcher tuning perf
* remove unuse files
* change moudle,dataset address
* remove unuse file
* fix the ut and add glog level =2
* add the time module
* change format
* remove the ir
* modify
* modify-
* review modify
* test modify
* modify for unuse

Co-authored-by: Wang, Wenqi2 <wenqi2.wang@intel.com>
Co-authored-by: sys-lpot-val <sys_lpot_val@intel.com>
Co-authored-by: Bo Dong <bo1.dong@intel.com>

Type of Change

Feature. Extends the set of search spaces supported by the Dynamic Neural Architecture Search Toolkit with a Transformer LT super-network for En-De language translation.

Description

DyNAS-T (Dynamic Neural Architecture Search Toolkit) is a SuperNet NAS optimization package (part of Intel Neural Compressor) designed to find the optimal Pareto front during neural architecture search while minimizing the number of search validation measurements. It supports single-, multi-, and many-objective problems across a variety of domains. The system currently relies heavily on the pymoo optimization library. Some of the key DyNAS-T features are:
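For readers unfamiliar with the term, here is a minimal sketch of what a Pareto front is in a two-objective search. It is an illustration only and not DyNAS-T's implementation (which, as noted above, relies on pymoo). The function name `pareto_front`, the objective pair (latency, error), and the sample values are all hypothetical, and both objectives are assumed to be minimized.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # hypothetical (latency_ms, error_rate) pair


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, assuming both objectives are minimized.

    A point p is dominated if some other point q is no worse in both
    objectives and differs from p (hence strictly better in at least one).
    """
    front = []
    for p in points:
        dominated = any(
            q[0] <= p[0] and q[1] <= p[1] and q != p
            for q in points
        )
        if not dominated:
            front.append(p)
    return front


# Hypothetical sub-network measurements, made up for illustration.
candidates = [
    (10.0, 0.30),
    (12.0, 0.25),
    (15.0, 0.27),  # dominated by (12.0, 0.25)
    (20.0, 0.20),
    (11.0, 0.35),  # dominated by (10.0, 0.30)
]

front = pareto_front(candidates)
print(front)
```

With these made-up values, three of the five candidates remain on the front. Each remaining point represents a different trade-off that no other candidate beats on both objectives at once. This brute-force check is quadratic in the number of candidates, which is fine for illustration but is not how a production search would do it.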

This PR extends the supported search spaces with Transformer-based Language Translation (transformer_lt_wmt_en_de) for English and German. The supernet implementation is based on Hardware Aware Transformers (HAT) by MIT HAN Lab.

How has this PR been tested?

To run an example, trained supernet weights and a preprocessed WMT En-De dataset are needed. Both can be downloaded from the Hardware Aware Transformers (HAT) repository.

Example code to test new functionality:

Dependency Change?

* fairseq
* sacremoses
* torchprofile

Signed-off-by: Maciej Szankin <maciej.szankin@intel.com>